Commons:IA books

Overview

As of 24 August 2021, 1,471,948 documents with a total size of 21 terabytes have been uploaded as part of the IA books project. This upload is not affiliated with any organization, and the project has received no funding.

See Village Pump July 2020 for the community notice. This now extremely large book scan upload project started out as a modest request to upload 600 volumes from the Internet Archive (IA).

The same code was extended and reused to upload other types of public domain and old (before 1925 or 1900) book scans from IA that are in easy-to-list 'collections', with ongoing reworking to make it faster and more robust against upload failures. The original talk page request was discussed in June 2020 (diff). The errors and sustained failure to upload very large PDFs to Commons are reported on Phabricator at Phab:T254459 (Large PDF upload issue).

See also: Commons:IA audio

- Sampler

-

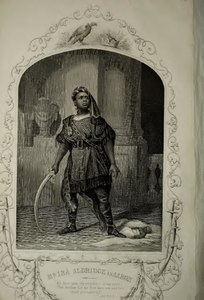

Illustration for Anglo-African magazine, 1859

-

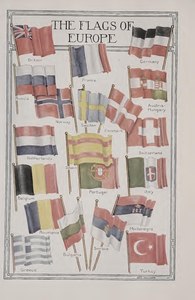

The world book, 1917

-

Example Catalogs of Copyright Entries volume, displaying page 11 of the 122MB, 1,400+ pages long PDF

-

Illustration in "The Story World" (1923), fork to import LoC Packard Campus magazine scans published before 1925

-

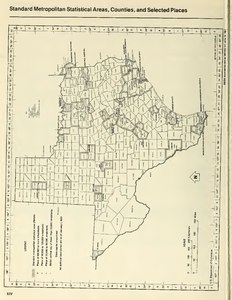

Map in Census of Housing, 1980 from Census Bureau Library

-

Cartoons and caricatures, Zimmerman, 1910

-

British Butterflies, 1860

-

Abendpost 1889, Deutschsprachige Zeitung, wurde aber in Amerika veröffentlicht

Useful stuff

Example searches

- Books in Latin: incategory:Scans_from_the_Internet_Archive hastemplate:language filemime:pdf insource:/language.1=la\}/

Analysis categories

These categories have been created for maintenance or interest, providing some alternative ways of finding different types of PDF in the collection.

- Category:IA mirror related deletion requests

- Category:IA books copyright review automatically suggested

- Category:IA books of at least 500 pages, Category:IA books documents with one page

- Category:Internet Archive index categories

- Category:IA books harmonization

- Category:Scans from the Internet Archive marked public domain

Configuration and technical

The uploads are all added to Category:Catalogs of Copyright Entries, though they may later be moved to subcategories.

The file names are in the format:

File:<title> (IA <IA ident>).pdf

The upload comment for later files links to this page and includes the number of retries that the upload took on that run, though this does not count failed upload attempts on previous runs. These later uploads also show a 'batch' number, which is the index in the IA search, which returns 674 documents. In the case of IA catalogofcopyrig313libr (230.75 MB) the comment shows the upload took two attempts; in practice the first failed attempt took an hour to time out. In the second case, File:Katalog und garten buch für 1909 - Samen, Pflanzen und Baume (IA CAT31290288).pdf (1.18 GB), the upload took seven attempts but used a later 'time-boxed' approach, which forces a time-out on each upload attempt, starting at 3 minutes and rising to a maximum of 15 minutes per try:

Copyright records from the Internet Archive catalogofcopyrig313libr (User talk:Fæ/CCE volumes) (retry 2) (batch #441)

Henry G. Gilbert Nursery and Seed Trade Catalog Collection CAT31290288 (User talk:Fæ/CCE volumes#Forks) (retry 7) (batch #1650)

The title is taken from the IA metadata. If more than 180 characters long, it defaults to the name of the collection. For example File:John Adams Library (IA actsofgeneralass00penn).pdf has a title of "Acts of the General Assembly of the commonwealth of Pennsylvania : passed at a session, which was begun and held at the borough of Lancaster, on Tuesday the first day of December, in the year of our Lord one thousand eight hundred and one .." for which an automatic rule to drop words at the end, or to split at punctuation, would probably work unpredictably badly in many cases. Files created with the default name are easily searched for, and the {{rename}} request template can be applied if helpful, keeping the IA identity at the end of the filename.
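The naming rule above can be sketched in a few lines. This is only an illustration, not the project's actual script: the function name `commons_filename` and the replacement of characters that MediaWiki rejects in titles are assumptions; the 180-character limit and the fallback to the collection name come from the text.

```python
MAX_TITLE_LENGTH = 180  # limit stated in the text above


def commons_filename(title, ia_ident, collection_name):
    """Build 'File:<title> (IA <IA ident>).pdf' for an upload.

    Falls back to the collection name when the IA title is too long.
    """
    name = title.strip()
    if len(name) > MAX_TITLE_LENGTH:
        name = collection_name
    # Assumption: characters not allowed in MediaWiki titles become '-'.
    for bad in "#<>[]|{}:/":
        name = name.replace(bad, "-")
    return "File:{} (IA {}).pdf".format(name, ia_ident)


if __name__ == "__main__":
    long_title = "Acts of the General Assembly " * 10  # well over 180 characters
    print(commons_filename(long_title, "actsofgeneralass00penn", "John Adams Library"))
```

Keeping the IA identity at the end of the name means a later manual rename can change the title part without losing the link back to the source item.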

A suggested structure for manual renames (under Criterion 2) is to rename to <short-title> (<surname>, <year>) (IA <IA ident>).pdf.

- Categories

Categories are not added by analysis at upload, instead only the main relevant (bucket) category will be added. Tools like VFC or Cat-a-lot can be usefully applied to searches like incategory:"United States Congressional Hearing Documents" North Korea and the results copied to additional categories.

Files use category keys of the IA identity, so images with similar IA idents should be clustered in a category regardless of specific filenames.

- Duplicates

Potential duplicates are detected by checking Commons for a file whose sha1 hash matches the sha1 value published by IA, meaning the file is digitally identical. This avoids downloading any files.

- Format

PDF (colour) was chosen as a preferred format, though the files are hosted at IA in plain text, ePub and other formats. Many of these files have black & white PDF alternates, but these appear overly grainy compared to the colour scans.

Copyright

A license of {{PD-USGov}} has been applied to the CCE volumes, as these Library of Congress publications are produced under a US Federal budget, though this is a generic choice.

Later forks, which may not be USGov works, are constrained by date, and a license of {{PD-US-expired}} is applied.

Software

The upload script was written using Pywikibot under Python 2, using Selenium to scrape the source list and metadata, as a quick solution forked from another upload project. It runs on a very old laptop using Ubuntu and relies on direct upload by WMF servers from the Internet Archive rather than caching these large files locally.

Due to Phab:T254459, larger PDFs, whether successfully uploaded or not, were taking ~70 minutes to time out the standard upload call because of multiple retries. A further refinement of the code used multiprocessing.Process to run the upload in a separate process, with a set time-out starting at 3 minutes. Repeated upload attempts increased the time-out.
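A minimal sketch of such a time-boxed retry loop is given below. It is not the project's code: the function names are hypothetical, and the linear growth of the time-out between attempts is an assumption (the page only states a start of 3 minutes and a maximum of 15 minutes). The `target` callable stands in for whatever performs the actual upload.

```python
import multiprocessing


def timebox_schedule(attempts, start=180, maximum=900):
    """Return one time-out in seconds per attempt.

    Assumption: time-outs rise linearly from `start` to `maximum`.
    """
    if attempts <= 1:
        return [start]
    step = (maximum - start) / (attempts - 1)
    return [round(start + i * step) for i in range(attempts)]


def run_timeboxed(target, args, timeouts):
    """Run target(*args) in a child process, once per time-out.

    Returns the 1-based attempt number that finished with exit code 0,
    or None if every attempt timed out or failed.
    """
    for attempt, limit in enumerate(timeouts, start=1):
        proc = multiprocessing.Process(target=target, args=args)
        proc.start()
        proc.join(limit)          # wait at most `limit` seconds
        if proc.is_alive():       # still running: abandon this attempt
            proc.terminate()
            proc.join()
            continue
        if proc.exitcode == 0:
            return attempt
    return None


if __name__ == "__main__":
    print(timebox_schedule(7))
```

Running the upload in its own process, rather than a thread, is what allows a stuck call to be terminated cleanly once its time-box expires.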

A second re-write eliminated the use of Selenium and instead adopted the Python internetarchive module to use direct API calls. {{Book}} started to be used for these collections. A significant improvement was to pull the file sha1 value from IA, which is available in the metadata without having to download the file. Consequently the Pywikibot generator of the type site.allimages(sha1=sha1,total=1) (ref API:Allimages) provides a quick way of testing if a digitally identical file exists under another filename on Commons.
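The duplicate test might look like the sketch below. The helper names are hypothetical; the only call taken from the text is `site.allimages(sha1=..., total=1)`. The sketch assumes the IA file metadata is available as a list of dictionaries with `name` and `sha1` keys (as the internetarchive module's item file listing provides), and that `site` is a Pywikibot site object for Commons.

```python
def pdf_sha1(ia_files):
    """Return the sha1 of the first PDF entry in IA file metadata, or None.

    Assumption: ia_files is a list of dicts with 'name' and 'sha1' keys.
    """
    for entry in ia_files:
        if entry.get("name", "").lower().endswith(".pdf") and "sha1" in entry:
            return entry["sha1"]
    return None


def already_on_commons(site, sha1):
    """True if Commons already hosts a file with this sha1.

    `site` is expected to offer allimages(sha1=..., total=...), the
    generator mentioned in the text; nothing is downloaded.
    """
    for _ in site.allimages(sha1=sha1, total=1):
        return True
    return False
```

A typical use would be to skip the upload when `already_on_commons(site, pdf_sha1(files))` is true, bearing in mind that the hash index may lag behind very recent uploads.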

A third re-write sped up the process by moving the time-boxed upload loop into a function. The function is called immediately after IA selection, the check for prior existence and generation of the Commons text page, with a short (40 s) time-box; only if that attempt fails is the file added to an array for repeated upload attempts. This also avoids holding a large array in memory, starts uploading immediately when launched, and makes it possible to run through IA search returns larger than 10,000* at a time. For example, a Biodiversity Heritage Library search for 1801-1850 returns 43,231 files to check, of which less than ~15% may need a longer time-box to complete upload or be un-uploadable.

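- * - 10,000 was the limit encountered when holding all search results in one array. (Python lists themselves are not capped at 10,000 items, so the limit probably came from the search results rather than the language.)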
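The two-stage flow of the third re-write can be sketched as follows. This is an illustration under assumptions: `first_pass` and `try_upload` are hypothetical names, and `try_upload(item, timeout)` stands in for the time-boxed upload function, returning True on success.

```python
def first_pass(items, try_upload, quick_timeout=40):
    """Try each item once with a short time-box as soon as it is seen.

    `items` may be a lazy iterator over search results, so the full
    result set never has to be held in memory. Only failures are kept.
    Returns (number uploaded, list of items needing longer attempts).
    """
    uploaded = 0
    retry_queue = []
    for item in items:
        if try_upload(item, quick_timeout):
            uploaded += 1
        else:
            retry_queue.append(item)
    return uploaded, retry_queue


if __name__ == "__main__":
    # Toy stand-in: pretend anything under 200 MB uploads within 40 s.
    sizes = {"small-a": 12, "huge-b": 950, "small-c": 80}
    done, retry = first_pass(iter(sizes), lambda ident, t: sizes[ident] < 200)
    print(done, retry)
```

Because most files succeed on the quick attempt, the retry list stays small even when the search returns tens of thousands of items.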

Bugs and work-arounds

Large files like CAT31291273 (1 GB) may have multiple upload attempts (10 in this case) but will be abandoned as impossible to upload due to unexplained WMF server or API bugs seen for some files from around 180 MB upwards. The bug is unpredictable: exceptions where large files uploaded successfully can be found with a search for files > 1¼ GB, and example files taking multiple upload attempts can be found at quarry:query/46234.

R42995AnOverviewoftheHousingFinanceSystemintheUnitedStates-crs is a duplicate on Internet Archive of IA_R42995-crs. Though the sha1 test would pick up this duplicate, the upload was still automatically attempted, probably because the database the API references for all sha1 values was lagging by longer than the time after the first upload, which was over 12 hours. This is an apparent bug on the IA databases, as there appears to be a failure to stop digitally identical files being added to the archives.

An experimental work-around was phab:T264529. This bypassed the normal upload processes by saving the file directly on the WMF maintenance server. The document file size is 2.8GB, however it may remain unreadable on the Commons website, with no thumbnails being created, even if it can be downloaded by reusers, see File:Chronological history of plants (IA 0399CHRO).pdf.

Many files were unnecessarily huge. They usually do not display properly on Commons:

- File:Müller - Elements of physiology (IA Muller1838na12J-a).pdf (1.67 GB instead of 561.11 MB)

- File:A manual of elementary geology (IA Lyell1855ya42W MS).pdf (1.82 GB instead of 65.55 MB)

- File:Asa Gray correspondence (IA asagraycorrespo00grayj).pdf (1.48 GB instead of 51.2 MB)

- File:Flora Brasiliensis (IA mobot31753002771134).pdf (1.25 GB instead of 60.94 MB)

- File:Carpenter - Principles of comparative physiology, 1854.pdf (1,628 MB instead of 82 MB)

- File:Journal d'horticulture pratique de la Belgique, 1852 (CAT31309742009).pdf (1,658 MB instead of 408 MB)

- File:B.K. Bliss and Son's illustrated spring catalogue and amateur's guide to the flower and kitchen garden (cat31348279).pdf (1,794 MB instead of 451 MB)

- File:A Greek-English Lexicon, 1883 (cu31924021605831).pdf (1,867 MB instead of 248.73 MB)

- File:A Greek-English lexicon, 1897 (cu31924012909697).pdf (1,892 MB instead of 248.45 MB)

- File:British Medical Journal, 1857 (1909britishmedic02brit).pdf (1,854 MB instead of 320 MB)

- File:Journal d'horticulture pratique de la Belgique, 1845 (cat31309742002).pdf (1,686.7 MB instead of 417.87 MB)

Files uploaded without image text pages are a relatively rare error, thought to be due to a long-term (unexplained) bug with WMF server failures. The text page is correctly posted with the file but never gets created. Examples of post-upload page recovery are at Category:IA uploads needing categories. A later revision of the upload script checks for the existence of FileVersionHistory (file exists) when pageid == 0 (display page does not exist) after upload attempts, and posts the text page a second time (this has never worked).

Phabricator tickets

- 2020 Jun 04 Phab:T254459 Large PDF upload issue

- 2020 Oct 04 Phab:T264529 Large file upload request for 0399CHRO

Forks

Based on the scripts developed for the CCE volumes, forks for other collections are:

Forks 0-20

- Some of these publications will have illustrations that are not NCI works, and may later need to have pages removed from the PDF if the rest of the volume stays available.

- Though part of the FEDLINK collections, they are not federal works. In this case a filter of 'before 1925' is applied so that {{PD-US-expired}} can be used automatically. Later volumes may still be public domain as copyright has not been registered, but this would take manual intervention. Due to the necessary filtering out of the original 1,000+ volumes only around 200 are uploaded.

- 20,577 R Henry G. Gilbert Nursery and Seed Trade Catalog Collection ← usda-nurseryandseedcatalog (Done)

- 3,300 R United States Census Bureau publications ← CensusBureauLibrary (Done)

- Many of these documents were first released on WikiLeaks and have a cover page with a large watermark. The cover page may have an abstract and references that add information that may be lost if the page is deleted from the PDF.

- 150,145 R Books in the Library of Congress ← library_of_congress (Done)

- 58,820 R Books from the Biodiversity Heritage Library ← biodiversity (Done)

- Several large files in this collection like CAT10988176007 (1.785 GB) "Herbier général de l'amateur" cannot be uploaded at the current time regardless of attempts at work-arounds; refer to the bugs above.

- 2,964 R BHL Field Notes Project ← fieldnotesclir2016 (Done)

- Housekeeping is needed on this collection as many are from the 1960s, but appear to be from US Federal projects and need a better PD-USGov license. Example ref.

- 168,949 R FEDLINK - United States Federal Collection ← fedlink (Done)

- <= 1895 added as {{PD-old-100-expired}}

- >= 1970 added as {{PD-USGov}}

- Documents in-between are not being added at this time

- 1,508 R US Navy Bureau of Medicine and Surgery Office of Medical History ← usnavybumedhistoryoffice (all should be PD-USGov) (Done)

- 152,481 R Medical Heritage Library ← medicalheritagelibrary (Done)

- 3,207 R John Adams Library ← johnadamsBPL (Done)

- 695 R California Academy of Sciences ← calacademy (Done)

- 3,081 R Taxonomy Archive ← taxonomyarchive (Done)

- 9,401 R Boston Public Library Anti-Slavery Collection ← bplscas (Done)

- 6,879 R University of Michigan Books ← michigan_books (Done)

Forks 20+

- 157,940 R California Digital Library ← cdl (CDL supports the Univ of Cal libraries)

- 3,257 R Scans from MBLWHOI Library ← MBLWHOI

- Some overlap with BHL uploads (CUbiodiversity), previously uploaded. A publisher test is included with this fork, so that files published after 1899 outside of an obvious US publisher are excluded.

- Breadcrumbs

- Testing for US publishers is only an issue for publications between 1899 and 1925, where the publisher might be outside the US. The filter to check for US publishing locations or US publishers is (where "!" = ","):

USlocs = "Wash.,Ala.,Ore.,Mass.,N.Y.,Va.,Minn.,Conn.,Cal.,Francisco,\

New York,Boston,Albany,Washington,Philadelphia,Chicago,Akron,\

Charles Scribner,D.C. Heath,American Book,\

Rand McNally,Mifflin,Scott. Foresman,Scott Foresman,\

Century Co,Bobbs.Merrill,Appleton &,Doub &,\

Charles E. Merrill,Moore & Langen,W.W. Howe,John Wiley,\

The University Publishing,Benj. H. Sanborn,Henry Holt,\

Little! Brown,American educational,A.L. Burt,Gregg Publishing,\

Laidlaw Brothers,A.S. Barnes,Atkinson! Mentzer,P. Blakiston,\

Saalfield,Richard G. Badger,Sanborn,G.P. Putnam,John C. Winston\

"
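How such a filter string might be applied is sketched below. This is a guess at the mechanics rather than the script itself: the helper names are hypothetical, only a short sample of the list is used, and treating each entry as a regular expression is an assumption suggested by entries such as "Bobbs.Merrill" and "Scott. Foresman". The "!" to "," substitution follows the note above.

```python
import re

# Short sample of the list above, for illustration only.
SAMPLE_USLOCS = "N.Y.,New York,Boston,Little! Brown,Bobbs.Merrill,Henry Holt"


def compile_us_patterns(uslocs):
    """Split the comma-separated list and restore '!' to ',' in each entry."""
    patterns = []
    for entry in uslocs.split(","):
        entry = entry.strip().replace("!", ",")
        if entry:
            # Assumption: entries are used as regular expressions.
            patterns.append(re.compile(entry))
    return patterns


def is_us_publisher(publisher, patterns):
    """True if any pattern is found anywhere in the IA publisher field."""
    return any(pattern.search(publisher) for pattern in patterns)


if __name__ == "__main__":
    pats = compile_us_patterns(SAMPLE_USLOCS)
    for name in ("Little, Brown and Company", "Oxford University Press, London"):
        print(name, "->", is_us_publisher(name, pats))
```

The "!" placeholder exists because the list itself is comma-separated, so a publisher name containing a comma could not otherwise be written as one entry.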

Queries

As a supplement to planned forks, a generalized search in IA advanced search query format can be used. For example:

creator:(Egypt Exploration Society) AND date:[1000-01-01 TO 1900-12-31]

Using the IA advanced search, this provides 45 document matches, though without a date restriction this would have been 86 documents. A default license of {{PD-old-100-expired}} is applied unless overridden. In the above example a bucket category of Category:Egypt Exploration Society was added as a command line option when running the batch uploads. If there is no date parameter, a year range of 1000 to 1900 is the default.

- sponsor:(Sloan) date:[1000 TO 1925] publisher:((New York) OR Chicago OR Jersey OR Illan)

- Though most Sloan sponsored scans had been uploaded, this query provided a way of finding only New York publications, so that {{PD-US-expired}} could apply for documents between 1900 and 1925. These were placed in Category:Scans sponsored by Sloan Foundation.

- mediatype:(texts) date:[1000 TO 1900] rights:((public domain))

- Older documents with PD asserted at IA, but missed with other upload criteria, populating Category:Scans from the Internet Archive marked public domain.

- creator:(smithsonian) date:[1000 TO 1925] mediatype:(texts)

- collection:(universallibrary) date:[1000 TO 1900]

- Category:Universal Library Project, uploads which were part of the early IA projects.

- collection:(pub_economist) date:[1840 TO 1879] & collection:(pub_economist) date:[1880 TO 1890]

- Category:Scans of The Economist, applies PD-old-70 to pre-1880 and PD-old-assumed to 1880-1890. Limiting to 1890 as a conservative approach to copyright. PD-US-expired added as secondary template.

- collection:(americana) date:[1000 TO 1869]

- Category:Old books from American Libraries, 1869 has been selected as a highly conservative date before which copyright will have expired for almost all works world wide.
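The default date handling for these queries could be sketched as below. This assumes the default range applies only when the query has no date clause of its own; the function name `with_default_dates` is hypothetical, and joining with AND is a choice made here to match the first example query.

```python
def with_default_dates(query, first_year=1000, last_year=1900):
    """Append the default year range unless the query already has a date clause."""
    if "date:" in query:
        return query
    return "{} AND date:[{} TO {}]".format(query, first_year, last_year)


if __name__ == "__main__":
    print(with_default_dates("creator:(smithsonian) mediatype:(texts)"))
    print(with_default_dates("collection:(americana) date:[1000 TO 1869]"))
```

Defaulting to 1900 rather than 1925 keeps an unattended query on the conservative side of the copyright cut-offs discussed above.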

Others

- Board of Trade Journal (post-1925 are loan-only)

- Billboard

- Examiner

- Federal Register

- Editor & Publisher

- The engineering and mining journal

- Evangelist and Religious Review

- Civil Service Journal

- State Magazine

- Congressional Record

- Bulletin of the Pan American Union

- Statistical Reporter

- Family Planning Digest

- Scans from Trent University Library

- Narberth Civic Association

- California Revealed

- Clinical Medicine

- The Rollins Sandspur

- Bentley's Miscellany

- The Biblical World

- Botanicus

- Federal Reserve Bank of New York Monthly Review

- Old books in Internet Archive additional collections

- Paper Trade Journal

- Country Life

- Gaylord Music Library

- Newman Numismatic Portal

- State Medical Society Journals

- Harvard botany libraries

- Aruba newspapers and periodicals

- Victoria Daily Times

- Women of the Book

- Scans from University of Turin

- Bharat Ek Khoj

- Public Library of India

- Charlotte Mason Digital Collection

- Ballards published in Wales or in Welsh from the Internet Archive

- Cardiff University Special Collections and Archives

- Newspapers in Italian

- William C. Wonders Map Collection

- The CIHM Monograph Collection

- Government of Alberta Publications Collection

An example failure is Category:Engineering News-Record, where hundreds of the earliest scans exist but have not been transcoded into PDF or DjVu downloads. It is worth repeating that large PDFs will consistently fail to upload via the WMF servers; for example, Federal Register vol 1, 1-121 (1936) is 639MB.

Suggestions for Collection Mirrors

Please add suggested collections at the end of the table, but before adding a collection please confirm it contains materials that are compatible with Commons licensing. Please note in the licensing section if there are works that should be excluded (such as works after a certain date). Some completed suggestions are hidden, but can be read in the wikitext.

| Collection | Summary | IA link | RSS or possible additional query links (if known) | Number of items | Licensing (if known) | Proposed for "mirroring" by | Status |
|---|---|---|---|---|---|---|---|
| Congressional Research Service | Reports and data produced by the Congressional Research Service (see also: https://archive.org/details/congressional-research-service?tab=about) | https://archive.org/details/congressional-research-service | | 9515 | Works of a department, supporting agency or office of the US Congress, so potentially PD-US Gov? | ShakespeareFan00 (talk) 11:14, 19 June 2020 (UTC) | Complete, but see |
| Abendpost & Sonntagpost 1889-1979 | An American newspaper published in German, from microfilm | https://archive.org/details/pub_abendpost-sonntagpost | collection:(pub_abendpost-sonntagpost) date:[1700 TO 1925] gives 10,925 matches | ~11000 | There's no scans between 1921 and 1977, and the in-copyright ones can't be downloaded. | Prosfilaes (talk) 23:47, 16 December 2020 (UTC) | Completed |
| American Federation of Labor. Proceedings 1881-1955 | AFL proceedings until they merged into AFL-CIO | https://archive.org/details/pub_american-federation-of-labor-proceedings | | 135 | No evidence of renewal of this US work. Full view up to 1955. | Prosfilaes (talk) 00:46, 17 December 2020 (UTC) | Completed |
| American Red Cross Youth News 1919-1975 | A magazine for kids with stories and lots of pictures. | https://archive.org/details/pub_american-red-cross-youth-news | | ~65 | No evidence of renewal of complete issues of this US work. But John Mark Ockerbloom reports contributions renewed starting 1927, and a quick glance reveals material copied from other publications with their copyright noted, so unless someone wants to clean each issue, up to 1925. | Prosfilaes (talk) 00:46, 17 December 2020 (UTC) | Completed Category:American Red Cross Youth News |
| Pulp Magazine Collection | Various pulp magazines | https://archive.org/details/pulpmagazinearchive | collection:(pulpmagazinearchive) date:[1700 TO 1925] | 521 | The collection as a whole has a lot of questionable/clearly copyrighted works, but I've eyeballed all that query brings up and all seem to be PD for Commons, with the few pre-1925 non-American works all seem pre-1900. | Prosfilaes (talk) 02:42, 13 January 2021 (UTC) | Completed Category:Pulp magazines |
| 19th Century Novels | 19th Century Novels | https://archive.org/details/19thcennov | | 7,823 | Would it be possible to do it in the format %title% (%date% Volume %volume%) or %title% (%date%) to make it easier to import into Wikisource? Could you also post a list of titles to my userpage if you do this so that I can batch import them into Wikisource? A million thanks. | Languageseeker (talk) 02:44, 7 April 2021 (UTC) | |
| University of Toronto | Various High Quality Scans. One of the best resources on IA. | https://archive.org/details/university_of_toronto | | >730,000 | | Languageseeker (talk) 14:49, 7 April 2021 (UTC) | |
| University of California Libraries | Various High Quality Scans. One of the best resources on IA. | https://archive.org/details/university_of_california_libraries | | >390,000 | | Languageseeker (talk) 14:49, 7 April 2021 (UTC) | |
| John Carter Brown Library | Various High Quality Scans of rare books from 1400 - 1825. One of the best resources on IA. | https://archive.org/details/JohnCarterBrownLibrary | | 16,332 | | Languageseeker (talk) 14:49, 7 April 2021 (UTC) | |
| The Lincoln Financial Foundation Collection | Various High Quality Scans. One of the best resources on IA. | https://archive.org/details/lincolncollection | | 15,217 | | Languageseeker (talk) 14:49, 7 April 2021 (UTC) | Lincoln Financial Foundation Collection |
| John Geraghty uploads | 34 super rare books uploaded by a private collector | https://archive.org/search.php?query=creator%3A%22John+Geraghty%22 | Category:Book collection of John Geraghty | 34 | | Languageseeker (talk) 15:05, 9 April 2021 (UTC) | |
| Dickens Journal online | Complete runs of 4 periodicals edited by Charles Dickens in the 1850s | https://archive.org/details/djo | Category:All the Year Round | 51 | | Languageseeker (talk) 05:18, 13 April 2021 (UTC) | |
| The Victorian Collection | Rare printings of Victorian novels | https://archive.org/details/victorianbrighamyounguniv | | 1,194 | | Languageseeker (talk) 23:52, 13 April 2021 (UTC) | |
| Boston Public Library | High Quality scans including many rare books | https://archive.org/details/bostonpubliclibrary | | 64,191 | | Languageseeker (talk) 01:30, 16 April 2021 (UTC) | |

Suggested "diffusion" or "dispersal" categorisations for some collections...

| IA Collection | Commons category (subcat) |
|---|---|
| usdanationalagriculturallibrary | Category:Documents_from_the_U.S._Department_of_Agriculture,_National_Agricultural_Library |
| fdlpec | Category:Documents from the FEDLINK-U.S. Government Printing Office collection |
| blmlibrary | Category:Documents from the US Bureau of Land Management Library |
| library_of_congress | Category:Books from the Library of Congress |
| NISTresearchlibrary | Category:Documents from the NIST Research Library |
| cmslibrary | Category:Documents from the Centers for Medicare & Medicaid Services (CMS) Library |
| nihlibrary | Category:Documents from the US National Institutes of Health Library |

This table could be expanded though, and I see that portions of it may already be under consideration during the batch upload process. ShakespeareFan00 (talk) 13:01, 26 June 2020 (UTC)

Catalog errors

Any concerns about major errors (such as the metadata being for the wrong work) or omissions (such as missing author lifetimes, titles and authorship details) in the metadata should be noted on the talk page for the document concerned. Corrections can be made directly, but leave an appropriate edit summary or talk page comment.

If, while looking through any of the works uploaded in this project, you uncover potential copyright violations or documents incompatible with Commons licensing, please consider filing a detailed Deletion Request (ideally of grouped items) for the documents concerned. Include <noinclude>[[Category: IA mirror related deletion requests]]</noinclude> in the DR so that they can be tracked and, if needed, external providers also advised.

Metadata

It would be nice to use {{Internet Archive link}} instead of the bare URL to the detail page, so that it's easier to have a list of all such uploads later. Nemo 07:58, 1 July 2020 (UTC)

- Now being adopted as the standard. Housekeeping is being applied to prior files.

Housekeeping

Language

Many books have the language identified in the metadata. This varies in format, so being in French may be shown as fre, French, or FRE.

Some later uploads are having the {{language}} template applied to this parameter, which entails matching the IA metadata against the corresponding Wikimedia accepted two-letter code (which is inconsistent with any single external standard). The advantage of this template is that a reader will see the language name in their local language settings, so instead of "spa", they may read "Español". The codes used for each language appear to be governed by Names.php, ref VPT discussion.

For categorization purposes, this has the advantage of the collection being consistently searchable by language. Example search for books in Latin, Spanish, German, Yiddish.

Mapping used by automated housekeeping [<commons lang code>, <regex matching IA varied metadata language codes>]:

["ar","ara|arabic"],

["gez", "eth|ethiopic"],

["el","gre|greek"],

["haw","haw|hawaiian"],

["he","heb|hebrew"],

["yi","yid|yiddish"],

["it","ita|italian"],

["nl","dut|dutch"],

["pl","pol|polish"],

["pt","por|portuguese"],

["la","lat|latin"],

["ru","rus|russian"],

["de","ger|german"],

["es","spa|spanish"],

["fr","fre|french"],

["zh","chi|chinese"],

["en","eng|english"],

["ca","cat|catalan"],
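A minimal sketch of how a mapping of this shape can be applied is shown below. The function name is hypothetical, only four of the entries are repeated, and the choice of a case-insensitive full match is an assumption based on the "fre, French, or FRE" example.

```python
import re

# Subset of the mapping above: [commons code, regex for IA language values].
LANGUAGE_MAP = [
    ["la", "lat|latin"],
    ["de", "ger|german"],
    ["es", "spa|spanish"],
    ["fr", "fre|french"],
]


def commons_language_code(ia_language):
    """Return the Commons language code for an IA language value, or None."""
    value = ia_language.strip()
    for code, pattern in LANGUAGE_MAP:
        # Full match so that a longer, unrelated value is not caught by a prefix.
        if re.fullmatch(pattern, value, flags=re.IGNORECASE):
            return code
    return None


if __name__ == "__main__":
    for raw in ("fre", "French", "FRE", "spa", "Klingon"):
        print(raw, "->", commons_language_code(raw))
```

Values that match nothing return None, so such files can be left untouched for manual review rather than tagged with a wrong language.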

Harmonization with 2015 Flickr uploads

In 2015 an upload project pulled in illustration pages from books from the Internet Archive Flickrstream. A semi-automated and very slow housekeeping task is examining those uploads and checking to see if the associated IA PDF has been uploaded. If not, then the script attempts to upload the PDF and if necessary create a parent category so that the extracted images or page images are shown alongside the new PDF of the book.

This harmonization task attempts to find an existing reasonable looking parent category used in all or at least 10 existing image uploads, and if not found will create one, and retrospectively add it to the images matched in a search.
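The category selection rule can be sketched as follows. The function name is hypothetical and the "reasonable looking" judgement is not modelled; the sketch only covers the counting rule of "all or at least 10" images.

```python
from collections import Counter


def pick_parent_category(categories_per_image, min_images=10):
    """Return a category shared by all images, or by at least `min_images`.

    `categories_per_image` is a list with one list of category names per
    image. Returns None when no category qualifies, in which case a new
    parent category would have to be created.
    """
    counts = Counter()
    for categories in categories_per_image:
        counts.update(set(categories))  # count each category once per image
    if not counts:
        return None
    category, count = counts.most_common(1)[0]
    if count == len(categories_per_image) or count >= min_images:
        return category
    return None


if __name__ == "__main__":
    images = [["Book A", "Botany"], ["Book A"], ["Book A", "Maps"]]
    print(pick_parent_category(images))
```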

In a second scenario, where a pdf is found that matches the IA identity of the Flickr uploads, it checks to see if there is a single common category for all the images, and adds this to the pdf. Most often this will be a suitable book category, but in some cases it may be slightly more generic but still relevant.

Due to variation in category names, original book titles and how image pages may have been changed over the years, this housekeeping task is neither exhaustive nor fully reliable. Due to the large pdf WMF server bug, source pdfs over 200MB are unlikely to succeed, as each upload attempt is limited to a maximum of 30 minutes.

{{LicenseReview}} is added to uploaded pdfs with the year given as after 1924, encouraging a manual check. This does not imply that uploads dated 1924 or earlier are confirmed as public domain; in particular, serial runs of publications are a known date issue for the Internet Archive.

Examples:

- Category:A critical revision of the genus Eucalyptus, Volume 2

- Category:Internet Archive document gri 33125009339611

- Category:Internet Archive document AbbildungenundBVIPoto

- Category:Around the circle (1892) (retrospectively added to a pdf)

Affected PDFs are added to Category:IA books harmonization.

If in examining an upload it is found that the images for a given scan (or set of scans) are in a specific category which contains the images for that work (such as all the images from a Flickr set) and the scanned work in whole (as PDF or DjVu), then add Category:IA books harmonization/manual to the file information page for the PDF file, so that others are able to review the work and associated images more easily.

The 'harmonization' process has also uncovered image sets uploaded in good faith which, based on subsequent information such as review of metadata or information in the scans, are still in copyright or incompatible with Commons licensing. However, as not all images derived from a given PDF or DjVu scan may be problematic, as has been shown in related DRs, a speedy deletion (for copyvio) of all images in a given 'work' category would not be appropriate, especially for works dated prior to 1926. If you identify problematic images in a 'harmonized' work, please raise a DR for both the PDF/DjVu scan and any relevant images that are affected.

Automatic detection and deletion of cover pages

Archived document cover pages

A significant number of PDFs have a "Historic, archived document" page added to the front of the scanned book. These can be added to Category:Internet Archive (redundant cover page) where an automated task will attempt to delete the first two pages of the file. The script uses PyPDF2 to edit the PDFs.

On stashfailed error, the backlog category is removed and the file left unchanged, on the basis that more attempts will fail API upload, ref mw:API:Upload#Possible_errors. The process will re-attempt if the category is added back after this failure.

Up to 3 upload attempts in succession are made before moving to the next file. Non-fatal errors seen include: uploadstash-file-not-found, PdfReadWarning: Xref table not zero-indexed.

A different slow housekeeping process, which takes about 4 seconds per file and uses pytesseract, can be run for a given category. It opens the 800px wide thumbnail of the first page of each PDF in the category and checks if the first line of the page text can be resolved through optical character recognition to match "Historic, archived document" or "Historic, Archive Document" (or a similar variation). Matches are added to Category:OCR detected cover page, which then relies on human intervention to double-check results and move files to Internet Archive (redundant cover page). In the case of an 80,000 file category, this would take nearly 4 days, presuming no outages.
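The matching step and the run-time estimate can be sketched as below. The function names and the exact regular expression are assumptions; the OCR helper imports Pillow and pytesseract only when called, so the matching logic can be tried without them installed.

```python
import re

# Tolerates "archived"/"archive" and an optional comma, in any letter case.
COVER_RE = re.compile(r"historic,?\s+archived?\s+document", re.IGNORECASE)


def is_archive_cover(first_line):
    """True if the OCR'd first line looks like the archived-document banner."""
    return bool(COVER_RE.search(first_line))


def first_line_via_ocr(thumbnail_path):
    """OCR a downloaded thumbnail and return its first non-empty line."""
    from PIL import Image          # third-party: Pillow
    import pytesseract             # third-party: needs the tesseract binary
    text = pytesseract.image_to_string(Image.open(thumbnail_path))
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    return lines[0] if lines else ""


def estimated_days(file_count, seconds_per_file=4):
    """Rough run time in days at the per-file rate quoted above."""
    return file_count * seconds_per_file / 86400


if __name__ == "__main__":
    print(is_archive_cover("Historic, Archive Document"))
    print(round(estimated_days(80000), 1), "days")
```

At 4 seconds per file, 80,000 files come to 320,000 seconds, which is where the figure of nearly 4 days comes from.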

Where the PDF has a cover page, but only one page of real content, this processing generates an error by creating a PDF with no pages. For these rare instances a later housekeeping task rolls back to the original version and with the presumption that the cover page is still unwanted, trims off just the one physical page rather than two. The results are odd PDFs which contain just one page, so theoretically could be replaced with flat image versions. Faulty documents can be found using incategory:Internet_Archive_(redundant_cover_page_removed) filesize:<20 filemime:pdf and corrected pages are found in Category:IA books documents with one page.
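The page-count rule described above, including the one-page edge case, might be sketched like this. The function names are hypothetical, and the PDF helper assumes a PyPDF2 release that provides `PdfReader` and `PdfWriter` (older releases used different class names); it is imported lazily so the counting rule can be checked on its own.

```python
def pages_to_trim(total_pages, cover_pages=2):
    """How many leading pages to drop without leaving an empty PDF."""
    if total_pages - cover_pages >= 1:
        return cover_pages
    # Too short: keep at least one page of content.
    return max(total_pages - 1, 0)


def trim_leading_pages(src_path, dst_path, cover_pages=2):
    """Write a copy of src_path without its leading cover pages."""
    from PyPDF2 import PdfReader, PdfWriter  # third-party, assumed recent
    reader = PdfReader(src_path)
    total = len(reader.pages)
    skip = pages_to_trim(total, cover_pages)
    writer = PdfWriter()
    for index in range(skip, total):
        writer.add_page(reader.pages[index])
    with open(dst_path, "wb") as handle:
        writer.write(handle)
    return skip


if __name__ == "__main__":
    for total in (300, 2, 1):
        print(total, "pages -> trim", pages_to_trim(total))
```

Checking the page count before writing avoids producing the zero-page files that otherwise need a later roll-back.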

Pure white cover pages

During the population of Category:Old books from American Libraries, many files were discovered with one or more white blank cover pages. As these are literally pure white blanks, they can be automatically detected. A manually launched slow routine runs through this category, examines the first page of all PDFs not already trimmed, and uses a local download/upload process to delete blank initial pages, resulting in a usable cover to generate better thumbnails and category listings.

Breadcrumbs: This uses pywikibot to generate a tiny 8 pixel wide thumbnail of the first page, which is fast to download and efficiently eliminates the majority of prospects. PIL ImageStat.Stat().extrema is used to test that the pixels are pure white with zero variation; if they are, a 200px wide version is checked as well. This then iterates through initial pages up to a maximum of 7, which are scheduled for processing via PyPDF2. A key disadvantage is that PDFs may be large, and therefore slow to handle. Further, the storage is on local disk, which reduces hardware life for these long running housekeeping jobs.
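
The two-stage check can be sketched without any image library by working on the extrema values directly, that is, one (min, max) pair per colour band as returned by PIL's ImageStat.Stat(image).extrema. The `get_extrema` callable is a hypothetical stand-in for fetching a thumbnail of a given page and width and computing its extrema.

```python
from typing import Callable, List, Tuple

Extrema = List[Tuple[int, int]]  # one (min, max) pair per colour band
MAX_LEADING_PAGES = 7            # per the text: at most 7 initial pages


def is_pure_white(extrema: Extrema) -> bool:
    """True when every band has min == max == 255, i.e. zero variation."""
    return all(tuple(band) == (255, 255) for band in extrema)


def count_blank_leading_pages(get_extrema: Callable[[int, int], Extrema],
                              limit: int = MAX_LEADING_PAGES) -> int:
    """Count consecutive pure white pages from the start of a document.

    get_extrema(page, width) is a hypothetical callable returning the
    extrema for the thumbnail of 1-based `page` at `width` pixels.
    """
    blanks = 0
    for page in range(1, limit + 1):
        # Cheap 8px test first; the 200px version is fetched only if it passes.
        if not is_pure_white(get_extrema(page, 8)):
            break
        if not is_pure_white(get_extrema(page, 200)):
            break
        blanks += 1
    return blanks
```

The count returned is the number of leading pages that would be scheduled for removal.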

Google and JSTOR cover pages

- Category:Internet Archive (Google cover page removed)

- Category:Internet Archive (JSTOR cover page removed)

IA PDFs whose identifier ends in "goog" appear consistently to have a standard Google cover page. The cover page is undesirable, both because it gets rendered as an ugly thumbnail in categories and transclusions, and because it states "Make non-commercial use of the files. We designed Google Book Search for use by individuals, and we request that you use these files for personal, non-commercial purposes." This is misleading in the context of Wikimedia Commons, where filtering of what gets hosted means that only public domain books will be in the collection. As a good digital citizen, this project also ensures that Google is credited on the Commons image page as the digitization sponsor, and the version with the cover page is visible in the displayed file history for anyone who wishes to check it or reuse the original scan.

By reading the file metadata on Commons, files with these cover pages can be targeted efficiently by matching 'carefully scanned by Google' or 'Das "Wasserzeichen" von Google' or 'Google.*?books.google.com', as various alternate language versions exist. Only the first matching pages are removed from the file, with no examination of what may be on later pages. Though scanning is done by reading metadata alone, the page deletion itself requires local download and so is slow and processing intensive. Unfortunately, as it is dependent on non-standard modules, it cannot be run on the WMF cloud. Once Google cover pages are removed, later pure white blank pages may be detected by separate semi-automated housekeeping and further trimmed.

Some language versions of the Google cover page have no associated embedded text in the PDF, possibly because the scanning technology failed to process the language, such as in Cyrillic. Only cover pages with usable OCR text will be removed, as for the others the text filter will find no match.
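
The text filter can be approximated with a single regular expression over the per-page text. This is a sketch under assumptions: `leading_cover_pages` is a hypothetical helper, the dots in the URL are escaped here although the quoted pattern leaves them unescaped, and matching across line breaks is assumed.

```python
import re

# The three patterns quoted in the text, combined into one expression.
GOOGLE_COVER = re.compile(
    r'carefully scanned by Google'
    r'|Das "Wasserzeichen" von Google'
    r'|Google.*?books\.google\.com',
    re.DOTALL,  # assumption: let .*? span line breaks within a page
)


def leading_cover_pages(page_texts) -> int:
    """Count consecutive pages, from the start, whose text matches."""
    count = 0
    for text in page_texts:
        if not GOOGLE_COVER.search(text or ""):
            break  # stop at the first page that is not a cover page
        count += 1
    return count
```

A cover page with no embedded text gives a count of 0, which is why such files are left untouched.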

The first use of this removal method was for PDFs in Category:American Book Company.

Note that the scans may in addition have a distracting Google watermark on every page. At the current time there's no realistic way of removing these damaging watermarks.

In rare cases a Google cover page has been added after the book cover. In these instances the book cover will be deleted.

From March 2021, JSTOR cover pages were included in the same script. JSTOR themselves appear to have released a selection of "Early Journal Content" to the Internet Archive in 2013 (ref jstor_ejc), but the cover pages carry the confusing claim "People may post this content online or redistribute in any way for non-commercial purposes", when the materials are public domain by age and can be licensed accordingly. A check of an exemplar file (published in 1802) shows that at source JSTOR states "This item is openly available as part of an Open JSTOR Collection" but makes no direct claim about copyright. Similarly, on the Internet Archive there is no rights statement, nor any direct claim of copyright. As evidence of a reasonable search, these facts are sufficient to show that potential copyright claims have been respected and analyzed in good faith at the time of upload to Wikimedia Commons.

The JSTOR logo appears on the cover page. It appears to be trademarked, but no copyright claim has been made on the JSTOR website; refer to logos use permissions. In a private message, JSTOR has stated "Yes, we do have rights to the JSTOR logo as our intellectual property" (March 2021). Deleting the cover page removes any ambiguity.

For JSTOR released PDFs, the cover page appears to have been generated by printing a Word document. Once it is removed, there is no useful metadata embedded in the file for the remaining pages to inherit; consequently, only a standard PyPDF2 summary remains.

Detecting country of publication

There is no obvious consistent way of detecting the first country of publication for a scanned book.

The publisher(s) is normally named in the IA metadata, and these can be partly detected by publication city in that publisher text. For example "London : Longman & E. Spettigue". A part of the IA housekeeping script has been added which searches the publisher parameter and adds a relevant category. The matching array looks like:

# Category, insource match

['Books published in Brussels','"publisher = .?Bruxelles"'],

['Books published in London', '"publisher = .?London"'],

['Books published in Edinburgh', '"publisher = .?Edinburgh"'],

['Books published in Boston', '"publisher = .?Boston"'],

['Books published in Oshawa', '"publisher = .?Oshawa"'],

['Books from New York (state)', '"publisher = New York"'],

['Books from New York (state)', '/publisher = .*New York/'],

['Books from New York (state)', '/publisher = .*N\.Y\./'],

['Books published in San Francisco', '/publisher = .*San Francisco/'],

This categorization can be used to deduce whether copyright templates need improvement:

- (good) hastemplate:PD-old-100-expired incategory:"Books published in London"

- (bad) hastemplate:PD-USGov incategory:"Books published in London"

PDFs which are in a subcategory of the city category are skipped. Files with multiple claims like 'Paris, London & New York' will be matched to the first close text match. This is not great, but it's not wrong.
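
The effect of an ordered rule list can be illustrated in plain Python. This is an approximation only: the real matching is done by Commons search (insource), whereas the sketch applies simplified Python regular expressions to a publisher string, and here the winner for a multi-city string is decided by list order. `CITY_RULES` and `category_for_publisher` are hypothetical names.

```python
import re

# (category, pattern) pairs, checked in order; simplified approximations
# of the search strings listed above, not the bot's own matching code.
CITY_RULES = [
    ("Books published in Brussels", re.compile(r"Bruxelles")),
    ("Books published in London", re.compile(r"London")),
    ("Books published in Edinburgh", re.compile(r"Edinburgh")),
    ("Books published in Boston", re.compile(r"Boston")),
    ("Books published in Oshawa", re.compile(r"Oshawa")),
    ("Books from New York (state)", re.compile(r"New York|N\.Y\.")),
    ("Books published in San Francisco", re.compile(r"San Francisco")),
]


def category_for_publisher(publisher: str):
    """Return the first category whose pattern occurs in the publisher text."""
    for category, pattern in CITY_RULES:
        if pattern.search(publisher):
            return category
    return None  # no rule matched; the file is left uncategorized
```

For "Paris, London & New York" this returns the London category, which shows how a file with several publication cities receives only one of them.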

Automatic detection of possible copyright issues

This is an experimental housekeeping task.

The intention is to place PDFs in Category:IA books copyright review automatically suggested when the OCR text contains fairly obvious copyright claims that do not meet expectations, as opposed to claims that are irrelevant due to age.

The search regular expression is:

©|copyright|all rights reserved|Hellenic Navy

When the automatic categorization is made, up to three sample page numbers within the PDF, along with the sentence(s) containing the matches, are included in the edit comment for reference. Page numbers are those given by the Internet Archive with 1 added, which appears to match the display on Commons. Example diff.

The search arbitrarily stops at page 125; this could be changed to the whole book if thought beneficial.

Edit comments look like:

IA books copyright flag text found at: Page 115: Copyright 1992, 1993 Cascade Design Automation CorJXlration . | Page 118: Copyright 1992, 1993 Cascade Design Automation Corporation. | Page 107: Copyright 1992, 1993 Cascade Design Automation Corporation.; Copyright 1992, 1993 Cascade Design Automation Corporation.
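
The flagging step can be sketched as below. Several details are assumptions: the match is made case-insensitive (the example comment matches "Copyright"), the page limit is applied to the displayed page number, sentences are split naively on end punctuation, and `copyright_comment` is a hypothetical name.

```python
import re

FLAG = re.compile(r"©|copyright|all rights reserved|Hellenic Navy",
                  re.IGNORECASE)  # assumption: case-insensitive search
LAST_PAGE = 125   # the search stops at this page
MAX_SAMPLES = 3   # at most three pages are quoted in the edit comment


def copyright_comment(ia_pages: dict):
    """Build an edit comment from {IA page number: OCR text}, or None."""
    samples = []
    for ia_number in sorted(ia_pages):
        display_page = ia_number + 1  # IA number plus one matches Commons
        if display_page > LAST_PAGE or len(samples) == MAX_SAMPLES:
            break
        sentences = re.split(r"(?<=[.!?])\s+", ia_pages[ia_number])
        hits = [s.strip() for s in sentences if FLAG.search(s)]
        if hits:
            samples.append(f"Page {display_page}: " + "; ".join(hits))
    if not samples:
        return None
    return "IA books copyright flag text found at: " + " | ".join(samples)
```

Unlike the real example above, this sketch reports pages in ascending order.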

- Technical breadcrumbs

- This bot task searches the IA OCR text that is embedded in metadata fields rather than the PDF itself. As a result, a Commons API query via Pywikibot can return all pages that have OCR texts with that text content, without the transactional or processing "expense" of downloading each PDF to examine it. In a Pywikibot ImagePage object, this is found by examining page.latest_file_info.metadata. One of the name/value pairs should be text, and this in turn contains more name/value pairs, which are the PDF page numbers assigned by IA and the OCR text for each page. It is unknown how consistently file metadata is created this way for other IA PDF collections, or how normal it is for PDF collections on Commons from other sources. As OCR is never 100% accurate, and this is a non-fuzzy, non-smart filter, the success in finding copyright problems is expected to be something like 80% good. On human review, it is quite likely that matches like "not copyrighted" will be found in the text; the goal is to focus where human review is invested, so this provides an aid to finding copyvios, not a solution. This task relies on a category query, which has to be throttled (pywikibot.config.step = 32) per Phab:T255981.
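
The nested name/value layout described above can be flattened with a few lines of plain Python. The exact shape assumed here, a list of dicts with 'name' and 'value' keys where the 'text' value is itself such a list, is an assumption based on that description, and `pairs_to_dict` and `page_texts` are hypothetical helpers.

```python
def pairs_to_dict(pairs) -> dict:
    """Turn a list of {'name': ..., 'value': ...} items into a dict."""
    return {item["name"]: item["value"] for item in pairs}


def page_texts(metadata) -> dict:
    """Return {IA page number: OCR text}, or {} if no text entry exists."""
    text_pairs = pairs_to_dict(metadata).get("text")
    if not text_pairs:
        return {}
    return {int(number): text
            for number, text in pairs_to_dict(text_pairs).items()}
```

With Pywikibot, the input would be page.latest_file_info.metadata for a file page, and the resulting dict can be searched page by page without downloading the PDF.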

Example residual list

This is a sample of files that may be useful to diagnose why some PDF documents fail a dozen or more upload attempts and have not been uploaded so far. These are relatively rare in the selected collections, on the order of 1 in 1,000.

- IA 15 MB, 1747, Polyhistor, literarius, philosophicus et practicus MHL File:Polyhistor, literarius, philosophicus et practicus (IA b30526267).pdf

- IA 43 MB, 1677, A large dictionary MHL File:A large dictionary. In three parts- I. The English before the Latin ... II. The Latin before the English ... III. The proper names of persons, places (IA b30324476).pdf

- IA 96 MB, 1989, Use of naval force in crises FED File:Bouchard - Use of naval force in crises, a theory of stratified crisis interaction.pdf

- IA 165 MB, 1872, Encyclopædia of chronology LOC File:Encyclopædia of chronology, 1872.djvu

- IA 1,750 MB, 1866, The ferns of British India BHL File:The Ferns of British India, 1866.pdf

Further examples at Commons:IA books/residuals.

Exemplar deletion requests

- Commons:Deletion requests/West-Riding Consolidated Naturalists' Society

- The earliest volumes in The Naturalist are 19th C. but more recent volumes go through to the 21st C. and are not public domain by age. Unfortunately the IA metadata is misleading, giving the default year against each document as the first year of publication of the journal, not the volume.

- Commons:Deletion requests/Files found with insource:reserved by the copyright owner incategory:FEDLINK - United States Federal Collection

- A significant number of documents have a rights statement of copyright reserved. This is often confirmed when examining the document, though in other cases it may be less clear.

- Commons:Deletion requests/Files in Category:An account of the genus Sedum as found in cultivation (1967)

- This is the RHS journal, retrospectively uploaded as part of harmonization, though in this case it resulted in the past Flickr releases being questioned.