File:Earth like planet temperature if distance 1p03 au 1 1.png

Original file (1,210 × 710 pixels, file size: 520 KB, MIME type: image/png)

Captions

Captions

Summary

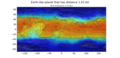

[edit]| DescriptionEarth like planet temperature if distance 1p03 au 1 1.png |

English: Temperature of Earth-like planet if its distance is 1.03 AU |

| Date | |

| Source | Own work |

| Author | Merikanto |

This image is based on Rduplanet simulation and

diku map.

Eduplanet ran ca 10000 rounds. Perihelion 1.02, aphelion 1.04

Eduplanet

Aymeric Spiga

an additional layer which permits a student-friendly use of the LMD generic climate model

https://github.com/aymeric-spiga/eduplanet

Diku Planet Map generator

https://topps.diku.dk/torbenm/maps.msp

Map seed 1, wrinky

Diku map to eduplanet map ... Python script

-

- make image file to eduplanet input map

-

- NOTE: needs one original eduplanet input datafile surface_earth.nc, rename it

-

- albedo, thermal, zMOL

- uses Python 3, numpy, math, matplotlib, scipy, skimage, netCDF4 xarray, PIL, imageio

-

- 05.07.2024 0000.0005

import matplotlib.pyplot as plt

import numpy as np

from scipy.interpolate import interp2d

from skimage.transform import resize

import skimage.transform

- from mpl_toolkits.basemap import shiftgrid

from matplotlib.colors import LightSource

import math as math

import netCDF4 as nc

import xarray as xr

from PIL import Image

from PIL import Image, ImageFilter

from imageio import imwrite

import imageio

- some props of planet!

au=1.03

radius=1

nlats=180

nlons=360

- iname1="./maps1/testmap1.bmp"

iname1="./maps1/Map-1W.bmp"

- you neet some original eduplanet surface to xarray() sabluna

original_surface_earth_name1="./origdata1/surface_earth_origo.nc"

inputsurfacename1="surface00.nc"

outputsurfacename1="surface_earth.nc"

sealevel1=0.46 ## sea level in input dem

exponent1=1 ##if creater, mountains smaller

maxheight1=8200*1.9 # delta h of initial terrain

offset1=0 ## lift terrain up meters

landalbedo1=0.3

oceanalbedo1=0.1

landthermal1=2000

oceanthermal1=18000

- landalbedo1=0.3

- oceanalbedo1=0.1

- landthermal1=2000

- oceanthermal1=18000

def create_dem(inim1):

global exponent1

global offset1

global sealevel1

inimage2=np.copy(inim1)/np.max(inim1)

inimage2=inimage2-sealevel1

inimage2[inimage2<0]=0

dem1=np.copy(inimage2)

dem1=np.power(dem1, exponent1)

dem1=dem1*maxheight1

dem1=dem1+offset1

return(dem1)

def sabluna_netcdf(sabname, inname, outname):

sabds1= xr.open_dataset(sabname)

inds1=xr.open_dataset(inname)

outds1=sabds1

#outds1.albedo.values=np.rot90(sabds1.albedo.values)

#outds1.thermal.values=np.rot90(sabds1.thermal.values)

#outds1.zMOL.values=np.rot90(sabds1.zMOL.values)

#outds1.albedo.values=np.flipud(np.rot90(inds1.albedo.values))

#outds1.thermal.values=np.flipud(np.rot90(inds1.thermal.values))

#outds1.zMOL.values=np.flipud(np.rot90(inds1.zMOL.values))

#outds1.albedo.values=ninds1.albedo.values

#outds1.thermal.values=inds1.thermal.values

#outds1.zMOL.values=inds1.zMOL.values

outds1.albedo.values=np.flipud(inds1.albedo.values)

outds1.thermal.values=np.flipud(inds1.thermal.values)

outds1.zMOL.values=np.flipud(inds1.zMOL.values)

outds1.to_netcdf(outname, format="NETCDF3_CLASSIC")

return(0)

def earthlike_planeta_properties_by_radius(S1, radius):

Sol=1361.5

relCO2=280e-6

relO2=0.21

relN2=1-relCO2-relO2

flux=S1*Sol

mass=math.pow(radius, 3.7)

density=mass/np.power(radius,3)*5.519

vesc=np.sqrt(mass/radius)*11.186

geese=mass/(radius*radius)

geeseg=geese*9.80665

patm1=geese*mass*radius*radius ## estimation

patm2=math.pow(radius, 2.4)

gravity=geeseg

pressure=(patm1+patm2)/2

#pressure=10

#pressure=0.1

#pressure=1

pN2=pressure*relN2

pCO2=pressure*relCO2

pO2=pressure*relO2

#print(" Name ", planetname)

porbital=round(math.pow(au, 3/2),3)

print(" distance au ", au)

print(" orbital period a d ", round(porbital,4), round(porbital*365.25,4))

print(" insol S0 ",round(S1,3) )

print(" insol Wm2 ",round(flux,2) )

print(" mass ",round(mass,2) )

print(" radius ",round(radius,2) )

print(" radius km ",round((radius*6371),2) )

print(" density ",round(density,2) )

print(" vesc ",round(vesc,2) )

print(" gee_e ",round(geese,2) )

print(" gee_ms2 ",round((geese*9.81),2) )

print(" Patm ",round(pressure,6) )

return(0)

def savenetcdf2(outfilename1, outvarname1, map1):

shape1=np.shape(map1)

width1=shape1[1]

height1=shape1[0]

xoutlons1=np.linspace(-179.5,179.5,width1)

xoutlats1=np.linspace(-80.5,80.5, height1)

nlat1=len(xoutlats1)

nlon1=len(xoutlons1)

#indata_set1=indata1

print(outfilename1)

ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')

outlat1 = ncout1.createDimension('lat', nlat1)

outlon1 = ncout1.createDimension('lon', nlon1)

outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))

outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))

outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))

outvalue1.units = 'Unknown'

outlats1[:] = xoutlats1

outlons1[:] = xoutlons1

outvalue1[:, :] =np.flipud(map1[:])

ncout1.close()

return 0

def write_dem_mask_coastline_images_bigger(mappa):

shape1=np.shape(mappa)

height1=shape1[1]

width1=shape1[0]

imout1 = Image.fromarray(mappa)

imageio.imwrite('bmap2.png', mappa.astype(np.uint16))

#imout1.save("map1.png")

#quit(-1)

map3=np.roll(mappa, int(width1/2), axis=1)

#plt.imshow(map3)

#plt.show()

#imout2 = Image.fromarray(map3)

imageio.imwrite('bmap1.png', map3.astype(np.uint16))

mask2=np.copy(mappa)

mask3=np.copy(map3)

mask2=np.where(mask2<1,0, 65535)

mask3=np.where(mask3<1,0, 65535)

#imout3 = Image.fromarray(mask2)

#imout4 = Image.fromarray(mask3)

imageio.imwrite('bmask2.png', mask2.astype(np.uint16))

imageio.imwrite('bmask1.png', mask3.astype(np.uint16))

image1 = Image.open("bmask1.png")

image1 = image1.convert("L")

image1 = image1.filter(ImageFilter.FIND_EDGES)

image1.save("bedge1.png")

image2 = Image.open("bmask2.png")

image2 = image2.convert("L")

image2 = image2.filter(ImageFilter.FIND_EDGES)

image2.save("bedge2.png")

savenetcdf2("bmap1.nc", "z", mappa)

return(0)

def write_dem_mask_coastline_images(mappa):

shape1=np.shape(mappa)

height1=shape1[1]

width1=shape1[0]

imout1 = Image.fromarray(mappa)

imageio.imwrite('map2.png', mappa.astype(np.uint16))

#imout1.save("map1.png")

#quit(-1)

map3=np.roll(mappa, int(width1/2), axis=1)

#plt.imshow(map3)

#plt.show()

#imout2 = Image.fromarray(map3)

imageio.imwrite('map1.png', map3.astype(np.uint16))

mask2=np.copy(mappa)

mask3=np.copy(map3)

mask2=np.where(mask2<1,0, 65535)

mask3=np.where(mask3<1,0, 65535)

#imout3 = Image.fromarray(mask2)

#imout4 = Image.fromarray(mask3)

imageio.imwrite('mask2.png', mask2.astype(np.uint16))

imageio.imwrite('mask1.png', mask3.astype(np.uint16))

image1 = Image.open("mask1.png")

image1 = image1.convert("L")

image1 = image1.filter(ImageFilter.FIND_EDGES)

image1.save("edge1.png")

image2 = Image.open("mask2.png")

image2 = image2.convert("L")

image2 = image2.filter(ImageFilter.FIND_EDGES)

image2.save("edge2.png")

savenetcdf2("map1.nc", "z", mappa)

return(0)

def count_spherical_land_sea_meanz(inmap, inmask):

## NOK!!!

shape1=np.shape(inmap)

height1=shape1[1]

radlats1=np.linspace(-np.pi/2,np.pi, height1)

coslats1=np.cos(radlats1)

seas1=0

all1=0

landmeanz1=0

print(height1)

for iy in range(0,(height1)):

line1=inmap[iy,:]

line2=inmask[iy, :]

lek1=abs(coslats1[iy])

seadd0=np.count_nonzero(line1 == 0)

#print(seadd0)

seadd1=seadd0/len(line1)

seadd2=lek1*seadd1

seas1=seas1+seadd2

all1=all1+lek1

lamez0=line1[line1>0].mean()

if (math.isnan(lamez0)): lamez0=0

lamez1=lamez0*lek1

landmeanz1=landmeanz1+lamez1

landmeanz2=round((landmeanz1/height1),2)

seapercent2=round(((seas1*100)/all1),2)

landpercent2=100-seapercent2

#print(seas1)

#print(all1)

#print(seapercent2)

#print(landpercent2)

return(landpercent2, seapercent2, landmeanz2)

def calc_spatial_mean(xr_da, lon_name="longitude", lat_name="latitude"):

coslat = np.cos(np.deg2rad(xr_da[lat_name]))

return (xr_da * coslat).sum(dim=[lon_name, lat_name]) / (

coslat.sum(lat_name) * len(xr_da[lon_name])

)

def writenetcdf(filename, data_arr, varname):

shap1=np.shape(data_arr)

print(shap1)

#nlats=shap1[0]

#nlons=shap1[1]

nlats=180

nlons=360

print(nlats, nlons)

ncfile = nc.Dataset(filename,mode='w',format='NETCDF3_CLASSIC')

lat_dim = ncfile.createDimension('latitude', nlats) # latitude axis

lon_dim = ncfile.createDimension('longitude', nlons) # longitude axis

lat = ncfile.createVariable('latitude', np.float32, ('latitude',)) ## lat is latitude ?

lat.units = 'degrees_north'

lat.long_name = 'latitude'

lon = ncfile.createVariable('longitude', np.float32, ('longitude',))

lon.units = 'degrees_east'

lon.long_name = 'longitude'

#quit(-1)

data = ncfile.createVariable(varname,np.float64,('latitude','longitude')) # note: unlimited dimension is leftmost

data.units = 'None' # degrees Kelvin

data.standard_name = varname # this is a CF standard name

nlats = len(lat_dim); nlons = len(lon_dim);

lat[:] = -90. + (180./nlats)*np.arange(nlats) # south pole to north pole

lon[:] = (180./nlats)*np.arange(nlons) # Greenwich meridian eastward

#data_arr = np.random.uniform(low=280,high=360,size=(nlats,nlons))

data[:,:] = data_arr # Appends data along unlimited dimension

#print(ncfile)

ncfile.close(); print('*')

return(0)

- 33

- finame='./maps/creta.nc'

- ncin = nc.Dataset(finame)

- indem=ncin['z'][:,:]

- plt.imshow(indem)

- plt.show()

- Image.open(image).convert('L').convert('P')

- input greyscale gif, donjon map generator

inimage0=Image.open(iname1).convert('L')

inimage01=np.array(inimage0) ## big

inimage1 = skimage.transform.resize(inimage01, (nlats,nlons)) ## smaller

dem1=create_dem(inimage1)

bdem1=create_dem(inimage01)

- plt.imshow(dem1)

- plt.show()

- quit(-1)

- dem1=np.zeros((nlats, nlons))

- albedo=np.copy(dem1)

- zMOL=np.copy(dem1)

- thermal=np.copy(dem1)

dem2=np.rot90(np.copy(dem1))

mask1=np.copy(dem2)

topo=np.copy(dem2)

topo[topo<0]=0

ls = LightSource(azdeg=0,altdeg=65)

- shade data, creating an rgb array.

shade1= ls.shade(dem1,plt.cm.gray)

mask1[mask1<0]=0

mask1[mask1>0]=1

zMOL=np.copy(topo)

zMOL_data=zMOL/1000

albedo_data=np.copy(mask1)

- landalbedo1=0.3

- oceanalbedo1=0.1

- landthermal1=2000

- oceanthermal1=18000

albedo_data=np.where(albedo_data==0,oceanalbedo1,landalbedo1 )

thermal_data=np.copy(mask1)

thermal_data=np.where(thermal_data==0,oceanthermal1,landthermal1 )

zMOL=np.rot90(zMOL)

zMOL_data=np.rot90(zMOL_data)

thermal_data=np.rot90(thermal_data)

albedo_data=np.rot90(albedo_data)

zMOL=np.fliplr(zMOL)

zMOL_data=np.fliplr(zMOL_data)

thermal_data=np.fliplr(thermal_data)

albedo_data=np.fliplr(albedo_data)

- plt.imshow(zMOL_data)

- plt.show()

- quit(-1)

- albedo=0.08

- zMOL=0.0

- thermal=18000

- Luo longitude ja latitude koordinaatit

longitude = np.linspace(-179.5, 179.5, 360)

latitude = np.linspace(-89.5, 89.5, 180)

cosine_latitudes=np.cos(np.radians(latitude))

leny=len(latitude)

lenx=len(longitude)

print(leny)

landpercent1=0

meanlandheight1=0

for iy in range(0, leny):

c1=cosine_latitudes[iy]

pe0=np.count_nonzero(mask1[:,iy] ==1)

he1=np.mean(topo[:, iy])*c1

pe1=pe0/lenx

pe2=c1*pe1

landpercent1=landpercent1+pe2

meanlandheight1=meanlandheight1+he1

meanlandheight1=round(meanlandheight1/leny,1)

landpercent1=np.round( ( (100*landpercent1)/leny),2)

oceanpercent1=100-landpercent1

maxlandheight1=np.round(np.max(topo))

print(" Land % ", landpercent1)

print(" Ocean % ", oceanpercent1)

print(" Mean land height ", meanlandheight1)

print(" Max land height ", maxlandheight1)

plt.imshow(dem1, cmap="gist_earth")

plt.imshow(shade1, alpha=0.5)

plt.show()

- landp2, oceanp2, landz2=count_spherical_land_sea_meanz(topo, mask1)

write_dem_mask_coastline_images(dem1)

write_dem_mask_coastline_images_bigger(bdem1)

- print(landp2, oceanp2, landz2)

- albedo_array = xr.DataArray(

- albedo_data,

- coords={'longitude': longitude, 'latitude': latitude},

- dims=['longitude', 'latitude'],

- name='albedo'

- )

- thermal_array = xr.DataArray(

- thermal_data,

- coords={'longitude': longitude, 'latitude': latitude},

- dims=['longitude', 'latitude'],

- name='thermal'

- )

- zmol_array = xr.DataArray(

- zMOL_data,

- coords={'longitude': longitude, 'latitude': latitude},

- dims=['longitude', 'latitude'],

- name='zMOL'

- )

albedo_array = xr.DataArray(

albedo_data,

coords={'longitude': longitude, 'latitude': latitude},

dims=['latitude', 'longitude'],

name='albedo'

)

thermal_array = xr.DataArray(

thermal_data,

coords={'longitude': longitude, 'latitude': latitude},

dims=['latitude', 'longitude'],

name='thermal'

)

zmol_array = xr.DataArray(

zMOL_data,

coords={'longitude': longitude, 'latitude': latitude},

dims=['latitude', 'longitude'],

name='zMOL'

)

dataset = xr.Dataset({

'albedo': albedo_array,

'thermal': thermal_array,

'zMOL': zmol_array

})

- dataset = xr.Dataset({

- 'zMOL': zmol_array

- })

dataset.to_netcdf(inputsurfacename1, format='NETCDF3_CLASSIC')

sabluna_netcdf(original_surface_earth_name1, inputsurfacename1,outputsurfacename1 )

- sabluna_netcdf("surface00.nc", "surface00.nc", "surface_earth.nc")

S1=1/(au*au)

earthlike_planeta_properties_by_radius(S1, radius)

print(".")

Python3 map plotting

-

- plot eduplanet output climate data

-

- python3

-

- 05.07.2024 0000.0007b

-

import xarray as xr

import matplotlib.pyplot as plt

from matplotlib.colors import LightSource

import numpy as np

import math

import scipy

import skimage

from sklearn.linear_model import LinearRegression

from sklearn import svm

from sklearn.ensemble import RandomForestRegressor

from sklearn.preprocessing import LabelEncoder

from sklearn.preprocessing import StandardScaler

from sklearn.svm import SVR

from pygam import LinearGAM, s

- import cv2

from scipy.interpolate import RectBivariateSpline

import numpy as np

from scipy.interpolate import RectBivariateSpline

from scipy.interpolate import griddata

def interpolate_raster(inras, shape2, method="cubic"):

## seems nok

# Create meshgrid for sabras

#method="cubic"

shape1=np.shape(inras)

#print("shape1 ", shape1)

#quit(-1)

tanx=shape2[1]

soy=shape1[0]

sox=shape1[1]

#sablon_grid, sablat_grid = np.meshgrid(, sablats)

grid_x, grid_y = np.mgrid[0:1:360j, 0:1:360j]

#grid_x, grid_y = np.mgrid[0:1:tanx, 0:1:tany]

inx=np.linspace(0,1,sox)

iny=np.linspace(0,1,soy)

xs1=np.repeat(inx,soy).reshape(sox,soy)

xs2=np.ravel(xs1)

ys1=np.repeat(iny,sox)

ys1=np.reshape(ys1, (soy,sox))

ys1=np.rot90(ys1)

ys2=np.ravel(ys1)

points=np.dstack([xs2,ys2])

points=points[0, :, :]

boints2=(inx,iny)

values2=inras

values=inras.ravel()

#print("kkk")

#print(np.shape(values))

#print(np.shape(points))

#quit(-1)

#values=np.random(17*17)

# Flatten the grids for interpolation

#points = np.array([(lon, lat) for lon in biglons for lat in biglats])

#values = bigras.flatten()

# Perform the interpolation

#output_raster = griddata(points, values, (grid_x, grid_y), method=method)

output_r0 = griddata(points, values, (grid_y, grid_x), method=method)

#output_r0 = interpn(boints2, values2, (361,361), method=method)

#print(np.max(grid_y))

output_r1=np.rot90(output_r0)

output_r2=skimage.transform.resize(output_r1, shape2, anti_aliasing=True)

#output_raster=np.reshape(output_r2, shape2)

output_raster=np.flipud(output_r2)

return (output_raster)

def find_nearest(array, value):

"""Find the nearest value in an array."""

idx = (np.abs(array - value)).argmin()

return idx

def get_sized_raster(sabras, sablons, sablats, bigras, biglons, biglats):

"""

Generate a resized raster sabras from bigras by mapping sablons and sablats

values to the nearest biglons and biglats values in bigras.

Parameters:

sabras (np.ndarray): Template raster array to fill.

sablons (np.ndarray): Longitudes corresponding to sabras.

sablats (np.ndarray): Latitudes corresponding to sabras.

bigras (np.ndarray): Source raster array.

biglons (np.ndarray): Longitudes corresponding to bigras.

biglats (np.ndarray): Latitudes corresponding to bigras.

Returns:

np.ndarray: Resized raster with values from bigras mapped according to sablons and sablats.

"""

# Get the shape of the template raster

sab_shape = sabras.shape

# Initialize the output raster

output_raster = np.zeros(sab_shape)

for i in range(sab_shape[0]):

for j in range(sab_shape[1]):

# Get the longitude and latitude for the current sabras cell

lon = sablons[j]

lat = sablats[i]

# Find the nearest bigras indices

lon_idx = find_nearest(biglons, lon)

lat_idx = find_nearest(biglats, lat)

# Assign the value from bigras to the output raster

output_raster[i, j] = bigras[lat_idx, lon_idx]

return output_raster

def get_sized_raster000(sabras, sablons, sablats, bigras, biglons, biglats):

## nok

smallras=np.copy(sabras)*0

shape1=np.shape(sabras)

shape2=np.shape(bigras)

ny=shape2[0]

nx=shape2[1]

my=shape1[0]

mx=shape1[1]

for yi in range(0,my,1):

lat1=sablats[yi]

if(lat1<=-89): latdex=ny-1

if(lat1>=89): latdex=0

else:

#print("*")

#quit(-1)

latdex0 = np.where(biglats >= lat1)[0]

latdex=latdex0[0]

#print(lat1,latdex0)

#print(latdex)

#quit(-1)

#print(lat1, latdex)

#quit(-1)

for xi in range(0,mx,1):

lon1=sablons[xi]

if(lon1<=-179): londex=0

if(lon1>=170): londex=nx-1

else:

londex0 = np.where(biglons >= lon1)[0]

londex=londex0[0]

#londex = np.where(biglons >= lon1)[0]

#print(yi,xi, londex, latdex)

#print(lon1, lat1)

#print(latdex)

smallras[yi, xi]=bigras[latdex, londex]

print(np.shape(bigras) )

plt.imshow(smallras, cmap="coolwarm")

#plt.imshow(sabras, cmap="coolwarm")

#plt.imshow(smallras, cmap="coolwarm")

plt.show()

quit(-1)

return(smallras)

def downscale_machinelearn2(temp, inputy, slons, slats, blons, blats):

## NOK

#temp_min=np.min(temp)

#temp_max=np.max(temp)

#height_min=np.min(dem_data)

#height_max=np.max(dem_data)

bvar1=inputy[0]

avar1=inputy[1]

cvar1=inputy[2]

dvar1=inputy[3]

shape1=np.shape(temp)

shape2=np.shape(bvar1)

outnx=shape2[1]

outny=shape2[0]

#plt.imshow(y1)

temptar=get_sized_raster(bvar1, blons, blats, temp, slons, slats)

#plt.imshow(temptar)

#plt.show()

#quit(-1)

bvar0=get_sized_raster(temp, slons, slats, bvar1, blons, blats)

avar0=get_sized_raster(temp, slons, slats, avar1, blons, blats)

cvar0=get_sized_raster(temp, slons, slats, cvar1, blons, blats)

dvar0=get_sized_raster(temp, slons, slats, dvar1, blons, blats)

#temptar=skimage.transform.resize(temp, np.shape(inputy[0]) )

#bvar1=inputy[0]

#bvar0=skimage.transform.resize(inputy[0], (17,17))

#avar1=inputy[1]

#avar0=skimage.transform.resize(inputy[1], (17,17))

#cvar1=inputy[2]

#cvar0=skimage.transform.resize(inputy[2], (17,17))

#dvar1=inputy[3]

#dvar0=skimage.transform.resize(inputy[3], (17,17))

ivar1=np.dstack([bvar1, avar1])

ivar0=np.dstack([ bvar0, avar0])

#ivar1=np.dstack([dvar1, cvar1, bvar1, avar1])

#ivar0=np.dstack([dvar0, cvar0, bvar0, avar0])

ivar1=np.dstack([dvar1, bvar1, avar1])

ivar0=np.dstack([dvar0, bvar0, avar0])

#X2 = bvar1.flatten().reshape(-1, 1)

#X = bvar0.flatten().reshape(-1, 1)

#X2 = ivar1.flatten().reshape(-1, 1)

#X = ivar0.flatten().reshape(-1, 1)

X2 = ivar1.flatten().reshape(-1, 3)

X = ivar0.flatten().reshape(-1, 3)

#y = temp_interpolated.flatten()

y = temp.flatten()

scaler = StandardScaler().fit(X)

X_train_scaled = scaler.transform(X)

scaler2 = StandardScaler().fit(X2)

X2_test_scaled = scaler.transform(X2)

svr_lin = SVR(kernel = 'linear')

svr_rbf = SVR(kernel = 'rbf')

svr_poly = SVR(kernel = 'poly')

#svr_lin.fit(X_train_scaled, y)

#svr_rbf.fit(X_train_scaled, y)

#svr_poly.fit(X_train_scaled, y)

#svr_poly.fit(X, y)

#model=SVR(kernel = 'linear') ##bestis, but slow

##model=SVR(kernel = 'poly') # nok

#model=SVR(kernel = 'rbf') #3nok

#y_test_pred = svr_rbf.predict(X2_test_scaled)

#y_test_pred = svr_poly.predict(X2)

#Y2=y_test_pred

model = LinearRegression()

#model = RandomForestRegressor(n_estimators=30, random_state=0, oob_score=True)

#model = LinearGAM(s(0))

#model = LinearGAM()

model=model.fit(X, y)

Y2 = model.predict(X2)

tempds = Y2.reshape(outny, outnx)

tout=tempds

#tout=temptar

#plt.imshow(tout)

#plt.show()

#quit(-1)

return(tout )

def downscale_machinelearn_base(temp, inputy):

#from pygam import LinearGAM, s

#temp_min=np.min(temp)

#temp_max=np.max(temp)

#height_min=np.min(dem_data)

#height_max=np.max(dem_data)

temptar=skimage.transform.resize(temp, np.shape(inputy[0]) )

bvar1=inputy[0]

bvar0=skimage.transform.resize(inputy[0], (17,17))

avar1=inputy[1]

avar0=skimage.transform.resize(inputy[1], (17,17))

cvar1=inputy[2]

cvar0=skimage.transform.resize(inputy[2], (17,17))

dvar1=inputy[3]

dvar0=skimage.transform.resize(inputy[3], (17,17))

ivar1=np.dstack([bvar1, avar1])

ivar0=np.dstack([ bvar0, avar0])

#ivar1=np.dstack([dvar1, cvar1, bvar1, avar1])

#ivar0=np.dstack([dvar0, cvar0, bvar0, avar0])

ivar1=np.dstack([dvar1, bvar1, avar1])

ivar0=np.dstack([dvar0, bvar0, avar0])

#X2 = bvar1.flatten().reshape(-1, 1)

#X = bvar0.flatten().reshape(-1, 1)

#X2 = ivar1.flatten().reshape(-1, 1)

#X = ivar0.flatten().reshape(-1, 1)

X2 = ivar1.flatten().reshape(-1, 3)

X = ivar0.flatten().reshape(-1, 3)

#y = temp_interpolated.flatten()

y = temp.flatten()

scaler = StandardScaler().fit(X)

X_train_scaled = scaler.transform(X)

scaler2 = StandardScaler().fit(X2)

X2_test_scaled = scaler.transform(X2)

svr_lin = SVR(kernel = 'linear')

svr_rbf = SVR(kernel = 'rbf')

svr_poly = SVR(kernel = 'poly')

#svr_lin.fit(X_train_scaled, y)

#svr_rbf.fit(X_train_scaled, y)

#svr_poly.fit(X_train_scaled, y)

#svr_poly.fit(X, y)

#model=SVR(kernel = 'linear') ##bestis

#model=SVR(kernel = 'poly')

#model=SVR(kernel = 'rbf')

#y_test_pred = svr_rbf.predict(X2_test_scaled)

#y_test_pred = svr_poly.predict(X2)

#Y2=y_test_pred

model = LinearRegression()

#model = RandomForestRegressor(n_estimators=30, random_state=0, oob_score=True)

#model = LinearGAM(s(0))

#model = LinearGAM()

model=model.fit(X, y)

Y2 = model.predict(X2)

tempds = Y2.reshape(180, 360)

tout=tempds

#tout=temptar

return(tout )

def dstest2(lons, lats, tees, altitudes, dem, lonsb, latsb):

print(np.shape(tees))

print(np.array(lons))

print(np.array(lats))

print(np.array(altitudes))

print(lonsb)

print(latsb)

print(tees)

accutemp = np.copy(dem) * 0

shape2 = np.shape(dem)

mx2 = shape2[1]

my2 = shape2[0]

# Varmistetaan, että lons ja lats ovat nousevassa järjestyksessä

lons1 = np.array(lons)

lats1 = np.array(lats)

lons1_sorted_indices = np.argsort(lons1)

lats1_sorted_indices = np.argsort(lats1)

lons1 = lons1[lons1_sorted_indices]

lats1 = lats1[lats1_sorted_indices]

tees_sorted = tees[:, lats1_sorted_indices, :][:, :, lons1_sorted_indices]

altitudes1 = altitudes

# Luodaan bikubiset interpolointifunktiot jokaiselle korkeudelle

interpolators = []

for k in range(len(altitudes1)):

interpolator = RectBivariateSpline(lats1, lons1, tees_sorted[k, :, :])

interpolators.append(interpolator)

for iy in range(my2):

for ix in range(mx2):

lat2 = latsb[iy]

lon2 = lonsb[ix]

alt2 = dem[iy, ix]

# Etsi lähimmät korkeudet

altitudedex2 = np.searchsorted(altitudes1, alt2, side='left')

altitudedex1 = altitudedex2 - 1

# Korjataan indeksit olemaan rajojen sisällä

altitudedex1 = np.clip(altitudedex1, 0, len(altitudes1) - 2)

altitudedex2 = np.clip(altitudedex2, 1, len(altitudes1) - 1)

# Lasketaan interpolointifunktioilla lämpötilat jokaiselle korkeudelle

ta = interpolators[altitudedex1](lat2, lon2)[0, 0]

tb = interpolators[altitudedex2](lat2, lon2)[0, 0]

# Lineaarinen interpolointi korkeuden perusteella

baseadelta = altitudes1[altitudedex2] - altitudes1[altitudedex1]

adelta = alt2 - altitudes1[altitudedex1]

if baseadelta != 0:

temp = ((baseadelta - adelta) / baseadelta) * ta + (adelta / baseadelta) * tb

else:

temp = ta # Jos baseadelta on nolla (epätodennäköistä)

accutemp[iy, ix] = temp

return accutemp

def interpola_tosize(indata, shape2):

kind1='cubic'

x = np.linspace(0, 1, indata.shape[1])

y = np.linspace(0, 1, indata.shape[0])

xx, yy = np.meshgrid(x, y)

x_new = np.linspace(0, 1, shape2[1])

y_new = np.linspace(0, 1, shape2[0])

xx_new, yy_new = np.meshgrid(x_new, y_new)

outdata = scipy.interpolate.griddata((xx.ravel(), yy.ravel()), indata.ravel(),(xx_new, yy_new), method=kind1)

return(outdata)

def downscale_temperature_naive(temp1, dem2):

## constant lapse rate assumption ...

#tempcoeff1=6.5/1000

tempcoeff1=6.5/1000

size1=np.shape(temp1)

size2=np.shape(dem2)

print(size1, size2)

#dem1=skimage.transform.resize(dem2, (17,17))

#temp3=skimage.transform.resize(temp1, (180,360))

#dem3=skimage.transform.resize(dem1, (180,360))

dem1=interpola_tosize(dem2, size1)

temp3=interpola_tosize(temp1, size2)

dem3=interpola_tosize(dem1, size2)

deltat_dem3=(dem2-dem3)*tempcoeff1

#plt.imshow(deltat_dem3)

#plt.show()

tempds=temp3-deltat_dem3

inmax1=np.max(temp1)

inmin1=np.min(temp1)

indelta1=inmax1-inmin1

smax1=np.max(tempds)

smin1=np.min(tempds)

sdelta1=smax1-smin1

tempcoff1=indelta1/sdelta1

tempds2=(tempds-smin1)*tempcoff1+inmin1

tempds3=(tempds*9+tempds2*1)/10

return(tempds3 )

def interpola(indata):

kind1='cubic'

x = np.linspace(0, 1, indata.shape[1])

y = np.linspace(0, 1, indata.shape[0])

xx, yy = np.meshgrid(x, y)

x_new = np.linspace(0, 1, 360)

y_new = np.linspace(0, 1, 180)

xx_new, yy_new = np.meshgrid(x_new, y_new)

outdata = scipy.interpolate.griddata((xx.ravel(), yy.ravel()), indata.ravel(),(xx_new, yy_new), method=kind1)

return(outdata)

- 3

- main program .....

plotsize=(180,360)

iname1="./simu_103/diagfi.nc"

- iname1="./simu_103/resultat.nc"

- imapname1="./bmap1.nc"

imapname1="./map1.nc"

loca1=4500

loca2=loca1+382

- locs1=np.arange(8700,3730)

dsin1 = xr.open_dataset(iname1, decode_times=False )

dsmap1 = xr.open_dataset(imapname1, decode_times=False )

map0=dsmap1.z.values

map1=np.flipud(map0)

ls = LightSource(azdeg=0,altdeg=65)

- shade data, creating an rgb array.

shade1= ls.shade(map1,plt.cm.gray)

- print(dsin1)

- tsurf=dsin1.tsurf.values[loc1,:,:]

tass=np.array(dsin1.tsurf.values[loca1:loca2,:,:])

teess=dsin1.temp.values[loca1:loca2,:,:,:]

print (np.shape(tass))

tas=np.mean(tass, axis=0)

tees=np.mean(teess, axis=0)

print (np.shape(tas))

- plt.imshow(tas)

- plt.show()

- quit(-1)

shape2=np.shape(map1)

lats=np.array(dsin1.latitude)

lons=np.array(dsin1.longitude)

altitudes=np.array(dsin1.altitude)

lonsb=np.linspace(-179.5,179.5,360)

latsb=np.linspace(-89.5,89.5,180)

dem=map1

- demkm=np.flipud(dem)/1000

demkm=dem/1000

- smalldem=get_small_raster(tas, lons, lats,map1, lonsb, latsb)

- plt.imshow(smalldem)

- plt.show()

- quit(-1)

- latras=np.copy(tas)*0

- lonras=np.copy(tas)*0

latras=np.repeat(lats, len(lons))

latras=np.reshape(latras, np.shape(tas))

lonras=np.repeat(lons, len(lats))

lonras=np.rot90(np.reshape(lonras, np.shape(tas)))

latrasb=np.repeat(latsb, len(lonsb))

latrasb=np.flipud(np.reshape(latrasb, np.shape(map1)))

lonrasb=np.repeat(lonsb, len(latsb))

- lonrasb=np.flipud(np.rot90(np.reshape(lonrasb, np.shape(map1))))

lonrasb=np.flipud((np.reshape(lonrasb, np.shape(map1))))

coslatsb=np.cos(np.radians(latrasb))

- print (np.shape(coslatsb))

- quit(-1)

inputy=[ coslatsb, dem, latrasb, lonrasb]

- quit(-1)

- tasa=interpola_tosize(tas, np.shape(map1))-273.15

tasb=interpola_tosize(tees[0], np.shape(map1))-273.15

tasa=interpolate_raster(tas, shape2, method="cubic")

- accutas=downscale_machinelearn(tas, np.cos(np.radians(latrasb)))

- accutas=downscale_machinelearn(tas, dem)

- accutas=downscale_machinelearn(tas, np.abs(latrasb) )

- testing NOK

tashk=downscale_machinelearn2(tas, inputy, lons, lats, lonsb, latsb)

tash=tashk-273.15

tasgk=downscale_machinelearn_base(tas, inputy )

tasg=tasgk-273.15

- plt.imshow(dem)

- plt.imshow(tasa)

- plt.show()

- print("DEBUG BREAK")

- quit(-1)

- air temp

tasek=dstest2(lats,lons,tees,altitudes,demkm,lonsb,latsb)

tase=tasek-273.15

- plt.imshow(tasf)

- plt.show()

- quit(-1)

coslats=np.cos(np.radians(lats))

tasmeans1=np.mean(tas, axis=1) # seems to be ok

print(tasmeans1)

tasmeans2=tasmeans1*coslats

tasmean1=np.mean(tasmeans1)

tasmean2=round((tasmean1-273.15), 2)

shape1=np.shape(tas)

- print(shape1)

tasmax1=round((np.max(tas)-273.15),2)

tasmin1=round((np.min(tas)-273.15),2)

print(tasmean2)

print(tasmin1)

print(tasmax1)

tasc=tas-273.15

- tasd=skimage.transform.resize(tasc, plotsize)

- tasd = cv2.resize(tasc, dsize=plotsize, interpolation=cv2.INTER_CUBIC)

- tasd=interpola(tasc)

- tasd=downscale_temperature_naive(tasc, map1)

tasdk=downscale_temperature_naive(tas, map1)

tasd=tasdk-273.15

plotvar=tasd

plotvar=tase

plotvar=tasg

plotvar=tash

plotvar=(tase+tasd+tash)/3

- plotmap=np.flipud(dem)

plotmap=np.copy(dem)

- plotmap=np.flipud(plotmap)

- plotvar=tasa ## non downscaled interpoleted

- plotvar=tasb

plotvark=plotvar+273.15

coslatsb=np.cos(np.radians(latsb))

btasmeans1=np.mean(plotvark, axis=1) # seems to be ok

print(btasmeans1)

tasmeans2=btasmeans1*coslatsb

btasmean1=np.mean(tasmeans1)-273.15

print(" DS mean temp ", round(btasmean1,2) )

- plotvar=tasg ## downscaled surf

- plt.imshow(plotmap, origin='upper', cmap="gist_earth", interpolation="none", extent=[-180,180,-90,90])

plt.imshow(plotvar, origin='upper', cmap="seismic", interpolation="none",vmin=-50, vmax=50, extent=[-180,180,-90,90])

- plt.imshow(map1)

- plt.imshow(np.exp(shade1),origin="upper", cmap="Blues",alpha=0.7,extent=[-180,180,-90,90] )

- plt.imshow(plotvar, origin="upper", cmap="Spectral_r",alpha=0.6, interpolation="none",vmin=-40, vmax=40, extent=[-180,180,-90,90])

plt.contour(plotmap, origin="upper", levels=[0], colors=["k"], lw=30, alpha=0.9, extent=[-180,180,-90,90])

cs=plt.contour(plotvar, origin="upper", levels=[-120,-100,-80,-60,-40,-30,-20,-10,0,5,10,15,20,25,30,35,40], colors=["#0f0000"], alpha=0.7, extent=[-180,180,-90,90])

plt.clabel(cs, fontsize=18)

plt.suptitle("Earth-like planet that has distance 1.03 AU", fontsize=22)

plt.title(" Mean temperature "+str(tasmean2)+" °C", fontsize=18)

plt.xticks(fontsize=18)

plt.yticks(fontsize=18)

plt.grid(linestyle=":", color="k", lw=0.2)

- plt.legend(fontsize=18)

- plt.contour(map1, levels=[5000], color="brown")

plt.show()

Simulation init settings "reglages_init.txt"

Land % 29.18

Ocean % 70.82

Mean land height 775.6

Max land height 8413.0

map1.nc

bmap1.nc

distance au 1.03

orbital period a d 1.045 381.6862

insol S0 0.943

insol Wm2 1283.34

mass 1.0

radius 1

radius km 6371

density 5.52

vesc 11.19

gee_e 1.0

gee_ms2 9.81

Patm 1.0

Simulation run settings "reglages_run.txt2

- Nombre de jours d'intégration

nday = 18250

- Periode d'appel a la physique (en pas)

iphysiq = 1

- Fréquence (en pas) de l'écriture du fichier de résultat

- -- nombre de sorties total = nday*day_step/ecritphy

- -- nombre de sorties / jour = day_step/ecritphy

ecritphy = 18

- Nombre de pas d'intégration dynamique par jour

day_step = 1

- Coefficient d'absorption IR en m2/kg

- [--> voir notations TD : kappa = k * r_X]

kappa_IR = 5.0e-5

- Flux stellaire incident instantané à 1 unité astronomique (i.e. distance Terre-Soleil)

- exemple:

- - 1366.0 W m-2 (Sol today)

- - 1024.5 W m-2 (Sol today x 0.75 = weak early Sun)

Fat1AU = 1366.0

- Coefficient d'absorption dans le VISIBLE en m2/kg

- épaisseur optique équivalente : tau_surf = kappa_VI * P_s / g

kappa_VI = 5.e-20

callgasvis = .false.

- Cycle diurne ?

- -- false : flux solaire en moyenne diurne

- -- true : flux solaire varie selon l'heure locale

diurnal = .false.

- Profondeur (en m) de la premiere couche du sous-sol

lay1_soil = 3.e-2

- -----------------

- Model water cycle

water = .true.

- Model water cloud formation

watercond = .true.

- Model water precipitation (including coagulation etc.)

waterrain = .true.

- Use simple precipitation scheme?

precip_scheme=4

- multiplicative constant in Boucher 95 precip scheme

Cboucher=0.6

- Include hydrology ?

hydrology = .true.

- active runoff ?

activerunoff = .true.

- H2O snow (and ice) albedo ?

albedosnow = 0.65

- Maximum sea ice thickness ?

maxicethick = 10.

- Freezing point of seawater (degrees C) ?

Tsaldiff = 0.0

- ------------------------------------------------------------------

- Parameters for Earth dyncore (16x16 or 32x32 grid)

- ------------------------------------------------------------------

ecritphy = 480

day_step = 240

diurnal = .true.

iphysiq = 5

- Periode pour l'appel a Matsuno (en pas)

iperiod = 5

Licensing

[edit]- You are free:

- to share – to copy, distribute and transmit the work

- to remix – to adapt the work

- Under the following conditions:

- attribution – You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

File history

Click on a date/time to view the file as it appeared at that time.

| Date/Time | Thumbnail | Dimensions | User | Comment | |

|---|---|---|---|---|---|

| current | 12:20, 5 July 2024 |  | 1,210 × 710 (520 KB) | Merikanto (talk | contribs) | Update |

| 11:29, 3 July 2024 |  | 1,565 × 780 (760 KB) | Merikanto (talk | contribs) | Update | |

| 10:47, 2 July 2024 |  | 1,357 × 665 (692 KB) | Merikanto (talk | contribs) | Update | |

| 15:34, 1 July 2024 |  | 1,292 × 680 (698 KB) | Merikanto (talk | contribs) | Update of code | |

| 10:36, 1 July 2024 |  | 1,285 × 675 (729 KB) | Merikanto (talk | contribs) | Update: attempt to downscale accurate | |

| 09:51, 1 July 2024 |  | 1,585 × 793 (1,018 KB) | Merikanto (talk | contribs) | Update of layout | |

| 08:44, 1 July 2024 |  | 1,470 × 716 (654 KB) | Merikanto (talk | contribs) | Cubic interpolation | |

| 06:12, 1 July 2024 |  | 1,359 × 655 (600 KB) | Merikanto (talk | contribs) | Uploaded own work with UploadWizard |

You cannot overwrite this file.

File usage on Commons

There are no pages that use this file.

Metadata

This file contains additional information such as Exif metadata which may have been added by the digital camera, scanner, or software program used to create or digitize it. If the file has been modified from its original state, some details such as the timestamp may not fully reflect those of the original file. The timestamp is only as accurate as the clock in the camera, and it may be completely wrong.

| Software used | |

|---|---|

| Horizontal resolution | 39.37 dpc |

| Vertical resolution | 39.37 dpc |