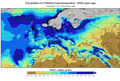

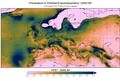

File:Chelsa trace europe ptopet 18000bp 1.png

From Wikimedia Commons, the free media repository

Jump to navigation

Jump to search

Size of this preview: 800 × 538 pixels. Other resolutions: 320 × 215 pixels | 640 × 430 pixels | 1,024 × 688 pixels.

Original file (1,024 × 688 pixels, file size: 635 KB, MIME type: image/png)

File information

Structured data

Captions

Captions

P to PET in Europe, at 20000 years ago

Summary

[edit]| DescriptionChelsa trace europe ptopet 18000bp 1.png |

English: P to PET in Europe, at 20000 years ago, Calculated from CHELSA dataset. |

| Date | |

| Source | Own work |

| Author | Merikanto |

| Camera location | 45° 00′ 00″ N, 0° 00′ 00″ E | View this and other nearby images on: OpenStreetMap |

|---|

Source of data: CHELSA

Karger, D.N., Conrad, O., Böhner, J., Kawohl, T., Kreft, H., Soria-Auza, R.W., Zimmermann, N.E., Linder, P., Kessler, M. (2017). Climatologies at high resolution for the Earth land surface areas. Scientific Data. 4 170122. https://doi.org/10.1038/sdata.2017.122 Karger D.N., Conrad, O., Böhner, J., Kawohl, T., Kreft, H., Soria-Auza, R.W., Zimmermann, N.E,, Linder, H.P., Kessler, M.. Data from: Climatologies at high resolution for the earth’s land surface areas. Dryad Digital Repository.http://dx.doi.org/doi:10.5061/dryad.kd1d4

########################################################

##

## ## python 3/windows 10

##

## chelsa climate data downloader and downscaling test program

##

## attempt to downscale current gts5 to past gts5

## ## also externel raster and rgb raster downscaler test

## # suppors only chelsa v 1.0, 1.21

##

## WARNING many datasets included, not all

## WARNING if chelsa develops, this code will deprecate fast

##

##

## 6.3.2021

## 0000.0028

##

##

##

import numpy as np

import matplotlib.pyplot as plt

import scipy

from osgeo import gdal

from osgeo import osr

from osgeo import gdalconst

import requests

import os

import glob

from numpy import dtype

import rasterio

from rasterio.plot import show

from rasterio.plot import show_hist

from rasterio.mask import mask

from rasterio.windows import Window

from mpl_toolkits.basemap import shiftgrid

from mpl_toolkits.basemap import Basemap

from shapely.geometry import box

from shapely import speedups

speedups.disable()

import geopandas as gpd

from fiona.crs import from_epsg

import pycrs

import json

import skimage.transform as st

from sklearn.linear_model import LinearRegression

from sklearn.preprocessing import PolynomialFeatures

from sklearn.pipeline import make_pipeline

from sklearn.ensemble import RandomForestRegressor

from sklearn.preprocessing import StandardScaler

from sklearn.model_selection import train_test_split

from sklearn import svm, metrics

from pygam import LogisticGAM

from pygam import LinearGAM

from scipy.ndimage import gaussian_filter

import netCDF4

from scipy import exp, mgrid, signal, row_stack, column_stack, tile, misc

from osgeo import gdal

import sys

from struct import *

import pysolar

import pyeto

from climlab.solar.orbital import OrbitalTable

from climlab.solar.insolation import daily_insolation

import calendar

import datetime

from datetime import datetime, timezone

#import pyvips

def rm_create_dir(path1):

try:

os.rmdir(path1)

except OSError:

print(" ")

try:

os.mkdir(path1)

except OSError:

print ("Create dir %s failed" % path1)

else:

print ("Created dir %s " % path1)

return(0)

def create_dirs():

rm_create_dir("chelsa")

rm_create_dir("chelsa2")

rm_create_dir("work1")

rm_create_dir("work2")

rm_create_dir("work3")

rm_create_dir("work4")

rm_create_dir("work5")

rm_create_dir("work6")

rm_create_dir("work7")

rm_create_dir("work8")

rm_create_dir("output1")

return(0)

def mini_create_dirs():

rm_create_dir("work1")

rm_create_dir("work2")

rm_create_dir("work3")

rm_create_dir("work4")

rm_create_dir("work5")

rm_create_dir("work6")

rm_create_dir("work7")

rm_create_dir("work8")

rm_create_dir("output1")

return(0)

def save_raster_compress(outname1, rastype1, rasdata1,lon1,lat1,lon2,lat2,cols, rows):

## testef w/gtiff ok

xmin=lon1

ymin=lat1

xmax=lon2

ymax=lat2

nx=cols

ny=rows

xres = (xmax - xmin) / float(nx)

yres = (ymax - ymin) / float(ny)

geotransform = (xmin, xres, 0, ymax, 0, -yres)

dst_ds = gdal.GetDriverByName(rastype1).Create(outname1, nx, ny, 1, gdal.GDT_Float32, options=['COMPRESS=DEFLATE'])

dst_ds.SetGeoTransform(geotransform) # specify coords

srs = osr.SpatialReference() # establish encoding

srs.ImportFromEPSG(4326) # lat/long

dst_ds.SetProjection(srs.ExportToWkt()) # export coords to file

dst_ds.GetRasterBand(1).WriteArray(rasdata1) # write r-band to the raster

dst_ds.FlushCache() # write to disk

dst_ds = None

return(0)

def gaussian_blur_sub(in_array, size):

ny, nx = in_array.shape

ary = row_stack((tile(in_array[0,:], size).reshape((size, nx)),in_array,tile(in_array[-1,:], size).reshape((size, nx))))

arx = column_stack((tile(ary[:,0], size).reshape((size, ny + size*2)).T,ary,tile(ary[:,-1], size).reshape((size, ny + size*2)).T))

x, y = mgrid[-size:size+1, -size:size+1]

g = exp(-(x**2/float(size) + y**2/float(size)))

g = (g / g.sum()) #.astype(in_array.dtype)

return signal.convolve(arx, g, mode='valid')

def gaussian_blur(src1, dst1, size1):

source = gdal.Open(src1)

gt = source.GetGeoTransform()

proj = source.GetProjection()

band_array = source.GetRasterBand(1).ReadAsArray()

blurredArray = gaussian_blur_sub(band_array, size1)

#driver = gdal.GetDriverByName('GTIFF')

driver = gdal.GetDriverByName('NetCDF')

driver.Register()

cols = source.RasterXSize

rows = source.RasterYSize

bands = source.RasterCount

band = source.GetRasterBand(1)

datatype = band.DataType

output = driver.Create(dst1, cols, rows, bands, datatype)

output.SetGeoTransform(gt)

output.SetProjection(proj)

outBand = output.GetRasterBand(1)

outBand.WriteArray(blurredArray, 0, 0)

source = None # close raster

output = None

band = None

outBand = None

return(0)

def rasterize_shapefile(shpath1, outfile1, lon1, lat1, lon2, lat2, cols, rows):

outfile2=outfile1.replace("tif","nc")

tempofile1="temp1.tif"

kom1="gdal_rasterize -burn 255 -ot Float32"

kom2=" -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)

kom3=" -ts "+str(cols)+" "+str(rows)

kom4=" "+shpath1+" "+tempofile1

kommandoo1=kom1+kom2+kom3+kom4

koma2="gdal_calc.py -A "+tempofile1+ " --calc=\"(A>200)*99\" --outfile=temp2.tif"

koma3="gdal_calc.py -A temp2.tif --calc=\"(A<1)*1\" --outfile="+outfile1

#koma4="gdal_calc.py -A temp3.tif --calc=\"(A>97)*0\" --outfile=temp4.tif"

kommandoo2="gdal_translate -of netcdf -ot Float32 "+outfile1+" "+tempofile1

print (kommandoo1)

#print (kommandoo2)

os.system(kommandoo1)

os.system(koma2)

os.system(koma3)

#os.system(koma4)

#river_proximity("temp3.tif", "trivo.tif")

os.system(kommandoo2)

return(0)

def chelsa_file_name(variable1, year1,month1):

fana1="nonefile_error"

if(month1==0):

# annual

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(year1)+base2

else:

if (variable1=="bio"):

## bio, not month

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+str(month1).zfill(2)+"_"+str(year1)+base2

else:

## monthly variable

variable2=variable1

base1="CHELSA"

base2="_V1.2.1.tif"

fana1=base1+"_"+variable2+"_"+str(year1)+"_"+str(month1).zfill(2)+base2

return(fana1)

def chelsa_timeseries_name(variable1, year1,month1):

fana1="nonefile_error"

if(month1==0):

# annual

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(year1)+base2

else:

if (variable1=="bio"):

## bio, not month

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+str(month1).zfill(2)+"_"+str(year1)+base2

else:

## monthly variable

variable2=variable1

base1="CHELSA"

base2="_V1.2.1.tif"

fana1=base1+"_"+variable2+"_"+str(year1)+"_"+str(month1).zfill(2)+base2

return(fana1)

def chelsa_simu_name(simu1,variable1, year1,month1):

fana1="nonefile_error"

base1="CHELSA"+"_"+simu1

base2="_1.tif"

simu2=simu1

if(simu1=="NCAR_CCSM4"):

simu2="PMIP_CCSM4"

if(simu1=="PMIP_CCSM4"):

simu1="NCAR_CCSM4"

simu2="PMIP_CCSM4"

base3="CHELSA"+"_"+simu2

if(month1==0):

# annual

variable2=variable1

fana1=base3+"_"+variable2+"_"+str(year1)+base2

else:

if (variable1=="bio"):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/pmip3/NCAR_CCSM4/CHELSA_PMIP_CCSM4_BIO_01.tif

## bio, not month

variable2="BIO"

fana1=base3+"_"+variable2+"_"+str(month1).zfill(2)+".tif"

else:

## monthly variable

variable2=variable1

if (variable2=="prec"):

if(month1==1):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/pmip3/NCAR_CCSM4/CHELSA_PMIP_CCSM4_prec_01_1.tif

#fana1=base3+"_"+variable2+"_"+str(month1).zfill(2)+base2

fana1=base3+"_"+variable2+"_"+str(month1).zfill(2)+base2

else:

fana1=base3+"_"+variable2+"_"+str(month1)+base2

else:

fana1=base3+"_"+variable2+"_"+str(month1)+base2

return(fana1)

def chelsa_trace_name(variable1, year1,month1):

fana1="nonefile_error"

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/pr/CHELSA_TraCE21k_pr_10_-100_V1.0.tif

base1="CHELSA_TraCE21k"

base2="_V1.0.tif"

year2=int(year1/100)*-1

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/tasmax/CHELSAtrace_tasmax_7_-145_V1.0.tif

if(month1==0):

if (variable1=="orog"):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/orog/CHELSA_TraCE21k_dem_-101_V1.0.tif

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/orog/glacier_plus_dem_0_V1.2.tif

fana1="CHELSA_TraCE21k_dem_"+str(year2*-1)+"_V1.2.tif"

return(fana1)

else:

# annual

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(year2)+base2

else:

if (variable1=="bio"):

## bio, not month

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/bio/CHELSA_bio01_-100_V1.2.1.tif

##print("BIIB MAYBE NOK")

base1="CHELSA"

base2="_V1.2.1.tif"

variable2="bio"

fana1=base1+"_"+variable2+str(month1).zfill(2)+"_"+str(year2)+base2

else:

## monthly variable

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(month1)+"_"+str(year2)+base2

return(fana1)

def chelsa_cruts_name(variable1, year1,month1):

fana1="nonefile_error"

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_cruts/tmax/CHELSAcruts_tmax_10_1901_V.1.0.tif

base1="CHELSAcruts"

base2="_V.1.0.tif"

if(month1==0):

# annual NA NOK

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(year1)+base2

else:

if (variable1=="bio"):

## bio, not month NA NOK

variable2=variable1

fana1=base1+"_"+variable2+str(month1)+"_"+str(year1)+base2

else:

## monthly variable

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(month1)+"_"+str(year1)+base2

return(fana1)

def chelsa_climatologies_name(variable1, year1,month1):

fana1="nonefile_error"

base1="CHELSA"

base2="_1979-2013_V1.2_land.tif"

variable2=variable1

if(variable1=="tmean"):

variable2="temp10"

if(variable1=="tmax"):

variable2="temp10"

if(variable1=="tmin"):

variable2="temp10"

if(variable1=="prec"):

base2="_V1.2_land.tif"

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/climatologies/prec/CHELSA_prec_01_V1.2_land.tif

if(month1<1):

print("Month below 0")

return(0)

if(month1==0):

# annual NOK

variable2=variable1

fana1=base1+"_"+variable2+"_"+base2

else:

if (variable1=="bio"):

## bio, not month

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/climatologies/bio/CHELSA_bio10_12.tif

variable2="bio10"

fana1=base1+"_"+variable2+"_"+str(month1).zfill(2)+".tif"

else:

#print("CC Month")

#print(month1)

## monthly variable

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/climatologies/tmean/CHELSA_temp10_01_1979-2013_V1.2_land.tif

fana1=base1+"_"+variable2+"_"+str(month1).zfill(2)+base2

#print(fana1)

return(fana1)

def save_raster(outname1, rastype1, rasdata1,lon1,lat1,lon2,lat2,cols, rows):

## testef w/gtiff ok

xmin=lon1

ymin=lat1

xmax=lon2

ymax=lat2

nx=cols

ny=rows

xres = (xmax - xmin) / float(nx)

yres = (ymax - ymin) / float(ny)

geotransform = (xmin, xres, 0, ymax, 0, -yres)

dst_ds = gdal.GetDriverByName(rastype1).Create(outname1, nx, ny, 1, gdal.GDT_Float32, options=['COMPRESS=DEFLATE'])

dst_ds.SetGeoTransform(geotransform) # specify coords

srs = osr.SpatialReference() # establish encoding

srs.ImportFromEPSG(4326) # lat/long

dst_ds.SetProjection(srs.ExportToWkt()) # export coords to file

dst_ds.GetRasterBand(1).WriteArray(rasdata1) # write r-band to the raster

dst_ds.FlushCache() # write to disk

dst_ds = None

return(0)

def chelsa_exchelsa_name(simu1, variable1, year1,month1):

fana1="nonefile_error"

base1="CHELSA"

base2=".tif"

## note change of variables!!!

variable2=variable1

variable1=simu1

if(variable1=="pet"):

base2="_1979-2013.tif"

if(variable1=="pet"):

base2="_1979-2013.tif"

if(variable2 in("dfg", "gsl","gst", "lgd")):

base2="_V1.2.1.sdat.tif"

if(month1==0):

# annual NOK

fana1=base1+"_"+variable2+"_"+str(year1)+base2

else:

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/exchelsa/srad/CHELSA_stot_7.tif

fana1=base1+"_"+variable2+"_"+str(month1)+base2

return(fana1)

def get_chelsa_variable(repo1, simu1, variable1, year1, month1):

headers1 = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.80 Safari/537.36',

'Content-Type': 'text/html',

}

baseurl1="https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1"

url0=baseurl1+"/"+repo1+"/"

if(repo1=="timeseries"):

pathname1=variable1

filename1=chelsa_file_name(variable1, year1,month1)

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

if(repo1=="pmip3"):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/pmip3/NCAR_CCSM4/CHELSA_PMIP_CCSM4_tmean_7_1.tif

if(simu1=="DEM"):

url1="https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/pmip3/DEM/high_longlat.tif"

outfilename1="./chelsa/high_longlat.tif"

else:

pathname1=simu1

basename0=chelsa_simu_name(simu1,variable1, year1,month1)

filename1=basename0

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

if(repo1=="chelsa_trace"):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_trace/tasmax/CHELSAtrace_tasmax_7_-145_V1.0.tif

pathname1=variable1

filename1=chelsa_trace_name(variable1, year1,month1)

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

if(repo1=="chelsa_cruts"):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/chelsa_cruts/tmax/CHELSAcruts_tmax_10_1901_V.1.0.tif

pathname1=variable1

filename1=chelsa_cruts_name(variable1, year1,month1)

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

if(repo1=="climatologies"):

pathname1=variable1

filename1=chelsa_climatologies_name(variable1, year1,month1)

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

if(repo1=="exchelsa"):

## !! note use simu1 as folder, variable1 as variable

pathname1=simu1

filename1=chelsa_exchelsa_name(simu1, variable1, year1,month1)

url1=url0+pathname1+"/"+filename1

outfilename1="./chelsa/"+filename1

print(url1)

print(outfilename1)

r1 = requests.get(url1, headers=headers1)

if(r1==0):

printf("Chelsa loading error")

return(-1)

with open(outfilename1, "wb") as code:

code.write(r1.content)

return(0)

def get_chelsa_trace_data(year1):

for n in range(1,13):

get_chelsa_variable("chelsa_trace","", "pr",year1, n)

for n in range(1,20):

get_chelsa_variable("chelsa_trace","", "bio", year1, n)

for n in range(1,13):

get_chelsa_variable("chelsa_trace","", "tasmax", year1, n)

get_chelsa_variable("chelsa_trace","", "tasmin", year1, n)

get_chelsa_variable("chelsa_trace","", "orog", year1, 0)

return(0);

def getFeatures(gdf):

return [json.loads(gdf.to_json())['features'][0]['geometry']]

def clip_raster_to_outfile(inf1, outf1, lon1, lat1, lon2, lat2):

print("clip rout")

try:

data = rasterio.open(inf1)

except:

print("Dscaler.py: Raster file error, maybe corrupt")

return(-1)

print("Data")

print(data)

print(".. data")

bbox=box(lon1, lat1, lon2, lat2)

geo=gpd.GeoDataFrame({'geometry': bbox}, index=[0], crs=from_epsg(4326))

coords = getFeatures(geo)

#print(coords)

out_img, out_transform = mask(data, shapes=coords, crop=True)

out_meta = data.meta.copy()

#epsg_code = int(data.crs.data['init'][1:])

epsg_code=int(4326)

height=out_img.shape[1]

width=out_img.shape[2]

#print(height)

#print (width)

out_meta.update({"driver": "GTiff","height": out_img.shape[1],"width": out_img.shape[2],"transform": out_transform,"crs": pycrs.parse.from_epsg_code(epsg_code).to_proj4()})

try:

with rasterio.open(outf1, "w", **out_meta) as dest:

dest.write(out_img)

except:

print("TIF write error")

#clipped = rasterio.open(outf1)

return(0)

def get_chelsa_timeseries_variable(year1, variable1):

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/timeseries/tmean/CHELSA_tmean_1979_01_V1.2.1.tif

variable2=variable1

print("Timeseries variable ", year1,variable1)

urlbase1="https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/timeseries/"

base1="CHELSA"

base2="_V1.2.1.tif"

headers1 = {

'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/46.0.2490.80 Safari/537.36',

'Content-Type': 'text/html',

}

for n in range(1,13):

geturl1=urlbase1+variable1+"/"+base1+"_"+variable2+"_"+str(year1)+"_"+str(n).zfill(2)+base2

filename1="./chelsa/"+base1+"_"+variable2+"_"+str(year1)+"_"+str(n).zfill(2)+base2

print(geturl1)

print(filename1)

r1 = requests.get(geturl1, headers=headers1)

if(r1==0):

printf("Chelsa loading error")

return(-1)

with open(filename1, "wb") as code:

code.write(r1.content)

return(0)

def chelsa_file_name_b(variable1, year1,month1):

fana1="nonefile_error"

if(month1==0):

# annual

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+"_"+str(year1)+base2

else:

if (variable1=="bio"):

## bio, not month

base1="CHELSA"

base2="_V1.2.1.tif"

variable2=variable1

fana1=base1+"_"+variable2+str(month1).zfill(2)+"_"+str(year1)+base2

else:

## monthly variable

variable2=variable1

base1="CHELSA"

base2="_V1.2.1.tif"

fana1=base1+"_"+variable2+"_"+str(year1)+"_"+str(month1).zfill(2)+base2

return(fana1)

def cut_and_scale_chelsa_variable(repo1, simu1, variable1, year1, month1, lon1, lat1, lon2, lat2, width1, height1, width2, height2):

chelsadir1="./chelsa/"

chelsadir2="./work1/"

chelsadir3="./work2/"

chelsadir4="./work3/"

chelsadir5="./work4/"

chen1=chelsa_timeseries_name(variable1, year1,month1)

if(repo1=='timeseries'):

chen1=chelsa_timeseries_name(variable1, year1,month1)

if(repo1=='trace'):

print("trace")

chen1=chelsa_trace_name(variable1, year1,month1)

inf1=chelsadir1+chen1

outf1=chelsadir2+chen1

outf2=chelsadir3+chen1

outf3=chelsadir4+chen1

outf3=outf3.replace(".tif", ".nc")

outf4=chelsadir5+chen1

outf5=outf4.replace(".tif", ".nc")

print(inf1)

print("clip")

clip_raster_to_outfile(inf1, outf1, lon1, lat1, lon2, lat2)

ds=0

print("warp")

ds = gdal.Warp(outf2, outf1, width=width1, height=height1, resampleAlg='bilinear')

ds=0

ds = gdal.Warp(outf4, outf1, width=width2, height=height2, resampleAlg='bilinear')

ds = 0

print("translate")

ds = gdal.Translate(outf3, outf2, format='NetCDF')

ds = gdal.Translate(outf5, outf4, format='NetCDF')

ds=0

return(0)

def random_forest_multiple_vars( x1, y1, x2):

print ("RF /2")

xa1=np.array(x1).astype(float)

ya1=np.array(y1).astype(float)

xa2=np.array(x2).astype(float)

#print (xa1)

# quit(-1)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

#y=ya1.reshape((-1, 1))

y=ya1.ravel()

xb=xa2.reshape((-1, dim2))

#model = LinearRegression().fit(x, y)

#degree=3

#polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

#model=polyreg.fit(x,y)

sc = StandardScaler()

xa = sc.fit_transform(x)

xba = sc.transform(xb)

model = RandomForestRegressor(n_estimators=10, max_features=2).fit(xa,y)

y2= model.predict(xba)

return(y2)

def linear_regression_singe_var( x1, y1, x2):

#print (x1)

#print(y1)

#return(0)

#xa1=np.array(x1)

#ya1=np.array(y1)

#xa2=np.array(x2)

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

sha1=np.shape(x1)

#dim2=sha1[1]

#x=xa1.reshape((-1, dim2))

#y=ya1.reshape((-1, 1))

#xb=xa2.reshape((-1, dim2))

x=xa1.reshape((-1, 1))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, 1))

#print (x)

#print (y)

#model = LinearRegression().fit(x, y)

#model = LogisticGAM().fit(x, y)

#model = LinearGAM().fit(x, y)

#model = RandomForestRegressor(n_estimators=10, max_features=2).fit(x,y)

degree=2

polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

#polyreg=make_pipeline(PolynomialFeatures(degree), )

model=polyreg.fit(x,y)

## warning not xb

y2= model.predict(xb)

#print(y2)

#print("LR")

return(y2)

def linear_regression_multiple_vars( x1, y1, x2):

print ("MLR")

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#model = LinearRegression().fit(x, y)

degree=3

polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())

model=polyreg.fit(x,y)

y2= model.predict(xb)

return(y2)

def gam_multiple_vars( x1, y1, x2):

print ("GAM")

xa1=np.array(x1)

ya1=np.array(y1)

xa2=np.array(x2)

#print (xa1)

# quit(-1)

sha1=np.shape(x1)

dim2=sha1[1]

x=xa1.reshape((-1, dim2))

y=ya1.reshape((-1, 1))

xb=xa2.reshape((-1, dim2))

#sc = StandardScaler()

#xa = sc.fit_transform(x)

#xba = sc.transform(xb)

#model = LogisticGAM().fit(x, y)

model = LinearGAM().fit(x, y)

y2= model.predict(xb)

return(y2)

def array2raster(newRasterfn,rasterfn,array):

raster = gdal.Open(rasterfn)

geotransform = raster.GetGeoTransform()

originX = geotransform[0]

originY = geotransform[3]

pixelWidth = geotransform[1]

pixelHeight = geotransform[5]

cols = array.shape[1]

rows = array.shape[0]

driver = gdal.GetDriverByName('GTiff')

outRaster = driver.Create(newRasterfn, cols, rows, 1, gdal.GDT_Byte)

outRaster.SetGeoTransform((originX, pixelWidth, 0, originY, 0, pixelHeight))

outband = outRaster.GetRasterBand(1)

outband.WriteArray(array)

outRasterSRS = osr.SpatialReference()

outRasterSRS.ImportFromWkt(raster.GetProjectionRef())

outRaster.SetProjection(outRasterSRS.ExportToWkt())

outband.FlushCache()

def array2nc(newRasterfn,rasterfn,array):

raster = gdal.Open(rasterfn)

geotransform = raster.GetGeoTransform()

originX = geotransform[0]

originY = geotransform[3]

pixelWidth = geotransform[1]

pixelHeight = geotransform[5]

cols = array.shape[1]

rows = array.shape[0]

driver = gdal.GetDriverByName('NetCDF')

#outRaster = driver.Create(newRasterfn, cols, rows, 1, gdal.GDT_Byte)

outRaster = driver.Create(newRasterfn, cols, rows, 1, gdal.GDT_Float32)

outRaster.SetGeoTransform((originX, pixelWidth, 0, originY, 0, pixelHeight))

outband = outRaster.GetRasterBand(1)

outband.WriteArray(array)

outRasterSRS = osr.SpatialReference()

outRasterSRS.ImportFromWkt(raster.GetProjectionRef())

outRaster.SetProjection(outRasterSRS.ExportToWkt())

outband.FlushCache()

return(0)

def get_chelsa_timeseries_bundle(year1):

for n in range(1,13):

get_chelsa_variable("timeseries","", "tmean", year1, n)

get_chelsa_variable("timeseries","", "prec", year1, n)

for n in range(1,20):

get_chelsa_variable("timeseries","", "bio", year1, n)

get_chelsa_variable("timeseries","", "gts5", year1, 0)

return(0)

def cut_chelsa_bundle(repo1, simu1, year1, month1, lon1, lat1, lon2, lat2, width1, height1, width2, height2):

#gdal.SetConfigOption('CPL_LOG', 'NUL')

global set_biovars_off

if(repo1=='timeseries'):

variable1="gts5"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, 0, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

if (repo1=='timeseries'):

for n in range(1,13):

print("Variables ", n)

variable1="tmean"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

variable1="prec"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

if (set_biovars_off==0):

for n in range(1,20):

print("Bio ", n)

variable1="bio"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

if (repo1=='trace'):

for n in range(1,13):

print("Trace variables ", n)

variable1="tasmax"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

variable1="tasmin"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

variable1="pr"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

if (set_biovars_off==0):

#for n in range(8,9):

for n in range(1,20):

print("Trace bio ", n)

variable1="bio"

cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, n, lon1, lat1, lon2, lat2, width1, height1, width2, height2)

#cut_and_scale_chelsa_variable(repo1, simu1,variable1, year1, 0, lon1, lat1, lon2, lat2, width1, height1)

return(0)

def downscale_chelsa_bundle(repo1, simu1, year1):

print("Downscale test ...")

bigs=[]

smalls=[]

#variable1="tmean"

for n in range(1,13):

#for n in range(6,8):

#for n in range(1,13,3):

print("Variables ", n)

month1=n

maxname1="./work2/"+chelsa_timeseries_name(variable1, year1,month1)

minname1="./work4/"+chelsa_timeseries_name(variable1, year1,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls.append(minna1)

bigs.append(maxa1)

#print("Target rasters ...")

month1=0

mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

month1=0

maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

apoints1=list(zip(*smalls))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs))

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

#y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1)

youtarray1=y2.reshape( bheight1, bwidth1).astype(float)

array2nc("./output1/downscaled.nc",maxtargetname1,youtarray1)

return(0)

def tiff2nc(fromraster, toraster):

raster = gdal.Open(fromraster)

geotransform = raster.GetGeoTransform()

originX = geotransform[0]

originY = geotransform[3]

pixelWidth = geotransform[1]

pixelHeight = geotransform[5]

array=raster.ReadAsArray()

cols = array.shape[1]

rows = array.shape[0]

driver = gdal.GetDriverByName('NetCDF')

outRaster = driver.Create(toraster, cols, rows, 1, gdal.GDT_Float32)

outRaster.SetGeoTransform((originX, pixelWidth, 0, originY, 0, pixelHeight))

outband = outRaster.GetRasterBand(1)

outband.WriteArray(array)

outRasterSRS = osr.SpatialReference()

outRasterSRS.ImportFromWkt(raster.GetProjectionRef())

outRaster.SetProjection(outRasterSRS.ExportToWkt())

outband.FlushCache()

return(0)

def downscale_neo(repo1, simu1, year1):

print("NEO downscale test ...")

bigs=[]

smalls=[]

#variable1="tmean"

for variable1 in ("tmean", "prec"):

print (variable1)

for n in range(1,13):

print("Variables ", n)

month1=n

maxname1="./work2/"+chelsa_timeseries_name(variable1, year1,month1)

minname1="./work4/"+chelsa_timeseries_name(variable1, year1,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls.append(minna1)

bigs.append(maxa1)

#print("Target rasters ...")

month1=0

#mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

mintargetname1="./work4/neo_ndvi.tif"

month1=0

maxtargetname1="./work2/neo_ndvi.tif"

#maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

## note warning this is data filter, very specific to dataset

## clamps to max, min values

minnatarget1 = np.where(minnatarget1 < 0, 0, minnatarget1)

maxatarget1 = np.where(maxatarget1 < 0, 0, maxatarget1)

minnatarget1 = np.where(minnatarget1 > 1, 0, minnatarget1)

maxatarget1 = np.where(maxatarget1 > 1, 0, maxatarget1)

apoints1=list(zip(*smalls))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs))

print("Downscaling ....")

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

##y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1)

youtarray1=y2.reshape( bheight1, bwidth1).astype(float)

array2nc("./output1/downscaled_neo_current.nc",maxtargetname1,youtarray1)

return(0)

def process_neo_raster(inputfile1, outputfile0, referencefile0):

outputfile1="./work2/"+outputfile0

outputfile2="./work4/"+outputfile0

referencefile1="./work2/"+referencefile0

referencefile2="./work4/"+referencefile0

raster_reso_to_reference_raster(inputfile1, referencefile1, outputfile1)

raster_reso_to_reference_raster(inputfile1, referencefile2, outputfile2)

outputnc1=outputfile1.replace(".tif", ".nc")

outputnc2=outputfile2.replace(".tif", ".nc")

tiff2nc(outputfile1, outputnc1)

tiff2nc(outputfile2, outputnc2)

return(0);

def calculate_tmean_from_trace_tasmax_tasmin(repo1, simu1, year2, lon1, lat1, lon2, lat2, width1, height1, width2, height2):

## nok

#for n in range(1,13):

for n in range(1,13):

print(n)

name1="./work2/"+chelsa_trace_name("tasmax", year2, n)

name2="./work2/"+chelsa_trace_name("tasmin", year2, n)

name3="./work2/"+chelsa_trace_name("tmean", year2, n)

name4=name3

name4=name4.replace("tif", "nc")

name5="./work4/"+chelsa_trace_name("tasmax", year2, n)

name6="./work4/"+chelsa_trace_name("tasmin", year2, n)

name7="./work4/"+chelsa_trace_name("tmean", year2, n)

name8=name7

name8=name8.replace("tif", "nc")

inimage1, lats1, lons1=load_raster_float32(name1)

inimage2, lats1, lons1=load_raster_float32(name2)

outimage1=(inimage1+inimage2)/2.0

outimage1f=np.array(outimage1).astype(float)

print(name3)

saveraster_float32(name3,"GTiff", outimage1f, lon1, lon2, lat1, lat2)

#saveraster_float32(name4,"NetCDF4", outimage1f, lon1, lon2, lat1, lat2)

jnimage1, lats1, lons1=load_raster_float32(name5)

jnimage2, lats1, lons1=load_raster_float32(name6)

joutimage1=(jnimage1+jnimage2)/2.0

joutimage1f=np.array(joutimage1).astype(float)

print(name7)

saveraster_float32(name7,"GTiff", joutimage1f, lon1, lon2, lat1, lat2)

#saveraster_float32(name8,"NetCDF4", outimage1f, lon1, lon2, lat1, lat2)

print ("DEBUG BREAK")

#quit(-1)

return(0)

def trace_tmean_from_tasmax_tasmin(slice1):

for n in range(1,13):

aoutname1="./work2/"+chelsa_trace_name("tmean", slice1,n)

ainame1="./work2/"+chelsa_trace_name("tasmax", slice1,n)

ainame2="./work2/"+chelsa_trace_name("tasmin", slice1,n)

boutname1="./work4/"+chelsa_trace_name("tmean", slice1,n)

biname1="./work4/"+chelsa_trace_name("tasmax", slice1,n)

biname2="./work4/"+chelsa_trace_name("tasmin", slice1,n)

kommand1="gdal_calc.py --calc=\"(A+B)/2\" --outfile="+aoutname1+" -A "+ainame1+" -B "+ainame2

kommand2="gdal_calc.py --calc=\"(A+B)/2\" --outfile="+boutname1+" -A "+biname1+" -B "+biname2

os.system(kommand1)

os.system(kommand2)

def downscale_timeseries_trace(outname1,repo1, repo2, simu1, year1, year2):

print("Timeseries vs trace downscale test ...")

slice1=int(year2/100)

bigs1=[]

smalls1=[]

variable1='tmean'

print("Timeseries")

if (variable1=='tmean'):

#for variable1 in ("tmean", "prec"):

#for variable1 in ("tasmax", "tasmin"):

print (variable1)

#for n in range(1,13):

for n in range(4,10):

print(" TS variables ", n)

month1=n

maxname1="./work2/"+chelsa_timeseries_name(variable1, year1,month1)

minname1="./work4/"+chelsa_timeseries_name(variable1, year1,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls1.append(minna1)

bigs1.append(maxa1)

bigs2=[]

smalls2=[]

if(variable1=='tmean'):

#for variable1 in ("tmean", "pr"):

#for variable1 in ("tasmax", "tasmin"):

print (variable1)

#for n in range(1,13):

for n in range(4,10):

print("n TRACE variables ", n)

month1=n

maxname1="./work2/"+chelsa_trace_name(variable1, year2,month1)

minname1="./work4/"+chelsa_trace_name(variable1, year2,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls2.append(minna1)

bigs2.append(maxa1)

#print("Target rasters ...")

month1=0

mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

#mintargetname1="./work4/neo_ndvi.tif"

#maxtargetname1="./work2/neo_ndvi.tif"

#mintargetname1="./work4/neo_rgb.tif"

#maxtargetname1="./work2/neo_rgb.tif"

#loadrgb(filename)

#writergb(salbuname, filename,arrays)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

## note warning this is data filter, very specific to dataset

## clamps to max, min values

#minnatarget1 = np.where(minnatarget1 < 0, 0, minnatarget1)

#maxatarget1 = np.where(maxatarget1 < 0, 0, maxatarget1)

#minnatarget1 = np.where(minnatarget1 > 1, 0, minnatarget1)

#maxatarget1 = np.where(maxatarget1 > 1, 0, maxatarget1)

apoints1=list(zip(*smalls1))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs1))

apoints2=list(zip(*smalls2))

bpoints2=minnatarget1

cpoints2=list(zip(*bigs2))

print("Downscaling ....")

## test only

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

##y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1) ## nogood

#y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1) ##ok

#y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints2) ##NOK

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints2)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray1=y2.reshape( bheight1, bwidth1).astype(float)

array2nc(outname1,maxtargetname1,youtarray1)

#array2raster("./output1/downscaled.tiff",maxtargetname1,youtarray1)

return(0)

def loadrgb(filename):

raster = gdal.Open(filename)

geotransform = raster.GetGeoTransform()

originX = geotransform[0]

originY = geotransform[3]

pixelWidth = geotransform[1]

pixelHeight = geotransform[5]

cols = array.shape[1]

rows = array.shape[0]

r=raster.ReadAsArray(1)

g=raster.ReadAsArray(2)

b=raster.ReadAsArray(3)

r1=r.astype(float)

g2=g.astype(float)

b2=b.astype(float)

rasa1=[]

rasa1.append(r1)

rasa1.append(g1)

rasa1.append(b1)

rgbpoints1=list(zip(*rasa1))

return(rgbpoints1)

# pazka

def process_rgb_raster():

inputfile1="./neo/BlueMarbleNG_2004-08-01_rgb_3600x1800.TIFF"

referencefile1="./work2/CHELSA_gts5_1993_V1.2.1.tif"

outputfile1="./work2/neo_rgb.tif"

#raster_reso_to_reference_raster(inputfile1, referencefile1, outputfile1)

referencefile2="./work4/CHELSA_gts5_1993_V1.2.1.tif"

outputfile2="./work4/neo_rgb.tif"

#raster_reso_to_reference_raster(inputfile1, referencefile2, outputfile2)

outputnc1=outputfile1.replace(".tif", ".nc")

outputnc2=outputfile2.replace(".tif", ".nc")

tiff2nc(outputfile1, outputnc1)

tiff2nc(outputfile2, outputnc2)

return(0);

def cut_and_scale_rgb_file(neofile1, neofile2,lon1, lat1, lon2, lat2, width1, height1, width2, height2):

chelsadir2="./work1/"

chelsadir3="./work2/"

chelsadir4="./work3/"

chelsadir5="./work4/"

inf1=neofile1

outf1=chelsadir2+neofile2

outf2=chelsadir3+neofile2

outf3=chelsadir4+neofile2

outf3=outf3.replace(".tif", ".nc")

outf4=chelsadir5+neofile2

outf5=outf4.replace(".tif", ".nc")

print(inf1)

clip_raster_to_outfile(inf1, outf1, lon1, lat1, lon2, lat2)

ds=0

ds = gdal.Warp(outf2, outf1, width=width1, height=height1, resampleAlg='bilinear')

ds=0

ds = gdal.Warp(outf4, outf1, width=width2, height=height2, resampleAlg='bilinear')

ds = 0

ds = gdal.Translate(outf3, outf2, format='NetCDF')

ds = gdal.Translate(outf5, outf4, format='NetCDF')

ds=0

return(0)

def rgb_downscale_timeseries_trace(repo1, repo2, simu1, year1, year2, lon1, lat1, lon2, lat2, width1, height1):

print("RGB downscale test ...")

slice1=int(year2/100)

bigs1=[]

smalls1=[]

variable1='tmean'

print("Timeseries")

#if (variable1=='tmean'):

for variable1 in ("tmean", "prec"):

print (variable1)

for n in range(1,13):

print(" TS variables ", n)

month1=n

maxname1="./work2/"+chelsa_timeseries_name(variable1, year1,month1)

minname1="./work4/"+chelsa_timeseries_name(variable1, year1,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls1.append(minna1)

bigs1.append(maxa1)

bigs2=[]

smalls2=[]

#if(variable1=='tmean'):

for variable1 in ("tmean", "pr"):

print (variable1)

for n in range(1,13):

print("n TRACE variables ", n)

month1=n

maxname1="./work2/"+chelsa_trace_name(variable1, year2,month1)

minname1="./work4/"+chelsa_trace_name(variable1, year2,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls2.append(minna1)

bigs2.append(maxa1)

#print("Target rasters ...")

month1=0

#mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

#maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

#mintargetname1="./work4/neo_ndvi.tif"

#maxtargetname1="./work2/neo_ndvi.tif"

mintargetname1="./work4/neo_rgb.tif"

maxtargetname1="./work2/neo_rgb.tif"

#loadrgb(filename)

#writergb(salbuname, filename,arrays)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

maxtarget2 = gdal.Open(maxtargetname1)

maxtargetarray2 = np.array(maxtarget1.GetRasterBand(2).ReadAsArray())

mintarget2 = gdal.Open(mintargetname1)

mintargetarray2 = np.array(mintarget2.GetRasterBand(2).ReadAsArray())

minnatarget2=mintargetarray2.flatten()

maxatarget2=maxtargetarray2.flatten()

maxtarget3 = gdal.Open(maxtargetname1)

maxtargetarray3 = np.array(maxtarget3.GetRasterBand(3).ReadAsArray())

mintarget3 = gdal.Open(mintargetname1)

mintargetarray3 = np.array(mintarget3.GetRasterBand(3).ReadAsArray())

minnatarget3=mintargetarray3.flatten()

maxatarget3=maxtargetarray3.flatten()

apoints1=list(zip(*smalls1))

cpoints1=list(zip(*bigs1))

apoints2=list(zip(*smalls2))

cpoints2=list(zip(*bigs2))

bpoints1=minnatarget1

bpoints2=minnatarget1

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray1=y2.reshape( bheight1, bwidth1).astype(float)

bpoints1=minnatarget2

bpoints2=minnatarget2

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray2=y2.reshape( bheight1, bwidth1).astype(float)

bpoints1=minnatarget3

bpoints2=minnatarget3

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray3=y2.reshape( bheight1, bwidth1).astype(float)

arrays=[]

arrays.append(youtarray1)

arrays.append(youtarray2)

arrays.append(youtarray3)

#image_size = (width1, height1)

image_size = (height1, width1)

lat = [lat1,lat2]

lon = [lon1,lon2]

r_pixels = youtarray1.astype(np.uint8)

g_pixels = youtarray2.astype(np.uint8)

b_pixels = youtarray3.astype(np.uint8)

nx = image_size[0]

ny = image_size[1]

xmin, ymin, xmax, ymax = [min(lon), min(lat), max(lon), max(lat)]

xres = (xmax - xmin) / float(nx)

yres = (ymax - ymin) / float(ny)

geotransform = (xmin, xres, 0, ymax, 0, -yres)

dst_ds = gdal.GetDriverByName('GTiff').Create('./output1/rgb.tif', ny, nx, 3, gdal.GDT_Byte)

dst_ds.SetGeoTransform(geotransform)

srs = osr.SpatialReference()

srs.ImportFromEPSG(3857)

dst_ds.SetProjection(srs.ExportToWkt())

dst_ds.GetRasterBand(1).WriteArray(r_pixels)

dst_ds.GetRasterBand(2).WriteArray(g_pixels)

dst_ds.GetRasterBand(3).WriteArray(b_pixels)

dst_ds.FlushCache()

dst_ds = None

#writergb("./work2/neo_rgb.tif", "./output/rgbtest.tif",arrays)

#array2nc("./output1/downscaled.nc",maxtargetname1,youtarray1)

#array2raster("./output1/downscaled.tiff",maxtargetname1,youtarray1)

return(0)

def calcu_daylength(dayOfYear, lat):

latInRad = np.deg2rad(lat)

declinationOfEarth = 23.45*np.sin(np.deg2rad(360.0*(283.0+dayOfYear)/365.0))

if (-np.tan(latInRad) * np.tan(np.deg2rad(declinationOfEarth)) <= -1.0):

return (24.0)

elif (-np.tan(latInRad) * np.tan(np.deg2rad(declinationOfEarth)) >= 1.0):

return (0.0)

else:

hourAngle = np.rad2deg(np.arccos(-np.tan(latInRad) * np.tan(np.deg2rad(declinationOfEarth))))

return (2.0*hourAngle/15.0)

def calcu_daylength_of_month(month, latgrida1):

dof1=month*30+15

nax=np.shape(latgrida1)[1]-1

nay=np.shape(latgrida1)[0]-1

print(nax,nay)

daylengthgrida1=latgrida1*0.0

for nny in range(0,nay):

for nnx in range(0,nax):

latt1=latgrida1[nny,nnx]

daylengthss1=calcu_daylength(dof1, latt1)

daylengthgrida1[nny,nnx]=daylengthss1

return(daylengthgrida1)

def days_in_month(month,year=2013):

return calendar.monthrange(year,month)[1]

def radiation(year, month, day, lat, lon,minute_step=30):

dayrad=0.0

for hour in range(24):

for minute in range(0,60,minute_step):

thr = hour + minute/60.

second=0

d = datetime(year, month, day, hour, minute, second, tzinfo=timezone.utc)

altitude_deg = pysolar.solar.get_altitude(lat, lon, d)

solar_rad = pysolar.solar.radiation.get_radiation_direct(d, altitude_deg)

altitude_deg= 90. - altitude_deg

solar_rad2=solar_rad*60*minute_step

dayrad=dayrad+solar_rad2

return (dayrad)

def monthly_solar_incoming_radiation(year,month,lat):

minute_step=30

days=calendar.monthrange(year,month)[1]+1

dayrad=abs(lat)*0.0

#print(dayrad)

#quit(-1)

edayrada=dayrad

for n in range(1,days):

dayrada=radiation(year, month, n, lat, 0,minute_step=30)

#print(dayrada)

dayradasum=np.sum(dayrada)

if(np.isnan(dayradasum)):

dayrada=edayrada

dayrad=dayrad+dayrada

edayrada=dayrada

return(dayrad)

def load_raster_float32(filename):

print(filename)

raster = gdal.Open(filename)

print(raster)

geotransform = raster.GetGeoTransform()

originX = geotransform[0]

originY = geotransform[3]

pixelWidth = geotransform[1]

pixelHeight = geotransform[5]

d1 = geotransform[2]

d2 = geotransform[4]

array = raster.ReadAsArray()

cols = array.shape[1]

rows = array.shape[0]

limitX=cols*pixelWidth+originX

limitY=rows*pixelHeight+originY

lons=np.array(np.arange(originX, limitX,pixelWidth))

lats=np.array(np.arange(originY, limitY,pixelHeight))

return(array, lats, lons)

def cut_and_scale_raster_file(neofile1, neofile2,lon1, lat1, lon2, lat2, width1, height1, width2, height2):

chelsadir2="./work1/"

chelsadir3="./work2/"

chelsadir4="./work3/"

chelsadir5="./work4/"

inf1=neofile1

outf1=chelsadir2+neofile2

outf2=chelsadir3+neofile2

outf3=chelsadir4+neofile2

outf3=outf3.replace(".tif", ".nc")

outf4=chelsadir5+neofile2

outf5=outf4.replace(".tif", ".nc")

print(inf1)

clip_raster_to_outfile(inf1, outf1, lon1, lat1, lon2, lat2)

ds=0

ds = gdal.Warp(outf2, outf1, width=width1, height=height1, resampleAlg='bilinear')

ds=0

ds = gdal.Warp(outf4, outf1, width=width2, height=height2, resampleAlg='bilinear')

ds = 0

ds = gdal.Translate(outf3, outf2, format='NetCDF')

ds = gdal.Translate(outf5, outf4, format='NetCDF')

ds=0

return(0)

def getFeatures(gdf):

return [json.loads(gdf.to_json())['features'][0]['geometry']]

def clip_raster_to_outfile_1(inf1, outf1, lon1, lat1, lon2, lat2):

data = rasterio.open(inf1)

bbox=box(lon1, lat1, lon2, lat2)

geo=gpd.GeoDataFrame({'geometry': bbox}, index=[0], crs=from_epsg(4326))

#geo=geo.to_crs(crs=data.crs.data)

coords = getFeatures(geo)

#print(coords)

out_img, out_transform = mask(data, shapes=coords, crop=True)

out_meta = data.meta.copy()

#epsg_code = int(data.crs.data['init'][1:])

epsg_code=int(4326)

height=out_img.shape[1]

width=out_img.shape[2]

#print(height)

#print (width)

out_meta.update({"driver": "GTiff","height": out_img.shape[1],"width": out_img.shape[2],"transform": out_transform,"crs": pycrs.parse.from_epsg_code(epsg_code).to_proj4()})

with rasterio.open(outf1, "w", **out_meta) as dest:

dest.write(out_img)

clipped = rasterio.open(outf1)

return(0)

def create_landsea(neofile1, neofile2,lon1, lat1, lon2, lat2, cols,rows):

array1, lats, lons=load_raster_float32(neofile1)

#array1=np.array(array1).astype(float)

#array2=np.maximum(array1, 0, array1)

array1[array1 < 0.0] = 0.0

array1[array1 > 0.0] = 1.0

array2=array1.astype(np.float32)

array3=np.flipud(array2)

drivername1="GTiff"

saveraster_float32(neofile2,drivername1, array3, lon1, lon2, lat1, lat2)

drivername2="NetCDF"

neofile3=neofile2.replace(".tif", ".nc")

saveraster_float32(neofile3,drivername2, array3, lon1, lon2, lat1, lat2)

return(0)

def saveraster_float32(newname,drivername, array, lon1, lon2, lat1, lat2):

#print (array)

print("Save")

#print (array.dtype)

#print(array)

cols=array.shape[1]

rows=array.shape[0]

array2=array.astype(np.float32)

pixelWidth = (lon2-lon1)/cols

pixelHeight = (lat2-lat1)/rows

print(cols, rows)

print (array2.shape)

driver = gdal.GetDriverByName(drivername)

outRaster = driver.Create(newname, cols, rows, 1, gdal.GDT_Float32, options=['COMPRESS=DEFLATE'])

outRaster.SetGeoTransform((lon1, pixelWidth, 0, lat1, 0, pixelHeight))

outband = outRaster.GetRasterBand(1)

outband.WriteArray(array2)

spatialReference = osr.SpatialReference()

#spatialReference.SetUTM(zonenum, zone >= 'N')

#spatialReference.SetWellKnownGeogCS('WGS84')

#

#retval = dataset.SetProjection(wkt)

outRasterSRS = osr.SpatialReference()

#outRasterSRS.ImportFromWkt("EPSG:4326")

spatialReference.SetWellKnownGeogCS('WGS84')

wkt = spatialReference.ExportToWkt()

outRaster.SetProjection(outRasterSRS.ExportToWkt())

outband.FlushCache()

driver=0

return(0)

def sea_proximity(srcname, dstname):

src_ds = gdal.Open(srcname)

srcband=src_ds.GetRasterBand(1)

drv = gdal.GetDriverByName('GTiff')

dst_ds = drv.Create(dstname,src_ds.RasterXSize, src_ds.RasterYSize, 1,gdal.GetDataTypeByName('Float32'))

dst_ds.SetGeoTransform( src_ds.GetGeoTransform() )

dst_ds.SetProjection( src_ds.GetProjectionRef() )

dstband = dst_ds.GetRasterBand(1)

gdal.ComputeProximity(srcband,dstband,["VALUES='0,-1'","DISTUNITS=GEO"])

srcband = None

dstband = None

src_ds = None

dst_ds = None

return(0)

#def gts(meantemps, basetemp, tempscale = 10, tempofset=273.16):

def gts(meantemps, basetemp, tempscale = 1, tempofset=0):

#rem note temperatures can be kelvins*10 c, not celcius

ndays =[31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]

gdds=basetemp*0.0

for n in range(0,12):

print(n)

gddx=meantemps[n]

gddx=(gddx/tempscale)-tempofset

gddx=gddx-basetemp

gddx[gddx < 0.0] = 0.0

gddx=gddx * ndays[n]

gdds=gdds+gddx

gdds=np.flipud(gdds)

return(gdds)

def load_temp_stack_1(year2):

#namebase1=CHELSA_tmean_1993_10_V1.2.1

slice2=int(year2/100)

temps=[]

for n in range(1,13):

print(n)

#name1="./work2/CHELSA_tmean_1993_"+str(n).zfill(2)+"_V1.2.1.tif"

#name1="./work2/CHELSAtrace_tmean_"+str(n)+"_-"+str(slice2)+"_V1.0.tif"

name1="./work2/CHELSA_TraCE21k_tmean_"+str(n)+"_-"+str(slice2)+"_V1.0.tif"

print(name1)

temp, lons, lats=load_raster_float32(name1)

temp2=(temp*0.1)-273.16

temps.append(temp2)

return(temps, lons, lats)

def load_precip_stack_1(year2):

slice2=int(year2/100)

temps=[]

for n in range(1,13):

print(n)

#name1="./work4/CHELSA_prec_1993_"+str(n).zfill(2)+"_V1.2.1.tif"

name1="./work2/CHELSA_TraCE21k_pr_"+str(n)+"_-"+str(slice2)+"_V1.0.tif"

print(name1)

temp, lons, lats=load_raster_float32(name1)

#temp2=(temp*0.1)-273.16

temps.append(temp)

return(temps, lons, lats)

def load_evap_stack_1():

temps=[]

for n in range(1,13):

print(n)

name1="./work2/th_evapo1_"+str(n)+".nc"

#print(name1)

temp, lons, lats=load_raster_float32(name1)

#temp2=(temp*0.1)-273.16

temps.append(temp)

return(temps, lons, lats)

def koe_evaporation_thorntwaite(temperasters, latraster, lonraster):

## maybe nok

dimx, dimy=np.shape(latraster)

tmarray=np.array(temperasters)

latdegreesarray=latraster.flatten()

londegreesarray=lonraster.flatten()

latradiansarray=latdegreesarray*0.0

len1=len(latdegreesarray)

print(len1)

for n in range(0,len1):

latdegree=latdegreesarray[n]

latradiansarray[n]=pyeto.deg2rad(latdegree)

print("tmarray")

print (np.shape(tmarray))

tempix0=[]

for iy in range(0,dimy):

for ix in range(0,dimx):

tamo=[]

for mo in range(0,12):

tt=tmarray[mo,iy,ix]

tamo.append(tt)

tamos=np.array(tamo).astype(float)

tempix0.append(tamos)

tempix1=np.array(tempix0).astype(float)

tempix=(tempix1/10.0)-273.16

print(np.shape(tempix1))

#quit(-1)

#print (latradiansarray)

#quit(-1)

#daylightarray = pyeto.monthly_mean_daylight_hours(latradiansarray)

len1=len(latradiansarray)

print(len1)

#daylightarray=latradiansarray*0

daylights0=[]

for n in range(0,len1):

daylighthours=pyeto.monthly_mean_daylight_hours(latradiansarray[n])

daylights0.append(np.array(daylighthours).astype(float))

daylights=np.array(daylights0).astype(float)

evapos0=[]

for n in range(0,len1):

tempo=tempix[n]

daylo=daylights[n]

evapo1=pyeto.thornthwaite(tempo, daylo)

evapos0.append(np.array(evapo1).astype(float))

evapos=np.array(evapos0).T

print("Shape evapos")

print (np.shape(evapos))

#quit(-1)

monthevapos=[]

for m in range(0,12):

#evapotab0=evapos[0:][m]

evapotab0=evapos[m]

print (len(evapotab0))

evapotab1=evapotab0.reshape(dimy, dimx)

monthevapos.append(evapotab1)

#plt.imshow(monthevapos[7])

#plt.show()

#quit(-1)

pointer1=62000

temp1=tempix[pointer1]

lat1=latdegreesarray[pointer1]

lon1=londegreesarray[pointer1]

evapotabu1=evapos[6]

nayte_evapo1=evapotabu1[pointer1]

print ("Nayte")

print(lat1, lon1)

print (temp1)

print(nayte_evapo1)

latitude_radians1 = pyeto.deg2rad(lat1)

mean_monthly_temperature = temp1

monthly_mean_daylight = pyeto.monthly_mean_daylight_hours(latitude_radians1)

evapo1=pyeto.thornthwaite(mean_monthly_temperature, monthly_mean_daylight)

print("Algo")

print(evapo1)

return(monthevapos)

def calcu_evaporation_thornthwaite_2(year2, lon1,lat1, lon2, lat2):

## maybe ok

ndays =[31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31]

#def calcu_daylength(dayOfYear, lat):

temps, lats, lons=load_temp_stack_1(year2)

longrida, latgrida = np.meshgrid(lons, lats)

termal_index1=temps[0]*0.0

for n in range(-1,12):

print(n+1)

tempi=temps[n]

temp5=tempi*0.2

indexmonth1=np.power(temp5, 1.514)

where_are_NaNs = np.isnan(indexmonth1)

indexmonth1[where_are_NaNs] = 0.0001

#indexmonth1[indexmonth1>0] = 0

termal_index1=termal_index1+indexmonth1

#plt.imshow(termal_index1)

#plt.show()

#quit(-1)

## coarse value!

#alphacoef1=0.49239+termal_index1*0.01792

alphacoef1=0.49239+termal_index1*0.01792

ak2=np.power(termal_index1,2.0)*7.71e-5

where_are_NaNs = np.isnan(ak2)

ak2[where_are_NaNs] = 0.0

ak3=6.72e-7*np.power(termal_index1,3.0)

where_are_NaNs = np.isnan(ak3)

ak3[where_are_NaNs] = 0.0

alphacoef1=alphacoef1+ak2+ak3

#plt.imshow(alphacoef1)

#plt.show()

solar_evapo=[]

for n in range(-1,12):

print(n)

#inname2="./work2/solar_"+str(n+1)+".tif"

#solar1,latas, lonas=load_raster_float32(inname2)

#evapo_solaronly= solar1* 0.0864 * 0.408/1000000*ndays[n+1]

daylengthgrida1=calcu_daylength_of_month(n, latgrida)

#print(daylengthgrida1)

alla12=daylengthgrida1/12.0

anna30=ndays[n]/30.0

anna30=1

tempa=temps[n]

tempa[tempa < 0.0] = 0.0

#print(tempa)

terma10a=(10*tempa)/termal_index1

terma10b=np.power(terma10a, alphacoef1)

#pet_th=16*alla12*anna30*terma10b

##pet_nc=16.0*terma10b

pet_nc=16*alla12*anna30*terma10b

#plt.imshow(pet_nc)

#plt.show()

#quit(-1)

evapoo1=np.array(pet_nc).astype(float)

outname1="./work2/th_evapo1_"+str(n+1)+".nc"

evapoo1=np.flipud(evapoo1)

saveraster_float32(outname1,"NetCDF", evapoo1, lon1, lon2, lat1, lat2)

return(solar_evapo)

def river_proximity(srcname, dstname):

src_ds = gdal.Open(srcname)

srcband=src_ds.GetRasterBand(1)

## jn waruning test code

#srcarray=src_ds.ReadAsArray()

#srcarray=srcarray*0

#srcband.WriteArray(srcarray)

drv = gdal.GetDriverByName('GTiff')

dst_ds = drv.Create(dstname,src_ds.RasterXSize, src_ds.RasterYSize, 1,gdal.GetDataTypeByName('Float32'))

dst_ds.SetGeoTransform( src_ds.GetGeoTransform() )

dst_ds.SetProjection( src_ds.GetProjectionRef() )

dstband = dst_ds.GetRasterBand(1)

gdal.ComputeProximity(srcband,dstband,["VALUES='0,-1'","DISTUNITS=GEO"])

srcband = None

dstband = None

src_ds = None

dst_ds = None

return(0)

def downscale_timeseries_trace_neo(outname1,targetname1, simu1, year1, year2):

print("Timeseries vs trace downscale test ...")

slice1=int(year2/100)

bigs1=[]

smalls1=[]

variable1='tmean'

print("Timeseries")

#if (variable1=='tmean'):

for variable1 in ("tmean", "prec"):

#for variable1 in ("tasmax", "tasmin"):

print (variable1)

for n in range(1,13):

#for n in range(4,10):

print(" TS variables ", n)

month1=n

maxname1="./work2/"+chelsa_timeseries_name(variable1, year1,month1)

minname1="./work4/"+chelsa_timeseries_name(variable1, year1,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls1.append(minna1)

bigs1.append(maxa1)

bigs2=[]

smalls2=[]

#if(variable1=='tmean'):

for variable1 in ("tmean", "pr"):

#for variable1 in ("tasmax", "tasmin"):

print (variable1)

for n in range(1,13):

#for n in range(4,10):

print("n TRACE variables ", n)

month1=n

maxname1="./work2/"+chelsa_trace_name(variable1, year2,month1)

minname1="./work4/"+chelsa_trace_name(variable1, year2,month1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

#print (np.shape(maxarray1))

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls2.append(minna1)

bigs2.append(maxa1)

#print("Target rasters ...")

month1=0

#mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

#maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

mintargetname1="./work4/"+targetname1

maxtargetname1="./work2/"+targetname1

#loadrgb(filename)

#writergb(salbuname, filename,arrays)

print(maxtargetname1)

print(mintargetname1)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

## note warning this is data filter, very specific to dataset

## clamps to max, min values

flatten_to=1

flatten_upper_limit=1.0

flatten_lower_limit=0.0

if(flatten_to==1):

minnatarget1 = np.where(minnatarget1 < flatten_lower_limit, flatten_lower_limit, minnatarget1)

maxatarget1 = np.where(maxatarget1 < flatten_lower_limit, flatten_lower_limit, maxatarget1)

minnatarget1 = np.where(minnatarget1 > flatten_upper_limit, flatten_upper_limit, minnatarget1)

maxatarget1 = np.where(maxatarget1 > flatten_upper_limit, flatten_upper_limit, maxatarget1)

apoints1=list(zip(*smalls1))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs1))

apoints2=list(zip(*smalls2))

bpoints2=minnatarget1

cpoints2=list(zip(*bigs2))

print("Downscaling ....")

## test only

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

##y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1) ## nogood

#y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1) ##ok

#y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints2) ##NOK

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints2)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray1=y2.reshape( bheight1, bwidth1).astype(float)

array2nc(outname1,maxtargetname1,youtarray1)

#array2raster("./output1/downscaled.tiff",maxtargetname1,youtarray1)

return(0)

def raster_reso_to_reference_raster(inputfile, referencefile, outputfile):

print(inputfile, referencefile, outputfile)

input = gdal.Open(inputfile, gdalconst.GA_ReadOnly)

inputProj = input.GetProjection()

inputTrans = input.GetGeoTransform()

reference = gdal.Open(referencefile)

referenceProj = reference.GetProjection()

referenceTrans = reference.GetGeoTransform()

bandreference = reference.GetRasterBand(1)

x = reference.RasterXSize

y = reference.RasterYSize

driver= gdal.GetDriverByName('GTiff')

output = driver.Create(outputfile,x,y,1,bandreference.DataType)

output.SetGeoTransform(referenceTrans)

output.SetProjection(referenceProj)

gdal.ReprojectImage(input,output,inputProj,referenceProj,gdalconst.GRA_Bilinear)

del output

return(0)

def downscale_own_dirs(outname1,maxtargetname1, mintargetname1, dira1, dira2, dirb1, dirb2):

print(" File against own files test ...")

bigs1=[]

smalls1=[]

for ff in os.listdir(dira1):

maxname1=dira1+"/"+ff

minname1=dira2+"/"+ff

print(minname1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls1.append(minna1)

bigs1.append(maxa1)

bigs2=[]

smalls2=[]

print("")

print("KKKKKKK")

for ff in os.listdir(dirb1):

print(ff)

maxname1=dirb1+"/"+ff

minname1=dirb2+"/"+ff

print(minname1)

max1 = gdal.Open(maxname1)

maxarray1 = np.array(max1.GetRasterBand(1).ReadAsArray())

min1 = gdal.Open(minname1)

minarray1 = np.array(min1.GetRasterBand(1).ReadAsArray())

minna1=minarray1.flatten()

maxa1=maxarray1.flatten()

bheight1=np.shape(maxarray1)[0]

bwidth1=np.shape(maxarray1)[1]

bheight2=np.shape(minarray1)[0]

bwidth2=np.shape(minarray1)[1]

smalls2.append(minna1)

bigs2.append(maxa1)

#print("Target rasters ...")

month1=0

#mintargetname1="./work4/"+chelsa_timeseries_name("gts5", year1,month1)

#maxtargetname1="./work2/"+chelsa_timeseries_name("gts5", year1,month1)

#loadrgb(filename)

#writergb(salbuname, filename,arrays)

maxtarget1 = gdal.Open(maxtargetname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray())

mintarget1 = gdal.Open(mintargetname1)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray())

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

## note warning this is data filter, very specific to dataset

## clamps to max, min values

flatten_to_01=1

if(flatten_to_01==1):

minnatarget1 = np.where(minnatarget1 < 0, 0, minnatarget1)

maxatarget1 = np.where(maxatarget1 < 0, 0, maxatarget1)

minnatarget1 = np.where(minnatarget1 > 1, 0, minnatarget1)

maxatarget1 = np.where(maxatarget1 > 1, 0, maxatarget1)

apoints1=list(zip(*smalls1))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs1))

apoints2=list(zip(*smalls2))

bpoints2=minnatarget1

cpoints2=list(zip(*bigs2))

print("Downscaling ....")

## test only

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

##y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1) ## nogood

#y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1) ##ok

#y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints2) ##NOK

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints2)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

youtarray1=y2.reshape( rows, cols).astype(float)

save_points_to_netcdf(outfilename1, "var", lons1, lats1, youtarray1)

#array2nc(outname1,maxtargetname1,youtarray1)

#array2raster("./output1/downscaled.tiff",maxtargetname1,youtarray1)

return(0)

def process_my_own_rasters(rasdir1, rasdir2, referenceneo1):

for ff in os.listdir(rasdir1):

fina1=rasdir1+"/"+ff

fina2=rasdir2+"/"+ff

print (fina1)

print (fina2)

print (referenceneo1)

raster_reso_to_reference_raster(fina1, referenceneo1, fina2)

return(0)

def gdaldem_test1(finktio1, outformat1, infile1, outfile1, par1, par2, par3=0):

k1="gdaldem "

k2=finktio1+" "

k3="-of "+outformat1+" "

kommando1=k1+k2

if(finktio1=="hillshade"):

p1="-az "+str(par1)+ " "

p2="-alt "+str(par2)+" "

kommando1=kommando1+p1+p2+infile1+" "+outfile1

print(kommando1)

#return(0)

os.system(kommando1)

return(0)

def get_chelsa_pmip3_data(simu1):

print("Get Chalsa PMIP3 data ", simu1)

for n in range(1,13):

get_chelsa_variable("pmip3",simu1, "prec",0, n)

for n in range(1,13):

get_chelsa_variable("pmip3",simu1, "tmean", 0, n)

for n in range(1,20):

get_chelsa_variable("pmip3",simu1, "bio", 0, n)

get_chelsa_variable("pmip3","DEM", 0,0, 0)

return(0);

def get_chelsa_climatologies_data():

print("Get Chelsa climatologies data ")

for n in range(1,13):

get_chelsa_variable("climatologies","", "tmean", 0, n)

for n in range(1,20):

get_chelsa_variable("climatologies","", "bio", 0, n)

for n in range(1,13):

get_chelsa_variable("climatologies","", "prec",0, n)

#https://os.zhdk.cloud.switch.ch/envicloud/chelsa/chelsa_V1/climatologies/prec/CHELSA_prec_01_V1.2_land.tif

return(0);

def get_chelsa_cruts_data(year1):

for n in range(1,13):

get_chelsa_variable("chelsa_cruts","", "prec", year1, n)

for n in range(1,13):

get_chelsa_variable("chelsa_cruts","", "tmax", year1, n)

for n in range(1,13):

get_chelsa_variable("chelsa_cruts","", "tmin", year1, n)

for n in range(1,20):

get_chelsa_variable("chelsa_cruts","", "bio", year1, n)

return(0)

def cut_chelsa_dir(chelsadira1, lon1, lat1, lon2, lat2, width1, height1, width2, height2):

global set_biovars_off

cutdir1="./work2/"

cutdir2="./work4/"

chelsadir2="./work1/"

chelsadir3="./work2/"

chelsadir4="./work3/"

chelsadir5="./work4/"

for ff in os.listdir(chelsadira1):

bobo=0

if(set_biovars_off==1):

if 'bio' in ff:

bobo=1

if(set_biovars_off==1):

if 'BIO' in ff:

bobo=1

if(bobo==0):

fina1=chelsadira1+"/"+ff

tana1=cutdir1+"/"+ff

tana1=cutdir1+"/"+ff

inf1=fina1

outf1=chelsadir2+ff

outf2=chelsadir3+ff

outf3=chelsadir4+ff

outf3=outf3.replace(".tif", ".nc")

outf4=chelsadir5+ff

outf5=outf4.replace(".tif", ".nc")

print(inf1)

clip_raster_to_outfile(inf1, outf1, lon1, lat1, lon2, lat2)

ds=0

ds = gdal.Warp(outf2, outf1, width=width1, height=height1, resampleAlg='bilinear')

ds=0

ds = gdal.Warp(outf4, outf1, width=width2, height=height2, resampleAlg='bilinear')

ds = 0

ds = gdal.Translate(outf3, outf2, format='NetCDF')

ds = gdal.Translate(outf5, outf4, format='NetCDF')

ds=0

return(0)

def listauz(dire1, patterni1):

listaa1 = glob.glob(dire1+"/"+patterni1)

return(listaa1)

def loadraster_stak(direktory1, pattern1, pattern2):

## raster stack

rasta=[]

for ff in os.listdir(direktory1):

fina1=direktory1+"/"+ff

if pattern2 in ff:

if pattern1 in ff:

print(ff)

ras, lonk, latt=load_raster_float32(fina1)

#ras2=np.array(ras).flatten().astype(float)

rasta.append(ras)

return(rasta, lonk, latt)

def loadraster_stak_arrays(direktory1, pattern1, pattern2):

## stack of raster 1d array for downscaling

rasta=[]

for ff in os.listdir(direktory1):

fina1=direktory1+"/"+ff

if pattern2 in ff:

if pattern1 in ff:

print(ff)

ras, lonk, latt=load_raster_float32(fina1)

ras2=np.array(ras).flatten().astype(float)

rasta.append(ras2)

return(rasta, lonk, latt)

def downscale_vars_directly(inname1, inname2, outname1, varname1, varname2,lon1, lon2, lat1, lat2):

workdir1="./work2"

workdir2="./work4"

bigs1, lo, la=loadraster_stak_arrays(workdir1, varname1,".tif")

bigs2, lo, la=loadraster_stak_arrays(workdir1, varname2, ".tif")

smalls1, lo2, la2=loadraster_stak_arrays(workdir2, varname1,".tif" )

smalls2, lo2, la2=loadraster_stak_arrays(workdir2, varname2,".tif" )

#warinh kelvin to celcius

for n in range(0,12):

smalls1[n]=smalls2[n]*0.1-273.16

bigs2[n]=bigs2[n]*0.1-273.16

print (len(bigs1))

print (len(bigs2))

print (len(smalls1))

print (len(smalls2))

print(len(bigs1[1]))

print(len(bigs2[1]))

print(len(smalls1[1]))

print(len(smalls2[1]))

#quit(-1)

#q1=bigs1[0]

#print(q1.shape())

maxtarget1 = gdal.Open(inname1)

maxtargetarray1 = np.array(maxtarget1.GetRasterBand(1).ReadAsArray()).astype(float)

cols = int(maxtargetarray1.shape[1])

rows = int(maxtargetarray1.shape[0])

mintarget1 = gdal.Open(inname2)

mintargetarray1 = np.array(mintarget1.GetRasterBand(1).ReadAsArray()).astype(float)

minnatarget1=mintargetarray1.flatten()

maxatarget1=maxtargetarray1.flatten()

#plt.imshow(maxtargetarray1)

#plt.show()

print(cols, rows)

#quit(-1)

#print (len(maxtargetarray1) )

#print(len(mintargetarray1) )

print(len(maxatarget1) )

print(len(minnatarget1) )

#print(varname1, varname2)

#print(inname1)

#print(inname2)

## note warning this is data filter, very specific to dataset

## clamps to max, min values

flatten_to=1

flatten_upper_limit=100.0

flatten_lower_limit=0.0

if(flatten_to==1):

minnatarget1 = np.where(minnatarget1 < flatten_lower_limit, flatten_lower_limit, minnatarget1)

maxatarget1 = np.where(maxatarget1 < flatten_lower_limit, flatten_lower_limit, maxatarget1)

#minnatarget1 = np.where(minnatarget1 > flatten_upper_limit, flatten_upper_limit, minnatarget1)

#maxatarget1 = np.where(maxatarget1 > flatten_upper_limit, flatten_upper_limit, maxatarget1)

#midas1=minnatarget1.reshape(100,300)

#plt.imshow(midas1)

#plt.show()

#quit(-1)

apoints1=list(zip(*smalls1))

bpoints1=minnatarget1

cpoints1=list(zip(*bigs1))

apoints2=list(zip(*smalls2))

bpoints2=minnatarget1

cpoints2=list(zip(*bigs2))

print("Downscaling ....")

## test only

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints1)

##y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints1) ## nogood

#y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints1) ##ok

#y2=linear_regression_multiple_vars(apoints1, bpoints1, cpoints2) ##NOK

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints2)

#y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

#print(np.shape(apoints1[0]))

#print(np.shape(bpoints1))

#print(np.shape(cpoints1[0]))

print(bpoints1)

y2=random_forest_multiple_vars(apoints1, bpoints1, cpoints2) ##ok

#y2=gam_multiple_vars(apoints1, bpoints1, cpoints2)

yo1=y2.reshape( rows, cols)

yo2=np.flipud(yo1)

print(yo1)

#plt.imshow(yo1)

#plt.show()