File:300 ma atmosphere near surface temp mean 1.png

Original file (2,192 × 1,472 pixels, file size: 284 KB, MIME type: image/png)


Summary

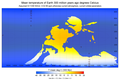

| Description | English: Mean temperature of the air near the surface, Late Pennsylvanian ice age, ca. 300 million years ago. Assumed CO2 80 ppm, otherwise present-day O2 etc., solar constant S = 1330 W/m², present-day orbital parameters. |

| Source | Own work |

| Author | Merikanto |
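The solar constant assumed for this run (1330 W/m²) is lower than the present-day value of about 1361 W/m², because the Sun was fainter 300 million years ago. The page does not say how the value was chosen. One standard estimate is Gough's (1981) approximation S(t) = S0 / (1 + 2/5 (1 - t/t_sun)), where t is the Sun's age at the time of interest and t_sun ≈ 4.57 Gyr is its present age. A minimal sketch follows; the present-day S0 and solar age are assumed inputs.

```python
def paleo_solar_constant(ma_ago, s0=1361.0, sun_age_gyr=4.57):
    """Gough (1981) approximation of the solar constant ma_ago million years ago.

    s0 (present-day solar constant in W/m^2) and sun_age_gyr are assumed values.
    """
    t_gyr = sun_age_gyr - ma_ago / 1000.0        # age of the Sun at that time, in Gyr
    return s0 / (1.0 + 0.4 * (1.0 - t_gyr / sun_age_gyr))


if __name__ == "__main__":
    # about 1326 W/m^2, close to the 1330 W/m^2 used for this experiment
    print(round(paleo_solar_constant(300), 1))
```

With S0 = 1368 W/m² instead, the same relation gives about 1333 W/m², so the value used here lies within the range this estimate produces.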

This image is based on simulation output from the cGENIE.muffin Earth system model (own experiment).

https://www.seao2.info/mymuffin.html

https://github.com/derpycode/cgenie.muffin

Land-sea information is from:

PaleoDEM Resource – Scotese and Wright (2018)

11 August 2018, by Sabin Zahirovic

https://www.earthbyte.org/paleodem-resource-scotese-and-wright-2018/

http://www.earthbyte.org/webdav/ftp/Data_Collections/Scotese_Wright_2018_PaleoDEM/Scotese_Wright_2018_Maps_1-88_1degX1deg_PaleoDEMS_nc.zip

DEM processing to mask

# process DEM file to mask
# and flatten sea

library(raster)
library(ncdf4)
library(rgdal)
library(png)

# file1="./indata1/Map21_PALEOMAP_1deg_Mid-Cretaceous_90Ma.nc"
# file1='./indata1/Map49_PALEOMAP_1deg_Permo-Triassic Boundary_250Ma.nc'
# file1="./indata1/sand.nc"
file1="./indata1/Map57_PALEOMAP_1deg_Late_Pennsylvanian_300Ma.nc"
file2="dem.nc"
file3="dem.tif"

# maskname1= "pennsylvanian_permo_300_ma_mask.png"
# demname1= "pennsylvanian_permo_300_ma_dem.png"
# maskname1= "cretaceous_90_ma_mask.png"
# demname1= "cretaceous_90_ma_dem.png"
maskname1= "300ma_mask.png"
demname1= "300ma_dem.png"

ur1<-raster(file1)
# flatten the sea: every cell below 1 m is set to 0
ur1[ur1[]<1] <- 0
# image(ur1)
# plot(ur1)

# rasters holding the longitude and latitude of each cell (extra predictors for the downscaler)
lonr1 <- init(ur1, 'x')
latr1 <- init(ur1, 'y')

crs(ur1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
writeRaster(ur1, file2, overwrite=TRUE, format="CDF", varname="Band1", varunit="m",
  longname="Band1", xname="lon", yname="lat")
writeRaster(ur1, file3, overwrite=TRUE, format="GTiff", varname="Band1", varunit="m",
  longname="Band1", xname="lon", yname="lat")

crs(lonr1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
crs(latr1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
writeRaster(lonr1, "lons.nc", overwrite=TRUE, format="CDF", varname="Band1", varunit="deg",
  longname="Band1", xname="lon", yname="lat")
writeRaster(latr1, "lats.nc", overwrite=TRUE, format="CDF", varname="Band1", varunit="deg",
  longname="Band1", xname="lon", yname="lat")

# jn warning flip!
# rdem1=flip(ur1)
# r=flip(ur1)
rdem1=ur1
r=ur1

mini=minValue(rdem1)
maxi=maxValue(rdem1)
delta=maxi-mini
# scale elevations to 0..1, the value range writePNG() expects
rdem2=(rdem1-mini)/delta

dims<-dim(r)
dims

# binary land-sea mask: 1 = land, 0 = sea
r[r[]<1] <- 0
r[r[]>0] <- 1
image(r)
# stop(-1)

print (dims[1])
print (dims[2])
# dim() returns c(nrow, ncol, nlayers). Raster values are stored row by row, so they are
# filled column-wise into an (ncol x nrow) matrix and transposed back to (nrow x ncol) below
rows=dims[2]
cols=dims[1]
# stop(-1)

mask0<-r
mask1<-mask0[]
idem1<-rdem2[]
mask2<-matrix(mask1, ncol=cols, nrow=rows )
idem2<-matrix(idem1, ncol=cols, nrow=rows )
mask3<-t(mask2)
idem3<-t(idem2)

# writePNG() returns NULL when writing to a file, so r keeps the mask raster for plot()
writePNG(mask3, maskname1)
writePNG(idem3, demname1)
plot(r)

# png('mask.png', height=nrow(r), width=ncol(r))
# plot(r, maxpixels=ncell(r))
# image(r, axes = FALSE, labels=FALSE)
# dev.off()
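For readers working in Python, the same array steps as the R script above can be sketched with NumPy: flatten cells below 1 m to sea level, derive a 0/1 land-sea mask, and scale the DEM to 0..1 for an image. This sketch assumes the elevation grid is already loaded as a 2-D array. The helper name is hypothetical, and reading the NetCDF and writing the PNG are left out.

```python
import numpy as np


def dem_to_mask_and_scaled(elev):
    """Hypothetical helper mirroring the R steps; returns (mask, scaled_dem).

    elev: 2-D array of elevations in metres (negative values are below sea level).
    """
    dem = np.where(elev < 1, 0.0, elev.astype(float))   # flatten the sea to 0
    mask = (dem > 0).astype(np.uint8)                     # 1 = land, 0 = sea
    span = dem.max() - dem.min()
    # scale to 0..1; guard against a completely flat grid
    scaled = (dem - dem.min()) / span if span > 0 else np.zeros_like(dem)
    return mask, scaled


if __name__ == "__main__":
    elev = np.array([[-3000.0, 0.5, 200.0],
                     [1500.0, -10.0, 3000.0]])
    mask, scaled = dem_to_mask_and_scaled(elev)
    print(mask)
    print(scaled)
```

Values between 0 and 1 m count as sea here, exactly as with the `< 1` threshold in the R script.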

cGENIE.muffin output post-processing

#
# cGENIE.muffin 2022.01.05 output NetCDF post-processing
# capture slices, maybe annual mean
#
# 0000.0001 5.1.2022

library(raster)
library(ncdf4)
library(rgdal)

get_genie_nc_variable_2d<-function(filename1, variable1, start1, count1)
{
  nc1=nc_open(filename1)
  # read count1 time slices of a 36 x 36 (lon x lat) field, starting at slice start1
  ncdata0 <- ncvar_get( nc1, variable1, start=c(1,1, start1), count=c(36,36,count1) )
  #print(ncdata0)
  #print(dim(ncdata0))
  lons1<- ncvar_get( nc1, "lon")
  lats1<- ncvar_get( nc1, "lat")
  nc_close(nc1)
  return(ncdata0)
}

# mean over the third (time) dimension; divides by 12, so it assumes exactly 12 slices
monthlymean<-function(daata)
{
  daataa=rowSums(daata, dims=2)/12
  return(daataa)
}

save_t21_ast_georaster_nc<-function(outname1,daataa, outvar1, longvar1, unit1)
{
  ## note warning: may not be accurate!
  ext2<-c(-180, 180, -90,90)
  # (lon x lat) array -> (lat x lon) raster, flipped so that north is up
  daataa2<-t(daataa)
  r0 <- raster(daataa2)
  r<-flip(r0)
  extent(r) <- ext2
  crs(r)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
  writeRaster(r, outname1, overwrite=TRUE, format="CDF", varname=outvar1, varunit=unit1,
    longname=longvar1, xname="lon", yname="lat")
}

capture_mean_variable<-function(infile1, outfile1, start1, count1, variable1, unit1, ofset1, coef1)
{
  udata00<-get_genie_nc_variable_2d(infile1, variable1, start1, count1)
  udata01=monthlymean(udata00)
  # optional unit conversion: (value + offset) * coefficient
  udata02=((udata01+ofset1)*coef1)
  #image(udata02)
  mean1=mean(udata02)
  print(mean1)
  outname1=outfile1
  outvar1=variable1
  longvar1=variable1
  save_t21_ast_georaster_nc(outname1,udata02, outvar1, longvar1, unit1)
}

capture_slice_variable<-function(infile1, outfile1, start1, variable1, unit1, ofset1, coef1)
{
  udata01<-get_genie_nc_variable_2d(infile1, variable1, start1, 1)
  #udata01=monthlymean(udata00)
  # optional unit conversion: (value + offset) * coefficient
  udata02=((udata01+ofset1)*coef1)
  #image(udata02)
  mean1=mean(udata02)
  print(mean1)
  outname1=outfile1
  outvar1=variable1
  longvar1=variable1
  save_t21_ast_georaster_nc(outname1,udata02, outvar1, longvar1, unit1)
}

# file1="E:/lautta_cgenie/300ma_cos_120_sl1330_2/fields_biogem_2d.nc"
# file2="E:/lautta_cgenie/300ma_cos_120_sl1330_2/fields_biogem_3d.nc"
# file1="E:/lautta_cgenie/300ma_co2_50_sol1330_2/fields_biogem_2d.nc"
# file2="E:/lautta_cgenie/300ma_co2_50_sol1330_2/fields_biogem_3d.nc"
# file1="E:/lautta_cgenie/300mya_co2_80_solar_1330/fields_biogem_2d.nc"
# file2="E:/lautta_cgenie/300mya_co2_80_solar_1330/fields_biogem_3d.nc"
# NOTE: the run selected below (co2_100, sol1340) differs from the parameters
# given in the image description (CO2 80 ppm, S=1330)
file1="E:/varasto_simutulos_2/300ma_co2_100_sol1340_orb_21k_10k/fields_biogem_2d.nc"
file2="E:/varasto_simutulos_2/300ma_co2_100_sol1340_orb_21k_10k/fields_biogem_3d.nc"
# E:\varasto_simutulos_2\300ma_co2_100_sol1340_orb_21k_10k

# near-surface air temperature, time record start1
variable1="atm_temp"
start1=10
count1=1
outfile1="atm_temp.nc"
unit1="C"
ofset1=0
coef1=1
capture_slice_variable(file1, outfile1, start1, variable1, unit1, ofset1, coef1)

# sea-ice fraction, same time record
variable1="phys_seaice"
start1=10
count1=1
outfile1="phys_seaice.nc"
unit1="fraction"
ofset1=0
coef1=1
capture_slice_variable(file1, outfile1, start1, variable1, unit1, ofset1, coef1)
# stop(-1)
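The R helpers above average the time slices and then transpose and flip the GENIE (lon × lat) field into a north-up (lat × lon) map. Here is a NumPy sketch of the same array handling. It assumes the field is shaped (lon, lat, time), as `ncvar_get` returns it here, with latitude increasing from south to north; the helper name is hypothetical.

```python
import numpy as np


def to_north_up_map(field, time_mean=True):
    """Hypothetical helper.

    field: array shaped (lon, lat, time) or (lon, lat), latitude ascending (south first).
    Returns a (lat, lon) array whose first row is the northernmost latitude.
    """
    if time_mean and field.ndim == 3:
        field = field.mean(axis=2)      # equals rowSums(x, dims=2)/12 when there are 12 slices
    return np.flipud(field.T)           # t() followed by flip() in the R version


if __name__ == "__main__":
    # 3 longitudes x 2 latitudes x 12 slices; the northern latitude holds 1, the southern 0
    demo = np.zeros((3, 2, 12))
    demo[:, 1, :] = 1.0
    print(to_north_up_map(demo))
```

Using `mean(axis=2)` rather than a fixed division by 12 keeps the result correct for any number of slices.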

Downscale w/Python 3

#
# netcdf downscaler
# also habitat test
# python 3, GDAL
#
# v 0012.0004
# 07.01.2022
#

import os

import numpy as np
import scipy
import scipy.stats
import pandas as pd
import matplotlib.pyplot as plt
import matplotlib.image as mpimg
from matplotlib import cm
from collections import OrderedDict
#import colormaps as cmaps
#import cmaps
import netCDF4 as nc
from scipy.interpolate import Rbf
from sklearn.linear_model import LinearRegression
from sklearn.preprocessing import PolynomialFeatures
from sklearn.pipeline import make_pipeline
from sklearn.ensemble import RandomForestRegressor
from sklearn.preprocessing import StandardScaler
from sklearn.model_selection import train_test_split
from sklearn import svm, metrics
from pygam import LogisticGAM
from pygam import LinearGAM

# if basemap is available, we'll use it.
# otherwise, we'll improvise later...
try:
    from mpl_toolkits.basemap import Basemap
    basemap = True
except ImportError:
    basemap = False

# control vars
# random forest
RF_estimators1=10
RF_features1=2

# downscaler
DS_method=0 ## 0 = random forest (default), 1 = GAM, 2 = cubic polynomial regression, 77/88 = averaged ensembles
DS_show=1 ## view downscaled
DS_input_log=0 ## convert "b" var to log during downscaling

# global caches shared by the helper functions below
cache_lons1=[]
cache_lats1=[]
cache_x=[]
cache_y=[]
cache_z=[]
cache_x2=[]
cache_y2=[]
cache_z2=[]

def probability_single_var( x1, y1, x2):
    print ("Specie habitation test")
    xa1=np.array(x1)
    ya1=np.array(y1)
    xa2=np.array(x2)
    sha1=np.shape(x1)
    dim2=1
    x=xa1.reshape((-1, dim2))
    y=ya1.reshape((-1, 1))
    xb=xa2.reshape((-1, dim2))
    #y=y*0.0+1
    y=x
    #print(x)
    #print(y)
    x_mean=np.mean(x)
    x_std=np.std(x)
    x_cover_std=(x-x_mean)/x_std
    y_mean=np.mean(y)
    y_std=np.std(y)
    y_cover_std=(y-y_mean)/y_std
    # target: distance of each observed value from the mean, in standard deviations
    kohde=abs(x-x_mean)/x_std
    #print (kohde)
    #print (x-x_mean)
    #quit()
    #plt.plot(x_cover_std)
    #plt.show()
    #model = LinearRegression().fit(x, kohde)
    #degree=3
    #polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())
    #model=polyreg.fit(x,kohde)
    sc = StandardScaler()
    xa = sc.fit_transform(x)
    xba = sc.transform(xb)
    #model = RandomForestRegressor(n_estimators=10, max_features=2).fit(xa,kohde)
    #model = LogisticGAM().fit(x, kohde)
    model = LinearGAM().fit(x, kohde)
    y2= model.predict(xb)
    #svm1 = svm.OneClassSVM(nu=0.1, kernel="rbf", gamma=0.5)
    #model=svm1.fit(x,y)
    #model=svm1.fit(x,kohde)
    #y2= model.predict(xb)
    y2_mean=np.mean(y2)
    y2_std=np.std(y2)
    y2_cover_std=(y2-y2_mean)/y2_std
    y3=y2_cover_std
    #y5=scipy.stats.norm.cdf(y3,y2_mean,y2_std)
    y4=scipy.stats.norm.sf(y3,y2_mean,y2_std)
    # min-max rescale to 0..1
    ymin=np.min(y4)
    deltamax=np.max(y4)-np.min(y4)
    y5=((y4-ymin)/deltamax)
    #zgrid1=np.array(zoutvalue1).reshape(1000, 400)
    #plt.plot(y5)
    #cb = plt.scatter(np.arange(1,1000), np.arange(1,400), s=60, c=zoutvalue1)
    #plt.show()
    #print(np.shape(y5[0]))
    #stop(-1)
    return(y5)

def load_xy(infname1, lonname1, latname1):
    # read point coordinates from a semicolon-separated text file
    global cache_lons1, cache_lats1, cache_x, cache_y, cache_z, cache_x2, cache_y2, cache_z2
    indf1=pd.read_csv(infname1, sep=';')
    #print(indf1)
    templons1=indf1[lonname1]
    templats1=indf1[latname1]
    cache_lons1=np.array(templons1)
    cache_lats1=np.array(templats1)
    #print (cache_lons1)
    #print (cache_lats1)
    return(0)

def preprocess_big_raster(infilename1, invarname1, sabluname1, outfilename1, soutfilename1, outvarname1,lon1, lon2, lat1, lat2, width1, height1, roto):
    # coarse copy resized to the template raster sabluname1, fine copy at width1 x height1
    gdal_cut_resize_fromnc_tonc(infilename1, sabluname1, outfilename1, invarname1,lon1, lon2, lat1, lat2)
    gdal_cut_resize_tonc(infilename1,soutfilename1 ,lon1, lon2, lat1, lat2, width1, height1)
    return(0)

def preprocess_small_single_raster(infilename1, invarname1, outfilename1, outvarname1,lon1, lon2, lat1, lat2, roto):
    print(infilename1)
    tempfilename1="./process/temp00.nc"
    tempfilename2="./process/temp01.nc"
    loadnetcdf_single_tofile(infilename1, invarname1, tempfilename1, outvarname1)
    #rotate_netcdf_360_to_180(tempfilename1, outvarname1,tempfilename2, outvarname1)
    gdal_cut_tonc(tempfilename1,outfilename1 ,lon1, lon2, lat1, lat2)
    return(0)

def preprocess_small_timed_raster(infilename1, invarname1, intime1, outfilename1, outvarname1,lon1, lon2, lat1, lat2, roto):
    tempfilename1="./process/temp00.nc"
    tempfilename2="./process/temp01.nc"
    loadnetcdf_timed_tofile(infilename1, invarname1, intime1, tempfilename1, outvarname1)
    rotate_netcdf_360_to_180(tempfilename1, outvarname1,tempfilename2, outvarname1)
    gdal_cut_tonc(tempfilename2,outfilename1 ,lon1, lon2, lat1, lat2)
    return(0)

def rotate_netcdf_360_to_180(infilename1, invarname1,outfilename1, outvarname1):
    # shift a 0..360 longitude grid to -180..180 by rolling the columns half-way around
    global cache_lons1, cache_lats1, cache_x, cache_y, cache_z
    pointsx1=[]
    pointsy1=[]
    gdal_get_nc_to_xyz_in_mem(infilename1, invarname1 )
    lonsa=cache_lons1
    latsa=cache_lats1
    pointsz1=np.array(cache_z)
    pointsx1=np.array(cache_x)
    pointsy1=np.array(cache_y)
    nlatsa1=len(latsa)
    nlonsa1=len(lonsa)
    pointsz3=np.reshape(pointsz1, (nlatsa1, nlonsa1))
    rama1=int(len(lonsa)/2)
    pointsz4=np.roll(pointsz3,rama1,axis=1)
    lonsb=lonsa-180
    save_points_to_netcdf(outfilename1, outvarname1, lonsb, latsa, pointsz4)
    return(0)

def gdal_get_nc_to_xyz_in_mem(inname1, invar1):
    global cache_lons1, cache_lats1, cache_x, cache_y, cache_z, cache_x2, cache_y2, cache_z2
    imga=loadnetcdf_single_tomem(inname1, invar1)
    #plt.imshow(imga)
    lonsa=cache_lons1
    latsa=cache_lats1
    cache_lons1=[]
    cache_lats1=[]
    cache_x=[]
    cache_y=[]
    cache_z=[]
    cache_x2=[]
    cache_y2=[]
    cache_z2=[]
    dim1=imga.shape
    nlat1=len(latsa)
    nlon1=len(lonsa)
    #plt.plot(imga)
    #plt.show()
    #print(nlat1)
    #print(nlon1)
    #quit(-1)
    #print(inname1)
    #print (nlat1)
    #quit(-1)
    # flatten the grid row by row into x, y, z lists; masked ('--') cells become 0
    for iy in range(0,nlat1):
        for ix in range(0,nlon1):
            pz1=imga[iy,ix]
            if (str(pz1) == '--'):
                cache_x.append(lonsa[ix])
                cache_y.append(latsa[iy])
                cache_z.append(0)
            else:
                cache_x.append(lonsa[ix])
                cache_y.append(latsa[iy])
                cache_z.append(pz1)
    #print(cache_z)
    cache_lons1=lonsa
    cache_lats1=latsa
    #print (pz1)
    return (cache_z)

def average_tables(daata1, daata2):
    # element-wise mean of two prediction vectors; ravel() makes (n,) and (n, 1) model
    # outputs compatible, and a new array is returned instead of overwriting daata1
    return((np.ravel(daata1)+np.ravel(daata2))/2)

def add_scalar(daata, skalar):
    pitu=len(daata)
    for n in range(0,pitu):
        daata[n]=daata[n]+skalar
    return(daata)

def gam_multiple_vars( x1, y1, x2):
    # fit a linear GAM on the coarse predictors x1 -> y1, predict at the fine predictors x2
    print ("GAM")
    xa1=np.array(x1)
    ya1=np.array(y1)
    xa2=np.array(x2)
    #print (xa1)
    # quit(-1)
    sha1=np.shape(x1)
    dim2=sha1[1]
    x=xa1.reshape((-1, dim2))
    y=ya1.reshape((-1, 1))
    xb=xa2.reshape((-1, dim2))
    #sc = StandardScaler()
    #xa = sc.fit_transform(x)
    #xba = sc.transform(xb)
    #model = LogisticGAM().fit(x, y)
    model = LinearGAM().fit(x, y)
    y2= model.predict(xb)
    return(y2)

def random_forest_multiple_vars( x1, y1, x2):
    global RF_estimators1, RF_features1
    print ("RF")
    print(RF_estimators1, RF_features1)
    #quit(-1)
    xa1=np.array(x1)
    ya1=np.array(y1)
    xa2=np.array(x2)
    #print (xa1)
    # quit(-1)
    sha1=np.shape(x1)
    dim2=sha1[1]
    x=xa1.reshape((-1, dim2))
    y=ya1.reshape((-1, 1))
    xb=xa2.reshape((-1, dim2))
    #model = LinearRegression().fit(x, y)
    #degree=3
    #polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())
    #model=polyreg.fit(x,y)
    # standardize with the coarse-grid statistics and apply the same scaling to the fine grid
    sc = StandardScaler()
    xa = sc.fit_transform(x)
    xba = sc.transform(xb)
    ## orig
    ##model = RandomForestRegressor(n_estimators=10, max_features=2).fit(xa,y)
    # ravel(): RandomForestRegressor expects a 1-D target
    model = RandomForestRegressor(n_estimators=RF_estimators1, max_features=RF_features1).fit(xa,y.ravel())
    y2= model.predict(xba)
    return(y2)

def save_points_toxyz(filename, x,y,z):
    print("Dummy function only")
    return(0)

def linear_regression_multiple_vars( x1, y1, x2):
    # cubic polynomial regression (DS_method 2)
    print ("MLR")
    xa1=np.array(x1)
    ya1=np.array(y1)
    xa2=np.array(x2)
    sha1=np.shape(x1)
    dim2=sha1[1]
    x=xa1.reshape((-1, dim2))
    y=ya1.reshape((-1, 1))
    xb=xa2.reshape((-1, dim2))
    #model = LinearRegression().fit(x, y)
    degree=3
    polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())
    model=polyreg.fit(x,y)
    y2= model.predict(xb)
    return(y2)

def linear_regression_singe_var( x1, y1, x2):
    #print (x1)
    #print(y1)
    #return(0)
    #xa1=np.array(x1)
    #ya1=np.array(y1)
    #xa2=np.array(x2)
    xa1=np.array( x1)
    ya1=np.array(y1)
    xa2=np.array(x2)
    sha1=np.shape(x1)
    dim2=sha1[1]
    x=xa1.reshape((-1, dim2))
    y=ya1.reshape((-1, 1))
    xb=xa2.reshape((-1, dim2))
    #x=xa1.reshape((-1, 1))
    #y=ya1.reshape((-1, 1))
    #xb=xa2.reshape((-1, 1))
    #print (x)
    #print (y)
    model = LinearRegression().fit(x, y)
    #model = RandomForestRegressor(n_estimators=10, max_features=2).fit(x,y)
    #degree=2
    #polyreg=make_pipeline(PolynomialFeatures(degree),LinearRegression())
    #polyreg=make_pipeline(PolynomialFeatures(degree), )
    #model=polyreg.fit(x,y)
    ## warning not xb
    y2= model.predict(xb)
    #print(y2)
    #print("LR")
    return(y2)

def save_points_to_netcdf(outfilename1, outvarname1, xoutlons1, xoutlats1, zoutvalue1):
    nlat1=len(xoutlats1)
    nlon1=len(xoutlons1)
    #print ("Save ...")
    #print (nlat1)
    #print (nlon1)
    zoutvalue2=np.array(zoutvalue1).reshape(nlat1, nlon1)
    #indata_set1=indata1
    ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')
    outlat1 = ncout1.createDimension('lat', nlat1)
    outlon1 = ncout1.createDimension('lon', nlon1)
    outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))
    outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))
    outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))
    outvalue1.units = 'Unknown'
    outlats1[:] = xoutlats1
    outlons1[:] = xoutlons1
    outvalue1[:, :] =zoutvalue2[:]
    ncout1.close()
    return 0

def gdal_cut_resize_fromnc_tonc(inname1, inname2, outname2, invar2,lon1, lon2, lat1, lat2):
    # cut inname1 to the box and resample it to the pixel size (width x height) of the template inname2
    imga=loadnetcdf_single_tomem(inname2, invar2)
    dim1=imga.shape
    height1=dim1[0]
    width1=dim1[1]
    print (width1)
    print (height1)
    jono1="gdalwarp -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+"-ts "+str(width1)+" "+str(height1)+ " " +inname1+" "+outname2
    print(jono1)
    os.system(jono1)
    return

def gdal_get_points_from_file(inname1, invar1,pointsx1,pointsy1):
    global cache_lons1, cache_lats1, cache_x, cache_y, cache_z, cache_x2, cache_y2, cache_z2
    imga=loadnetcdf_single_tomem(inname1, invar1)
    #plt.imshow(imga)
    lonsa=cache_lons1
    latsa=cache_lats1
    cache_lons1=[]
    cache_lats1=[]
    cache_x=[]
    cache_y=[]
    cache_z=[]
    cache_x2=[]
    cache_y2=[]
    cache_z2=[]
    dim1=imga.shape
    nlat1=dim1[0]
    nlon1=dim1[1]
    pitu=len(pointsx1)
    #px1=10
    #py1=45
    # note: for each point this collects every cell with lat <= py1 and lon <= px1,
    # not only the single cell that contains the point
    for n in range(0,pitu):
        px1=pointsx1[n]
        py1=pointsy1[n]
        #print('.')
        for iy in range(0,nlat1):
            if(py1>=latsa[iy]):
                for ix in range(0,nlon1):
                    if(px1>=lonsa[ix]):
                        pz1=imga[iy,ix]
                        cache_x.append(lonsa[ix])
                        cache_y.append(latsa[iy])
                        cache_z.append(pz1)
    #print(cache_z)
    cache_lons1=lonsa
    cache_lats1=latsa
    #print (pz1)
    return (cache_z)

def gdal_cut_resize_tonc(inname1, outname1, lon1, lon2, lat1, lat2, width1, height1):
    #gdalwarp -te -5 41 10 51 -ts 1000 0 input.tif output.tif
    jono1="gdalwarp -of netcdf -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+"-ts "+str(width1)+" "+str(height1)+ " " +inname1+" "+outname1
    print(jono1)
    os.system(jono1)
    return

def interpolate_cache(x_min, y_min, x_max, y_max, reso1):
    global cache_lons1, cache_lats1, cache_x, cache_y, cache_z, cache_x2, cache_y2, cache_z2
    cache_x2=[]
    cache_y2=[]
    cache_z2=[]
    cache_lons1=[]
    cache_lats1=[]
    pitu1=len(cache_z)
    raja1=pitu1
    # keep only the unmasked points
    for i in range(0,raja1):
        #print (i)
        #print (cache_z[i])
        try:
            xx=cache_x[i]
            yy=cache_y[i]
            zz=cache_z[i]
            if (str(zz) == '--'):
                raja1=raja1-1
                #print (zz)
                #print(".")
            else:
                cache_x2.append(xx)
                cache_y2.append(yy)
                cache_z2.append(zz)
        except IndexError:
            print("BRK")
            break
    # np.linspace needs an integer number of points
    lonsn=int((x_max-x_min)/reso1)
    latsn=int((y_max-y_min)/reso1)
    cache_lons1=np.linspace(x_min, x_max, lonsn)
    cache_lats1=np.linspace(y_min, y_max, latsn)
    #print (cache_z2)
    #print (cache_x2)
    #exit(-1)
    # radial basis function interpolation of the scattered points onto a regular grid
    grid_x, grid_y = np.mgrid[x_min:x_max:reso1, y_min:y_max:reso1]
    rbfi = Rbf(cache_x2, cache_y2, cache_z2)
    di = rbfi(grid_x, grid_y)
    #plt.figure(figsize=(15, 15))
    #plt.imshow(di.T, origin="lower")
    #cb = plt.scatter(df.x, df.y, s=60, c=df.por, edgecolor='#ffffff66')
    #plt.colorbar(cb, shrink=0.67)
    #plt.show()
    return(di.T)

def makepoints(imgin1, lonsin1, latsin1):
    # flatten a 2-D grid row by row into the global x, y, z lists
    global cache_x, cache_y, cache_z
    cache_x=[]
    cache_y=[]
    cache_z=[]
    dim1=imgin1.shape
    nlat1=dim1[0]
    nlon1=dim1[1]
    k=0
    for iy in range(0,nlat1):
        for ix in range(0,nlon1):
            zz=imgin1[iy,ix]
            cache_x.append(lonsin1[ix])
            cache_y.append(latsin1[iy])
            if (str(zz) == '--'):
                ## warning: masked cells get 0 so that all grids keep the same number of points
                cache_z.append(0)
                k=1
            else:
                cache_z.append(zz)
            #cache_z.append(imgin1[iy,ix])
    return(0)

def gdal_reso(inname1, outname1, reso1):
    jono1="gdalwarp -tr "+str(reso1)+" "+str(reso1)+" "+inname1+" "+outname1
    os.system(jono1)
    return(0)

def gdal_cut_tonc(inname1, outname1, lon1, lon2, lat1, lat2):
    jono1="gdalwarp -te "+str(lon1)+" "+str(lat1)+" "+str(lon2)+" "+str(lat2)+" "+inname1+" "+outname1
    print(jono1)
    os.system(jono1)
    return(0)

def savenetcdf_single_frommem(outfilename1, outvarname1, xoutvalue1,xoutlats1,xoutlons1):
    nlat1=len(xoutlats1)
    nlon1=len(xoutlons1)
    #indata_set1=indata1
    ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')
    outlat1 = ncout1.createDimension('lat', nlat1)
    outlon1 = ncout1.createDimension('lon', nlon1)
    outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))
    outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))
    outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))
    outvalue1.units = 'Unknown'
    outlats1[:] = xoutlats1
    outlons1[:] = xoutlons1
    outvalue1[:, :] =xoutvalue1[:]
    ncout1.close()
    return 0

def loadnetcdf_single_tomem(infilename1, invarname1):
    # read one 2-D variable; its lat/lon axes are left in cache_lats1 / cache_lons1
    global cache_lons1, cache_lats1
    print(infilename1)
    inc1 = nc.Dataset(infilename1)
    inlatname1="lat"
    inlonname1="lon"
    inlats1=inc1[inlatname1][:]
    inlons1=inc1[inlonname1][:]
    cache_lons1=inlons1
    cache_lats1=inlats1
    indata1_set1 = inc1[invarname1][:]
    dim1=indata1_set1.shape
    nlat1=dim1[0]
    nlon1=dim1[1]
    inc1.close()
    return (indata1_set1)

def loadnetcdf_single_tofile(infilename1, invarname1, outfilename1, outvarname1):
    inc1 = nc.Dataset(infilename1)
    inlatname1="lat"
    inlonname1="lon"
    inlats1=inc1[inlatname1][:]
    inlons1=inc1[inlonname1][:]
    indata1_set1 = inc1[invarname1][:]
    dim1=indata1_set1.shape
    nlat1=dim1[0]
    nlon1=dim1[1]
    #indata_set1=indata1
    ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')
    outlat1 = ncout1.createDimension('lat', nlat1)
    outlon1 = ncout1.createDimension('lon', nlon1)
    outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))
    outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))
    outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))
    outvalue1.units = 'Unknown'
    outlats1[:] = inlats1
    outlons1[:] = inlons1
    outvalue1[:, :] = indata1_set1[:]
    ncout1.close()
    return 0

def loadnetcdf_timed_tofile(infilename1, invarname1, intime1, outfilename1, outvarname1):
    # copy time slice intime1 of a (time, lat, lon) variable into a 2-D NetCDF file
    inc1 = nc.Dataset(infilename1)
    inlatname1="lat"
    inlonname1="lon"
    inlats1=inc1[inlatname1][:]
    inlons1=inc1[inlonname1][:]
    indata1 = inc1[invarname1][:]
    dim1=indata1.shape
    nlat1=dim1[1]
    nlon1=dim1[2]
    indata_set1=indata1[intime1]
    #img01=indata_set1
    #img1.replace(np.nan, 0, inplace=True)
    #img1 = np.where(isna(img10), 0, img10)
    #where_are_NaNs = np.isnan(img1)
    #img1[where_are_NaNs] = 99
    #img1 = np.where(np.isnan(img01), 0, img01)
    ncout1 = nc.Dataset(outfilename1, 'w', format='NETCDF4')
    outlat1 = ncout1.createDimension('lat', nlat1)
    outlon1 = ncout1.createDimension('lon', nlon1)
    outlats1 = ncout1.createVariable('lat', 'f4', ('lat',))
    outlons1 = ncout1.createVariable('lon', 'f4', ('lon',))
    outvalue1 = ncout1.createVariable(outvarname1, 'f4', ('lat', 'lon',))
    outvalue1.units = 'Unknown'
    outlats1[:] = inlats1
    outlons1[:] = inlons1
    outvalue1[:, :] = indata_set1
    ncout1.close()
    return 0

# downscaler function!

# def downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, outfilenames1,soutfilenames1,invarnames1,outvarnames1, soutvarnames1):

def downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, invarnames1):
    global DS_method, DS_show, DS_input_log
    debug=0
    #if(input_rasters==1):
    #    os.system("del *.nc")
    outfilenames1=[]
    outvarnames1=[]
    soutfilenames1=[]
    soutvarnames1=[]
    huba0=len(infilenames1)
    for n in range (0,huba0):
        sandersson1=invarnames1[n]
        outvarnames1.append(sandersson1)
        soutvarnames1.append(sandersson1)
    ## big raster??
    # file 0 is the coarse variable to be downscaled, cut to the area
    outfilenames1.append("./process/"+areaname+"_"+invarnames1[0]+".nc")
    if(input_rasters==1):
        #preprocess_small_timed_raster(infilenames1[0], invarnames1[0], intime1, outfilenames1[0], outvarnames1[0], lon1, lon2, lat1, lat2,roto)
        preprocess_small_single_raster(infilenames1[0], invarnames1[0], outfilenames1[0], outvarnames1[0], lon1, lon2, lat1, lat2,roto)
        #quit(-1)
    huba=len(infilenames1)
    # files 1..n-1 are predictors; each is written twice: resampled to the coarse
    # grid of file 0 (ofnamee) and at the fine size width1 x height1 (sofnamee)
    for n in range (1,huba):
        ofnamee="./process/"+areaname+"_"+outvarnames1[n]+"_"+str(n)+".nc"
        sofnamee="./process/"+areaname+"_"+outvarnames1[n]+"_"+str(n)+"_s.nc"
        print(ofnamee)
        print(sofnamee)
        outfilenames1.append(ofnamee)
        soutfilenames1.append(sofnamee)
        if(input_rasters==1):
            print("PP ",infilenames1[n])
            preprocess_big_raster(infilenames1[n], invarnames1[n], outfilenames1[0], outfilenames1[n], soutfilenames1[n-1], outvarnames1[n], lon1, lon2, lat1, lat2, width1, height1, roto)
    imgs=[]
    pointsx=[]
    pointsy=[]
    pointsz=[]
    mlats=[]
    mlons=[]
    spointsx=[]
    spointsy=[]
    spointsz=[]
    simgs=[]
    slats=[]
    slons=[]
    # coarse grids as point lists
    len1=len(outfilenames1)
    for n in range(0,len1):
        imgs.append(loadnetcdf_single_tomem(outfilenames1[n], "Band1"))
        mlons.append(cache_lons1)
        mlats.append(cache_lats1)
        makepoints(imgs[n], mlons[n], mlats[n])
        pointsx.append(cache_x)
        pointsy.append(cache_y)
        pointsz.append(cache_z)
    # fine grids as point lists
    len1=len(soutfilenames1)
    for n in range(0,len1):
        simgs.append(loadnetcdf_single_tomem(soutfilenames1[n], "Band1"))
        slons.append(cache_lons1)
        slats.append(cache_lats1)
        makepoints(simgs[n], slons[n], slats[n])
        spointsx.append(cache_x)
        spointsy.append(cache_y)
        spointsz.append(cache_z)
    if(debug==1):
        print("Specie habitation.")
        load_xy("humanlgm.txt","Lon", "Lat")
        klats1=cache_lats1
        klons1=cache_lons1
        spointszout=[]
        ppointsx=[]
        ppointsy=[]
        ppointsz=[]
        gdal_get_points_from_file(outfilenames1[1], invarnames1[1], klons1, klats1)
        ppointsx.append(cache_x)
        ppointsy.append(cache_y)
        ppointsz.append(cache_z)
        gdal_get_points_from_file(outfilenames1[2], invarnames1[2], klons1, klats1)
        ppointsx.append(cache_x)
        ppointsy.append(cache_y)
        ppointsz.append(cache_z)
        #gdal_get_points_from_file(outfilenames1[2], invarnames1[2], klons1, klats1)
        #ppointsx.append(cache_x)
        #ppointsy.append(cache_y)
        #ppointsz.append(cache_z)
        #bpoints1=ppointsz[0]
        #apoints1=ppointsz[0]
        #cpoints1=spointsz[0]
        bpoints1=ppointsz[0]
        apoints1=ppointsz[0]
        cpoints1=spointsz[0]
        spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))
        bpoints1=ppointsz[1]
        apoints1=ppointsz[1]
        cpoints1=spointsz[1]
        spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))
        #bpoints1=ppointsz[2]
        #apoints1=ppointsz[2]
        #cpoints1=spointsz[2]
        #spointszout.append(probability_single_var(apoints1, bpoints1, cpoints1))
        odex1=0
        sdataout=spointszout[0]*spointszout[1]
        pointsxout1=spointsx[0]
        pointsyout1=spointsy[0]
        slonsout1=slons[0]
        slatsout1=slats[0]
        save_points_to_netcdf(sresultfilename1, sresultvarname1, slonsout1, slatsout1, sdataout)
        plt.imshow( np.array(sdataout).reshape(len(slatsout1), len(slonsout1) ) )
        plt.show()
        return(1)
    ## main section of the downscaler
    # training predictors: coarse copies of files 1..n-1 (apoints1); target: coarse
    # file 0 (bpoints1); prediction inputs: fine copies of files 1..n-1 (cpoints1)
    sla=[]
    len1=len(pointsz)
    for n in range(1,len1):
        sla.append(pointsz[n])
    slb=[]
    for n in range(0,len1-1):
        print (n)
        slb.append(spointsz[n])
    apoints1=list(zip(*sla))
    bpoints1=pointsz[0]
    cpoints1=list(zip(*slb))
    spointszout=[]
    #if(DS_input_log==0):
    #if(DS_input_log==1):
    #    spointszout.append(np.exp(random_forest_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))
    #    spointszout.append(np.exp(gam_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))
    #    spointszout.append(np.exp(linear_regression_multiple_vars(apoints1, np.log(bpoints1), cpoints1)))
    #sdataout=average_tables(spointszout[0],spointszout[1])
    if(DS_method==0):
        spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))
        sdataout=spointszout[0]
    if(DS_method==1):
        spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))
        sdataout=spointszout[0]
    if(DS_method==2):
        spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))
        sdataout=spointszout[0]
    if(DS_method==77):
        spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))
        spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))
        spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))
        #sdataout=average_tables(spointszout[0],spointszout[1] )
        #sdataout=average_tables(spointszout[0],spointszout[1], spointszout[2])
        # net weights: RF 1/4, GAM 1/2, polynomial 1/4
        sdataout1=average_tables(spointszout[0],spointszout[1] )
        sdataout2=average_tables(spointszout[1],spointszout[2] )
        sdataout=average_tables(sdataout1, sdataout2 )
    if(DS_method==88):
        spointszout.append(random_forest_multiple_vars(apoints1, bpoints1, cpoints1))
        spointszout.append(gam_multiple_vars(apoints1, bpoints1, cpoints1))
        spointszout.append(linear_regression_multiple_vars(apoints1, bpoints1, cpoints1))
        #sdataout=average_tables(spointszout[0],spointszout[1] )
        #sdataout=average_tables(spointszout[0],spointszout[1], spointszout[2])
        # net weights: RF 1/4, GAM 1/2, polynomial 1/400 (they do not sum to 1)
        sdataout1=average_tables(spointszout[0],spointszout[1] )
        sdataout2=average_tables(10*spointszout[1],1*spointszout[2]/10 )/10
        sdataout=average_tables(sdataout1, sdataout2 )
    pointsxout1=spointsx[0]
    pointsyout1=spointsy[0]
    slonsout1=slons[0]
    slatsout1=slats[0]
    save_points_to_netcdf(sresultfilename1, sresultvarname1, slonsout1, slatsout1, sdataout)
    #plt.register_cmap(name='viridis', cmap=cmaps.viridis)
    #plt.set_cmap(cm.viridis)
    #cmaps = OrderedDict()
    #kolormap='jet'
    #kolormap='Spectral_r'
    #kolormap='gist_rainbow_r'
    #kolormap='BrBG'
    #kolormap='rainbow'
    kolormap='viridis_r'
    if(DS_show==1):
        plt.imshow(np.array(sdataout).reshape(len(slatsout1), len(slonsout1)) , cmap=kolormap)
        plt.ylim(0, len(slatsout1))
        plt.show()
    return(0)

# attempt to post-process rain in mountains!
# POST-PROCESSOR warning: experiment only
def manipulate_rainfall_data(demname, rainname, oname2):
    # try to exaggerate rainfall in mountains
    dem1=loadnetcdf_single_tomem(demname,"Band1")
    rain0=loadnetcdf_single_tomem(rainname,"Rain")
    rain1=np.flipud(rain0)
    shape1=np.shape(rain1)
    print(shape1)
    dem2=np.ravel(dem1)
    rain2=np.ravel(rain1)
    len1=len(rain2)
    #print (len1)
    outta1=rain2*0
    limith1=1200
    # above limith1 metres, rainfall is multiplied by elevation / limith1
    for n in range(0,len1):
        r=rain2[n]
        o=r
        d=dem2[n]
        rk=d/limith1
        if (d>limith1):
            o=r*rk
        outta1[n]=o
    out00=np.reshape(outta1,shape1)
    #out1=np.flipud(out00)
    out1=out00
    savenetcdf_single_frommem(oname2, "Rain", out1,cache_lats1, cache_lons1)
    return(out1)

def match_raster(iname,matchname, oname):
    loadnetcdf_single_tomem(iname, "Band1")
    lon1=np.min(cache_lons1)
    lon2=np.max(cache_lons1)
    lat1=np.min(cache_lats1)
    lat2=np.max(cache_lats1)
    gdal_cut_resize_fromnc_tonc(iname, matchname, oname, "Rain",lon1, lon2, lat1, lat2)

# attempt to post-process rain in mountains!
# POST-PROCESSOR warning: experiment 2 only
# enhances big rainfalls, diminishes small rainfalls
# based on y=kx+b
def manipulate_rainfall_data_2(dname1, dname2, oname2, ofset1, ofset2, k1):
    # try to exaggerate rainfall in mountains
    dat10=loadnetcdf_single_tomem(dname1,"Band1")
    dat20=loadnetcdf_single_tomem(dname2,"Rain")
    shape1=np.shape(dat10)
    dee1=np.ravel(dat10)
    dee2=np.ravel(dat20)
    len1=len(dee1)
    len2=len(dee2)
    outta1=dee2*0
    a=ofset1
    b=ofset2
    k=k1
    # mean of the two inputs, shifted by b, then stretched by k around the pivot a:
    # with k > 1, values above a grow and values below a shrink
    for n in range(0,len1):
        d1=dee1[n]
        d2=dee2[n]
        o0=(d1+d2)/2
        o1=o0+b
        o2=(o1-a)*k
        o3=o2+a
        outta1[n]=o3
    out00=np.reshape(outta1,shape1)
    #out1=np.flipud(out00)
    out1=out00
    if(DS_show==1):
        kolormap='viridis_r'
        plt.imshow(out1, cmap=kolormap)
        #plt.ylim(0, len(slatsout1))
        plt.show()
    savenetcdf_single_frommem(oname2, "Rain", out1,cache_lats1, cache_lons1)
    return(out1)

# main program

input_rasters=1
debug=0 ## human habitation test
# acquire basic data, loadfiles=1
loadfiles=1
intime1=1

# lon1=-4.5
# lon2=10.0
# lat1=42.0
# lat2=50.5
lon1=-180
lon2=180
lat1=-90
lat2=90

# size of the fine output grid in pixels
width1=640
height1=320
# jn
# width1=112
# height1=130
# JN WARNING
# width1=200
# height1=200

roto=1
areaname="planet"
sresultfilename1="./output/dskaled1.nc"
sresultvarname1="atm_temp"

# file 0: coarse variable to downscale; files 1..: predictors (DEM, longitude, latitude)
infilenames1=[]
invarnames1=[]
infilenames1.append('atm_temp.nc')
# infilenames1.append('./indata/Map19_PALEOMAP_1deg_Late_Cretaceous_80Ma.nc')
infilenames1.append('dem.nc')
infilenames1.append('lons.nc')
infilenames1.append('lats.nc')
# infilenames1.append('./process/accu_dem_1.nc')
# infilenames1.append('./process/accu_windir.nc')
invarnames1.append("atm_temp")
invarnames1.append("Band1")
invarnames1.append("Band1")
invarnames1.append("Band1")

RF_estimators1=100
# RF_features1=4
RF_features1=1
# 2 = cubic polynomial regression
DS_method=2
DS_show=1
DS_input_log=0

# the helper functions write intermediate and result files into these folders
os.makedirs("./process", exist_ok=True)
os.makedirs("./output", exist_ok=True)

downscale_data_1(input_rasters,loadfiles,intime1,lon1,lon2,lat1,lat2,width1,height1,roto,areaname,sresultfilename1,sresultvarname1,infilenames1, invarnames1)
print(".")
quit(-1)
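The downscaler above learns a statistical relation on the coarse model grid, between the simulated variable and predictors that also exist at fine resolution (DEM, longitude, latitude). It then applies that relation on the fine grid. The script itself uses a cubic polynomial (`DS_method=2`), a random forest or a GAM. The sketch below swaps in an ordinary least-squares fit with NumPy only, on synthetic data, to show the principle. The function name, the use of |latitude| as a predictor and the synthetic 6.5 K/km lapse rate are assumptions for illustration.

```python
import numpy as np


def fit_and_downscale(coarse_predictors, coarse_target, fine_predictors):
    """Least-squares version of the downscaling idea (illustrative only).

    coarse_predictors: (n_coarse, k) array, e.g. columns elevation, |latitude|
    coarse_target:     (n_coarse,) array, e.g. near-surface temperature
    fine_predictors:   (n_fine, k) array with the same columns at fine resolution
    """
    # add an intercept column and solve min ||A c - y|| on the coarse grid
    a = np.column_stack([np.ones(len(coarse_predictors)), coarse_predictors])
    coef, *_ = np.linalg.lstsq(a, coarse_target, rcond=None)
    # apply the fitted relation to the fine-grid predictors
    b = np.column_stack([np.ones(len(fine_predictors)), fine_predictors])
    return b @ coef, coef


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # synthetic coarse grid: temperature falls 6.5 K per km of elevation and toward the poles
    elev = rng.uniform(0, 3000, 200)
    alat = rng.uniform(0, 90, 200)
    temp = 25.0 - 0.0065 * elev - 0.3 * alat
    fine_x = np.array([[0.0, 0.0], [2000.0, 45.0]])
    fine_t, coef = fit_and_downscale(np.column_stack([elev, alat]), temp, fine_x)
    print(np.round(coef, 4))    # intercept, per-metre and per-degree coefficients
    print(np.round(fine_t, 2))
```

Because the predictors are known on the fine grid, mountains narrower than a coarse GENIE cell receive their own temperature. That is the point of feeding `dem.nc` into the downscaler.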

Post-process downscaler output

library(raster)
library(ncdf4)
library(rgdal)

r1=raster("./output/dskaled1.nc")
crs1<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
# ext1<- extent(0, 360, -90, 90)
ext1<- extent(-180, 180, -90, 90)
extent(r1) <- ext1
str(r1)
ur1<-r1

# ur2<-rotate(r1)
# extent(ur2) <- ext1
# sabluna1<-raster(nrows=360, ncols=360, xmn=0, xmx=180, ymn=-90.5, ymx=90.5)
# sabluna1<-raster(nrows=360, ncols=360)
# extent(sabluna1) <- ext1
# ur1<-resample(ur2,sabluna1)
# extent(ur1) <- ext1
# image(ur1)

crs(ur1)<-"+proj=longlat +datum=WGS84 +no_defs +ellps=WGS84 +towgs84=0,0,0"
writeRaster(ur1, "./output/dskaled2.nc", overwrite=TRUE, format="CDF", varname="T", varunit="degC",
  longname="T", xname="lon", yname="lat")
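Two pieces of code on this page handle grids stored with longitudes 0..360 instead of -180..180: the commented-out `rotate()` lines above and `rotate_netcdf_360_to_180()` in the Python script. For an evenly spaced global grid whose first column lies at 0° and which has an even number of columns, the conversion is a half-turn roll of the columns plus subtracting 180° from the longitude axis. A NumPy sketch under those assumptions (the function name is hypothetical):

```python
import numpy as np


def lon360_to_lon180(data, lons):
    """Roll a (lat, lon) grid from 0..360 to -180..180 longitudes.

    Assumes lons are evenly spaced, start at 0 and have an even count.
    """
    half = len(lons) // 2
    rolled = np.roll(data, half, axis=1)    # the 180-degree column moves to the front
    return rolled, np.asarray(lons) - 180.0


if __name__ == "__main__":
    lons = np.arange(0, 360, 90)                       # 0, 90, 180, 270
    data = np.array([[0.0, 90.0, 180.0, 270.0]])       # each cell stores its own longitude
    rolled, new_lons = lon360_to_lon180(data, lons)
    print(new_lons)
    print(rolled)
```

After the roll, each value still sits on its original meridian (for example 270° becomes -90°). The R script instead simply relabels the extent as -180..180, which is only correct if the input grid already starts at -180°.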

Licensing

I, the copyright holder of this work, hereby publish it under the following license:

This file is licensed under the Creative Commons Attribution-Share Alike 4.0 International license.


- You are free:

- to share – to copy, distribute and transmit the work

- to remix – to adapt the work

- Under the following conditions:

- attribution – You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

- share alike – If you remix, transform, or build upon the material, you must distribute your contributions under the same or compatible license as the original.

https://creativecommons.org/licenses/by-sa/4.0 (CC BY-SA 4.0)

File history


| Date/Time | Dimensions | User | Comment |
|---|---|---|---|
| 19:47, 5 January 2022 (current) | 2,192 × 1,472 (284 KB) | Merikanto | update |
| 14:42, 5 January 2022 | 2,048 × 1,360 (486 KB) | Merikanto | Uploaded own work with UploadWizard |

